Mind The Graph Scientific Blog is meant to help scientists learn how to communicate science in an uncomplicated way.

Scientific illustration is seen as a universal language that bridges gaps across disciplines and geographies. Visual communication makes complex concepts accessible and easier to understand, but different cultural contexts and norms can significantly impact how we see, understand, and respond to visuals. That’s why it is important for illustrators, researchers, and educators to consider how […]

Artificial intelligence (AI) is transforming drug discovery at a breathtaking pace. From designing novel molecules to optimizing clinical trials, AI is ushering in a new era of precision medicine. Just this year, an AI-patented drug for obsessive-compulsive disorder entered human testing, and companies like Recursion are leveraging supercomputers like BioHive-2 to accelerate drug design. But here’s the challenge: AI’s complex outputs—think neural networks, molecular simulations, or trial data—can be daunting to communicate. Enter Mind the Graph, a game-changing platform that empowers scientists to create stunning, scientifically accurate infographics to share these breakthroughs with the world.

In this blog, we’ll explore why visualization is critical for AI-driven research, how Mind the Graph makes it easy, and how you can use it to boost the impact of your work. Let’s dive in!

Why Visualization Matters in AI-Driven Research

AI is revolutionizing pharma, but its outputs are often dense: intricate algorithms, 3D molecular models, or multi-phase trial results. For example, NVIDIA’s BioHive-2 uses billion-parameter AI models to predict molecular properties, speeding up drug development. Communicating these findings to researchers, clinicians, or even patients requires clarity and engagement.

That’s where infographics shine. Studies show that articles with visuals like graphical abstracts see a 120% increase in citations—a stat Mind the Graph users rave about. A well-crafted infographic can distill a neural network’s role in identifying a cancer drug target into a single, compelling image. It’s not just about aesthetics; it’s about making complex science accessible to journal editors, conference attendees, or the public.

How Mind the Graph Empowers Scientists

Mind the Graph is built for scientists, by scientists. With over 70,000 scientifically accurate illustrations across 80+ fields like biology, pharmacology, and bioinformatics, it’s a treasure trove for visualizing AI-driven research. Here’s why researchers love it:

Let’s walk through an example. Imagine you’re preparing a graphical abstract for a study using AI to identify a new Alzheimer’s drug target. With Mind the Graph, you can:

The result? A clear, engaging graphic that boosts your study’s visibility and impact.

Tips for Maximizing Mind the Graph

Ready to create your own AI-driven research visuals? Here are some tips:

Communicate Science with Impact

AI is reshaping drug discovery, and tools like Mind the Graph are helping scientists share these breakthroughs with clarity and impact. Whether you’re visualizing a neural network’s role in cancer drug design or a clinical trial’s outcomes, Mind the Graph’s 40,000+ illustrations and user-friendly platform make it a must-have for researchers in medicine, biology, and pharma.

Ready to elevate your research communication? Try Mind the Graph’s free plan today and create a visual for your next project. Share your infographics on X to join the conversation about AI in healthcare, or explore the Mind the Graph blog for more science communication tips. Let’s make complex science simple—and stunning.

What’s your biggest challenge in visualizing research? Share in the comments or let’s discuss how Mind the Graph can help!

In today’s fast-paced scientific landscape, publishing your research is only half the battle. The real challenge? Communicating your findings clearly and effectively so they reach and resonate with your target audience.

This is where science drawings and scientific illustrations come into play. These visual tools help simplify complex concepts, boost reader engagement, and increase the visibility and citation of your work. Whether you’re preparing a manuscript, a conference poster, or a grant proposal, science drawings can make your research more accessible and impactful.

A science drawing, also known as a scientific illustration, is a visual representation of scientific data, concepts, or methods. These visuals can take many forms, including:

These illustrations help convey complex information visually, enhancing comprehension for both experts and non-specialists alike.

Explore a variety of real-world science drawings created by researchers at MindTheGraph’s gallery for inspiration.

One of the key benefits of using science drawings in research is that they can break down complex ideas into simple, digestible visuals. Instead of lengthy descriptions, a scientific illustration can instantly show relationships, workflows, or mechanisms.

Visual elements like scientific illustrations not only make your paper more appealing but also help readers retain information better. A well-crafted science drawing can increase the time spent on your article and encourage deeper exploration.

Studies show that articles with visual content are more likely to be shared and cited. By using science drawings, you can increase your paper’s visibility across academic databases and search engines.

Science is global. Scientific illustrations serve as a universal language, making your research understandable to a broader, international audience—even those outside your immediate field.

Thanks to tools like MindTheGraph, creating high-quality science drawings is easier than ever. No design experience needed—just choose from thousands of scientifically accurate icons and templates, tailored to your research field.

Each type of science drawing helps clarify and reinforce your key findings for your target audience.

Creating scientific illustrations doesn’t have to be time-consuming or require graphic design skills. Platforms like MindTheGraph are designed specifically for scientists, offering:

Whether you’re in biology, medicine, chemistry, or environmental science, these tools allow you to produce professional science drawings quickly and efficiently.

In today’s competitive academic environment, how you present your research is just as important as the data itself. Incorporating science drawings and scientific illustrations into your papers helps you communicate more effectively, increase citations, and reach a wider audience.

Before you submit your next paper, ask yourself:

Can I use a science drawing to make this clearer?

If the answer is yes, take the opportunity to elevate your research—both in appearance and impact.

Ready to transform your research with professional scientific illustrations? Explore templates, examples, and easy-to-use tools at MindTheGraph and start creating publication-ready visuals today.

Power analysis in statistics is an essential tool for designing studies that yield accurate and reliable results, guiding researchers in determining optimal sample sizes and effect sizes. This article explores the significance of power analysis in statistics, its applications, and how it supports ethical and effective research practices.

Power analysis in statistics refers to the process of determining the likelihood that a study will detect an effect or difference when one truly exists. In other words, power analysis helps researchers ascertain the sample size needed to achieve reliable results based on a specified effect size, significance level, and statistical power.
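
As a rough illustration of how these quantities interact, the sketch below estimates the sample size per group for a two-group comparison of means. It uses the standard normal approximation, n = 2 * ((z for the significance level + z for the power) / effect size)^2. The function name `required_n_per_group` and its defaults are assumptions made for this example, not part of any particular tool. Software that works with the t distribution will typically report a slightly larger number.

```python
import math
from statistics import NormalDist

def required_n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison of means.

    effect_size is a standardized mean difference (Cohen's d).
    Uses the normal approximation, so it slightly underestimates
    the n that a t-test based calculation would give.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value for the two-sided test
    z_power = z.inv_cdf(power)            # quantile matching the target power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)                   # round up to a whole participant

n_medium = required_n_per_group(0.5)   # medium effect (d = 0.5)
n_small = required_n_per_group(0.2)    # small effect (d = 0.2)
print(n_medium, n_small)
```

Smaller effects demand far larger samples, which is why the expected effect size should be settled before recruitment begins.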

By grasping the concept of power analysis, researchers can significantly enhance the quality and impact of their statistical studies.

The basics of power analysis in statistics revolve around understanding how sample size, effect size, and statistical power interact to ensure meaningful and accurate results. Here’s an overview of these fundamentals:

Power analysis involves several critical components that influence the design and interpretation of statistical studies. Understanding these components is essential for researchers aiming to ensure that their studies are adequately powered to detect meaningful effects. Here are the key components of power analysis:

Power analysis in statistics is vital for ensuring sufficient sample size, enhancing statistical validity, and supporting ethical research practices. Here are several reasons why power analysis is important:

Power analysis is essential not only for detecting true effects but also for minimizing the risk of Type II errors in statistical research. Understanding Type II errors, their consequences, and the role of power analysis in avoiding them is crucial for researchers.
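
To make the link between power and Type II errors concrete, here is a minimal sketch that approximates the power of a two-group comparison for several sample sizes and reports the matching Type II error risk (1 minus power). The helper `approx_power` is a hypothetical name, and the calculation assumes a normal approximation with a standardized effect size of 0.5 chosen purely for illustration.

```python
from statistics import NormalDist

def approx_power(n_per_group, effect_size, alpha=0.05):
    """Approximate power of a two-sided, two-sample comparison of means.

    Normal approximation; the negligible chance of rejecting in the
    wrong direction is ignored.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    noncentrality = effect_size * (n_per_group / 2) ** 0.5
    return z.cdf(noncentrality - z_alpha)

for n in (20, 40, 63, 100):
    power = approx_power(n, effect_size=0.5)
    beta = 1 - power                      # probability of a Type II error
    print(f"n={n:>3}  power={power:.2f}  Type II risk={beta:.2f}")
```

Reading the output from top to bottom shows the Type II risk shrinking as the sample grows, which is the trade-off a power analysis is meant to expose.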

Low power in a statistical study significantly increases the risk of committing Type II errors, which can lead to various consequences, including:

Designing an efficient study is critical for obtaining valid results while maximizing resource utilization and adhering to ethical standards. This involves balancing available resources and addressing ethical considerations throughout the research process. Here are key aspects to consider when aiming for an efficient study design:

Conducting a power analysis is essential for designing statistically robust studies. Below are the systematic steps to conduct power analysis effectively.

The Mind the Graph platform is a powerful tool for scientists looking to enhance their visual communication. With its user-friendly interface, customizable features, collaborative capabilities, and educational resources, Mind the Graph streamlines the creation of high-quality visual content. By leveraging this platform, researchers can focus on what truly matters: advancing knowledge and sharing their discoveries with the world.

The analysis of variance (ANOVA) is a fundamental statistical method used to analyze differences among group means, making it an essential tool in research across fields like psychology, biology, and social sciences. It enables researchers to determine whether any of the differences between means are statistically significant. This guide will explore how the analysis of variance works, its types, and why it’s crucial for accurate data interpretation.

The analysis of variance is a statistical technique used to compare the means of three or more groups, identifying significant differences and providing insights into variability within and between groups. It helps the researcher understand whether the variation in group means is greater than the variation within the groups themselves, which would indicate that at least one group mean is different from the others. ANOVA operates on the principle of partitioning total variability into components attributable to different sources, allowing researchers to test hypotheses about group differences. ANOVA is widely used in various fields such as psychology, biology, and social sciences, allowing researchers to make informed decisions based on their data analysis.
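
The partitioning idea can be sketched in a few lines of Python. The example below splits the variability of three small, made-up groups into a between-group and a within-group part and forms the F statistic from their ratio. The function name `one_way_anova_f` and the data are assumptions for illustration only; a real analysis would also need a p-value from the F distribution.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (ss_between, ss_within, f_statistic) for a list of groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    # Variability of group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variability of observations around their own group mean
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, f_stat

# Hypothetical measurements for three groups (illustrative only)
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
ss_b, ss_w, f_stat = one_way_anova_f(groups)
print(ss_b, ss_w, f_stat)
```

A large F means the group means are spread out more than the scatter inside the groups would suggest.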

To delve deeper into how ANOVA identifies specific group differences, check out Post-Hoc Testing in ANOVA.

There are several reasons for performing ANOVA. It compares the means of three or more groups at the same time, avoiding the inflated Type I error rate that comes from running many separate t-tests. It identifies whether statistically significant differences exist among the group means and, when they do, allows follow-up with post-hoc tests to identify which particular groups differ. ANOVA also lets researchers assess the impact of more than one independent variable, especially with Two-Way ANOVA, by analyzing both the individual effects and the interaction effects between variables. By breaking the data down into between-group and within-group variance, it shows how much variability can be attributed to group differences versus randomness. Moreover, ANOVA has high statistical power, meaning it is efficient at detecting true differences in means when they exist, which strengthens the reliability of the conclusions drawn. It is also fairly robust to certain violations of its assumptions, such as normality and equal variances, which makes it applicable to a wide range of practical scenarios and an essential tool for researchers in any field that relies on group comparisons.
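
The inflation of the Type I error rate under repeated t-tests can be shown with simple arithmetic. The sketch below counts the pairwise comparisons among k groups and computes the chance of at least one false positive, 1 - (1 - alpha)^m. It assumes the tests are independent, which is a simplification, and the function name `familywise_error_rate` is chosen for this example.

```python
from math import comb

def familywise_error_rate(n_groups, alpha=0.05):
    """Chance of at least one false positive across all pairwise tests.

    Assumes the tests are independent, which is a simplification:
    pairwise t-tests on shared groups are correlated.
    """
    n_tests = comb(n_groups, 2)           # number of pairwise comparisons
    return 1 - (1 - alpha) ** n_tests

for k in (3, 4, 5):
    print(k, comb(k, 2), round(familywise_error_rate(k), 3))
```

Even with only three groups the risk is well above the nominal 5%, which is the motivation for a single omnibus test.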

ANOVA is based on several key assumptions that must be met to ensure the validity of the results. First, the data should be normally distributed within each group being compared; this means that the residuals or errors should ideally follow a normal distribution, although in larger samples the Central Limit Theorem may mitigate the effects of non-normality. Second, ANOVA assumes homogeneity of variances: the variances of the groups being compared should be approximately equal. Levene’s test is one way to evaluate this. Third, the observations need to be independent of one another; in other words, the data gathered from one participant or experimental unit should not influence that of another. Last but not least, ANOVA is designed for continuous dependent variables, measured on either an interval or ratio scale. Violations of these assumptions can result in erroneous inferences, so it is important that researchers identify and correct them before applying ANOVA.
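
For readers curious about what a homogeneity check actually computes, here is a minimal sketch of the Levene statistic in its mean-centered form: a one-way ANOVA F computed on the absolute deviations of each value from its group mean. The function name `levene_statistic` and the data are assumptions for illustration. The sketch stops at the statistic; turning it into a p-value requires the F distribution, which a statistics package would normally handle.

```python
from statistics import mean

def levene_statistic(groups):
    """Levene's W: a one-way ANOVA F computed on absolute deviations
    of each observation from its own group mean."""
    deviations = [[abs(x - mean(g)) for x in g] for g in groups]
    all_dev = [d for g in deviations for d in g]
    grand = mean(all_dev)
    group_means = [mean(g) for g in deviations]
    ss_between = sum(len(g) * (m - grand) ** 2
                     for g, m in zip(deviations, group_means))
    ss_within = sum((d - m) ** 2
                    for g, m in zip(deviations, group_means) for d in g)
    df_between = len(groups) - 1
    df_within = len(all_dev) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Hypothetical data: the second group is twice as spread out as the first
w = levene_statistic([[1, 2, 3], [2, 4, 6]])
print(w)
```

Larger values of the statistic point toward unequal variances; with samples this small the check has very little power, so it is shown only to expose the mechanics.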

– Educational Research: A researcher wants to know if the test scores of students are different based on teaching methodologies: traditional, online, and blended learning. A One-Way ANOVA can help determine if the teaching method impacts student performance.

– Pharmaceutical Studies: Scientists may compare the effects of different dosages of a medication on patient recovery times in drug trials. Two-Way ANOVA can evaluate effects of dosage and patient age at once.

– Psychology Experiments: Investigators may use Repeated Measures ANOVA to determine how effective a therapy is across several sessions by assessing the anxiety levels of participants before, during, and after treatment.

To learn more about the role of post-hoc tests in these scenarios, explore Post-Hoc Testing in ANOVA.

Post-hoc tests are performed when an ANOVA finds a significant difference between the group means. These tests help determine exactly which groups differ from each other since ANOVA only reveals that at least one difference exists without indicating where that difference lies. Some of the most commonly used post-hoc methods are Tukey’s Honest Significant Difference (HSD), Scheffé’s test, and the Bonferroni correction. Each of these controls for the inflated Type I error rate associated with multiple comparisons. The choice of post-hoc test depends on variables such as sample size, homogeneity of variances, and the number of group comparisons. Proper use of post-hoc tests ensures that researchers draw accurate conclusions about group differences without inflating the likelihood of false positives.
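
As a small illustration of how a multiple-comparison correction works, the sketch below lists every pairwise comparison for a set of groups and applies the Bonferroni adjustment, dividing the overall significance level by the number of comparisons. The function name `bonferroni_plan` and the group labels are hypothetical; Tukey’s HSD and Scheffé’s test use different calculations that are not shown here.

```python
from itertools import combinations

def bonferroni_plan(group_names, alpha=0.05):
    """List every pairwise comparison and the per-test alpha
    needed to keep the familywise error rate at or below alpha."""
    pairs = list(combinations(group_names, 2))
    adjusted_alpha = alpha / len(pairs)
    return pairs, adjusted_alpha

# Hypothetical group labels, echoing the teaching-method example
pairs, adjusted_alpha = bonferroni_plan(["traditional", "online", "blended"])
for a, b in pairs:
    print(f"compare {a} vs {b} at alpha = {adjusted_alpha:.4f}")
```

Each pairwise test is then judged against the stricter threshold, which keeps the overall false-positive risk near the intended level at the cost of some power.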

The most common error in performing ANOVA is ignoring the assumption checks. ANOVA assumes normality and homogeneity of variance, and failure to test these assumptions may lead to inaccurate results. Another error is the performance of multiple t-tests instead of ANOVA when comparing more than two groups, which increases the risk of Type I errors. Researchers sometimes misinterpret ANOVA results by concluding which specific groups differ without conducting post-hoc analyses. Inadequate sample sizes or unequal group sizes can reduce the power of the test and impact its validity. Proper data preparation, assumption verification, and careful interpretation can address these issues and make ANOVA findings more reliable.

While both ANOVA and the t-test are used to compare group means, they have distinct applications and limitations:

Several software packages and programming languages can be used to perform ANOVA, each with its own features, capabilities, and suitability for different research needs and levels of expertise.

SPSS is one of the most widely used tools in academia and industry, offering a user-friendly interface together with powerful statistical computation. It supports different kinds of ANOVA: one-way, two-way, repeated measures, and factorial ANOVA. SPSS automates much of the process from assumption checks, such as homogeneity of variance, to conducting post-hoc tests, making it an excellent choice for users who have little programming experience. It also provides comprehensive output tables and graphs that simplify the interpretation of results.

R is the open-source programming language of choice for many in the statistical community. It is flexible and widely used. Its rich libraries, such as stats (with the aov() function) and car for more advanced analyses, are well suited to intricate ANOVA tests. Although it requires some programming knowledge, R provides much stronger facilities for data manipulation, visualization, and tailoring an analysis. Researchers can adapt an ANOVA to a specific study and align it with other statistical or machine learning workflows. Additionally, R’s active community and abundant online resources provide valuable support.

Microsoft Excel offers the most basic form of ANOVA through its Data Analysis ToolPak add-in. It is suited to very simple one-way and two-way ANOVA tests and gives users without dedicated statistical software an option. However, Excel lacks the power to handle more complex designs or large datasets, and advanced features for post-hoc testing are not available. Hence, the tool is better suited to simple exploratory analysis or teaching than to elaborate research work.

Python is gaining popularity for statistical analysis, especially in areas related to data science and machine learning. Several libraries provide robust and convenient functions for conducting ANOVA. For instance, Python’s SciPy offers one-way ANOVA through the f_oneway() function, while Statsmodels supports more complex designs, including repeated measures and factorial ANOVA. Integration with data processing and visualization libraries like Pandas and Matplotlib lets Python cover a workflow from data analysis through to presentation.
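
A one-way ANOVA with SciPy’s f_oneway() might look like the sketch below. It assumes SciPy is installed, and the three small groups are made-up numbers used only to show the call and how to read the result.

```python
from scipy.stats import f_oneway

# Hypothetical measurements for three groups (illustrative only)
group_a = [1, 2, 3]
group_b = [2, 3, 4]
group_c = [5, 6, 7]

result = f_oneway(group_a, group_b, group_c)
print(f"F = {result.statistic:.2f}, p = {result.pvalue:.4f}")

if result.pvalue < 0.05:
    print("At least one group mean differs; a post-hoc test can show which.")
```

The function returns only the omnibus F statistic and its p-value, so identifying which groups differ still requires a separate post-hoc step.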

JMP and Minitab are specialized statistical software packages intended for advanced data analysis and visualization. JMP, a product of SAS, is user-friendly for exploratory data analysis, ANOVA, and post-hoc testing. Its dynamic visualization tools also help users understand complex relationships within the data. Minitab is well known for its wide range of statistical procedures, user-friendly design, and excellent graphical output. These tools are very valuable for quality control and experimental design in industrial and research environments.

Choosing among these tools depends on considerations such as the complexity of the research design, the size of the dataset, the need for advanced post-hoc analyses, and the technical proficiency of the user. Simple analyses may work adequately in Excel or SPSS; complex or large-scale research may be better served by R or Python for maximum flexibility and power.

To perform an ANOVA test in Microsoft Excel, you need to use the Data Analysis ToolPak. Follow these steps to ensure accurate results:

Excel’s built-in ANOVA tool does not automatically perform post-hoc tests (like Tukey’s HSD). If ANOVA results indicate significance, you may need to conduct pairwise comparisons manually or use additional statistical software.

Conclusion

ANOVA stands out as an essential tool in statistical analysis, offering robust techniques to evaluate complex data. By understanding and applying ANOVA, researchers can make informed decisions and derive meaningful conclusions from their studies. Whether working with various treatments, educational approaches, or behavioral interventions, ANOVA provides the foundation upon which sound statistical analysis is built. The advantages it offers significantly enhance the ability to study and understand variations in data, ultimately leading to more informed decisions in research and beyond. While both ANOVA and t-tests are critical methods for comparing means, recognizing their differences and applications allows researchers to choose the most appropriate statistical technique for their studies, ensuring the accuracy and reliability of their findings.

The analysis of variance is a powerful tool, but presenting its results can often be complex. Mind the Graph simplifies this process with customizable templates for charts, graphs, and infographics. Whether showcasing variability, group differences, or post-hoc results, our platform ensures clarity and engagement in your presentations. Start transforming your ANOVA results into compelling visuals today.

Mind the Graph serves as a powerful tool for researchers who want to present their statistical findings in a clear, visually appealing, and easily interpretable way, facilitating better communication of complex data.

A comparison study is a vital tool in research, helping us analyze differences and similarities to uncover meaningful insights. This article delves into how comparison studies are designed, their applications, and their importance in scientific and practical explorations.

Comparison is how our brains are trained to learn. From childhood we train ourselves to differentiate between items, colours, people, and situations, and we learn by comparing. Comparing gives us a perspective on characteristics: it lets us see the presence or absence of particular features in a product or a process. Isn’t that true? Comparison also leads us to decide that one thing is better than another, which builds our judgement. In personal life, comparison can lead to judgements that affect our belief systems, but in scientific research comparison is a fundamental principle for revealing truths.

The scientific community compares samples, ecosystems, and the effects of medicines, and the effect of every factor is compared against a control. That is how we reach conclusions. In this blog post, we invite you to learn how to design a comparative study and to understand the subtleties and applications of the method in day-to-day scientific exploration.

Comparison studies are critical for evaluating relationships between exposures and outcomes, offering various methodologies tailored to specific research goals. They can be broadly categorized into several types, including descriptive vs. analytical studies, case-control studies, and longitudinal vs. cross-sectional comparisons. Each type of comparative inquiry has unique characteristics, advantages, and limitations.

A case-control study is a type of observational study that compares individuals with a specific condition (cases) to those without the condition (controls). This design is particularly useful for studying rare diseases or outcomes for patients.

Read more about case-control studies here!

| Type of Study | Description | Advantages | Disadvantages |
| --- | --- | --- | --- |
| Descriptive | Describes characteristics without causal inference | Simple and quick data collection | Limited in establishing relationships |
| Analytical | Tests hypotheses about relationships | Can identify associations | May require more resources |
| Case-Control | Compares cases with controls retrospectively | Efficient for rare diseases | Prone to biases; cannot establish causality |
| Longitudinal | Observes subjects over time | Can assess changes and causal relationships | Time-consuming and expensive |
| Cross-Sectional | Measures variables at one point in time | Quick and provides a snapshot | Cannot determine causality |

Conducting a comparison study requires a structured approach to analyze variables systematically, ensuring reliable and valid results. This process can be broken down into several key steps: formulating the research question, identifying variables and controls, selecting case studies or samples, and data collection and analysis. Each step is crucial for ensuring the validity and reliability of the study’s findings.

The first step in any comparative study is to clearly define the research question. This question should articulate what you aim to discover or understand through your analysis.

Read our blog for more insights on research questions!

Once the research question is established, the next step is to identify the variables involved in the study.

The selection of appropriate case studies or samples is critical for obtaining valid results.

Researchers conducting comparative studies usually face a crucial decision: will they adopt qualitative methods, quantitative methods, or a combination of both?

Qualitative Comparative Methods focus on understanding phenomena through detailed and contextual analysis.

These methods incorporate non-numerical data, including interviews, case studies, or ethnographies. They look into patterns, themes, and narratives to extract relevant insights. For example, health care systems can be compared based on qualitative interviews with medical professionals about patients’ care experiences. This can help uncover the “why” and “how” behind observed differences and provides rich, detailed information.

The other is Quantitative Comparative Methods, which rely on measurable, numerical data. This type of analysis uses statistical analysis to determine trends, correlations, or causal relationships between variables. Researchers may use surveys, census data, or experimental results to make objective comparisons. For example, when comparing educational outcomes between nations, standardized test scores and graduation rates are usually used. Quantitative methods give clear, replicable results that are often generalizable to larger populations, making them essential for studies that require empirical validation.

Both approaches have merits and demerits. Although qualitative research is deep and rich in context, quantitative approaches offer breadth and precision. Usually, researchers make this choice based on the aims and scope of their particular study.

The mixed-methods approach combines both qualitative and quantitative techniques in a single study, giving an integral view of the research problem. This approach capitalizes on the merits of both approaches while minimizing the respective limitations of each. In a mixed-methods design, the researcher may collect primary quantitative data to identify more general patterns and then use qualitative interviews to shed more light on those same patterns. For instance, a study on the effectiveness of a new environmental policy may begin with statistical trends and analysis of pollution levels. Then, through interviews conducted with policymakers and industry stakeholders, the researcher explores the challenges of implementing the policy.

There are several kinds of mixed-methods designs, such as:

The mixed-methods approach makes comparative studies more robust by providing a more nuanced understanding of complex phenomena, making it especially useful in multidisciplinary research.

Effective comparative research relies on various tools and techniques to collect, analyze, and interpret data. These tools can be broadly categorized based on their application:

Statistical Packages: SPSS, R, and SAS can be used to run analyses on quantitative data, such as regression analysis, ANOVA, or correlation studies.

Qualitative Analysis Software: NVivo and ATLAS.ti are widely used for coding and analyzing qualitative data, helping to find trends and themes.

Comparative Case Analysis (CCA): This technique systematically compares cases to identify similarities and differences, often used in political science and sociology.

Graphs and Charts: Visual representations of quantitative data make it easier to compare results across different groups or regions.

Mapping Software: Geographic Information Systems (GIS) are useful in the analysis of spatial data and, therefore, are of particular utility in environmental and policy studies.

By combining the right tools and techniques, researchers can increase the accuracy and depth of their comparative analysis so that the findings are reliable and insightful.

Ensuring validity and reliability is crucial in a comparison study, as these elements directly impact the credibility and reproducibility of results. Validity refers to the degree to which the study actually measures what it purports to measure, whereas reliability deals with the consistency and reproducibility of results. When dealing with varying datasets, research contexts, or different participant groups, the challenge is to maintain both. To ensure validity, researchers have to carefully design their study frameworks and choose proper indicators that truly reflect the variables of interest. For instance, when comparing educational outcomes between countries, using standardized metrics like PISA scores improves validity.

Reliability can be enhanced through the use of consistent methodologies and well-defined protocols for all comparison points. Pilot testing of surveys or interview guides helps identify and correct inconsistencies before full-scale data collection. Moreover, it is important that researchers document their procedures in such a way that the study can be replicated under similar conditions. Peer review and cross-validation with existing studies also enhance the strength of both validity and reliability.

Comparative studies, particularly those that span regions or countries, are susceptible to cultural and contextual biases. Such biases occur when researchers bring their own cultural lenses, which may affect the analysis of data in diverse contexts. To overcome this, it is necessary to apply a culturally sensitive approach. Researchers should be educated on the social, political, and historical contexts of the locations involved in the study. Collaboration with local experts or researchers brings genuine insight and helps interpret the findings within the relevant cultural framework.

Language barriers also pose a risk for bias, particularly in qualitative studies. Translating surveys or interview transcripts may lead to subtle shifts in meaning. Therefore, employing professional translators and conducting back-translation—where the translated material is translated back to the original language—ensures that the original meaning is preserved. Additionally, acknowledging cultural nuances in research reports helps readers understand the context, fostering transparency and trust in the findings.

Comparative research often involves large datasets, which pose significant challenges, especially in cross-national or longitudinal studies. Big data often brings problems of inconsistency, missing values, and difficulties in integration. Investing in robust data management practices helps address these challenges. Tools such as SQL for database management and Python or R for data analysis make data processing tasks much more manageable.

Data cleaning is also a very important step. Researchers must systematically check the data for errors, outliers, and inconsistencies. Automating the cleaning process can save considerable time and reduce the chance of human error. Data security and ethical considerations, such as anonymizing personal information, also become more important as datasets grow.
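As a rough illustration of the cleaning steps described above, the following Python sketch flags missing values, detects outliers with the interquartile range rule, and replaces a personal identifier with a salted hash (strictly speaking, pseudonymization rather than full anonymization). The record layout, the field names (`participant_id`, `country`, `score`), the salt, and the 1.5 × IQR threshold are all assumptions chosen for this example, not a prescribed method.

```python
import hashlib
import statistics

# Hypothetical survey records; field names and values are illustrative only.
records = [
    {"participant_id": "A001", "country": "BR", "score": 72.0},
    {"participant_id": "A002", "country": "BR", "score": None},   # missing value
    {"participant_id": "A003", "country": "DE", "score": 68.5},
    {"participant_id": "A004", "country": "DE", "score": 70.0},
    {"participant_id": "A005", "country": "JP", "score": 71.0},
    {"participant_id": "A006", "country": "JP", "score": 250.0},  # likely entry error
    {"participant_id": "A007", "country": "IN", "score": 69.0},
    {"participant_id": "A008", "country": "IN", "score": 73.5},
]

def find_missing(rows, field):
    """Return the rows where `field` is absent or None."""
    return [r for r in rows if r.get(field) is None]

def find_outliers(rows, field, k=1.5):
    """Return rows whose `field` lies outside [Q1 - k*IQR, Q3 + k*IQR]."""
    values = [r[field] for r in rows if r.get(field) is not None]
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [
        r for r in rows
        if r.get(field) is not None and not (low <= r[field] <= high)
    ]

def pseudonymize(rows, field, salt):
    """Return copies of the rows with `field` replaced by a salted SHA-256 digest."""
    cleaned = []
    for r in rows:
        digest = hashlib.sha256((salt + r[field]).encode("utf-8")).hexdigest()
        cleaned.append({**r, field: digest[:12]})  # original rows are not modified
    return cleaned

missing = find_missing(records, "score")
outliers = find_outliers(records, "score")
safe_records = pseudonymize(records, "participant_id", salt="example-salt")

print("Missing:", [r["participant_id"] for r in missing])
print("Outliers:", [r["participant_id"] for r in outliers])
```

Flagged rows are only reported here, not deleted; whether to correct, impute, or exclude them is a judgment call that should be documented alongside the study's other procedures.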

Effective visualization tools, such as Mind the Graph or Tableau, can also make complex data easier to understand by helping to identify patterns and communicate results. Managing large datasets requires advanced tools, meticulous planning, and a clear understanding of the data’s structure in order to ensure the integrity and accuracy of comparative research.

In conclusion, comparative studies are an essential part of scientific research, providing a structured approach to understanding relationships between variables and drawing meaningful conclusions. By systematically comparing different subjects, researchers can uncover insights that inform practices across various fields, from healthcare to education and beyond. The process begins with formulating a clear research question that guides the study’s objectives. Comparability and reliability depend on careful control of the variables being compared. Choosing appropriate cases or samples is equally important, and sound data collection and analysis techniques are needed to produce accurate results; without them, the findings are weak. Both qualitative and quantitative methods are viable, and each offers distinct advantages for studying complex issues.

However, challenges such as ensuring validity and reliability, overcoming cultural biases, and managing large datasets must be addressed to maintain the integrity of the research. Ultimately, by embracing the principles of comparative analysis and employing rigorous methodologies, researchers can contribute significantly to knowledge advancement and evidence-based decision-making in their respective fields. This blog post serves as a guide for those designing and conducting comparative studies, highlighting the importance of careful planning and execution in achieving impactful results.

Representing findings from a comparison study can be complex. Mind the Graph offers customizable templates for creating visually compelling infographics, charts, and diagrams, making your research clear and impactful. Explore our platform today to take your comparison studies to the next level.

Acronyms in research play a pivotal role in simplifying communication, streamlining complex terms, and enhancing efficiency across disciplines. This article explores how acronyms in research improve clarity, their benefits, challenges, and guidelines for effective use.

By condensing lengthy phrases or technical jargon into shorter, easily recognizable abbreviations, acronyms save space in academic papers and presentations while making information more accessible to readers. For example, terms like “polymerase chain reaction” are commonly shortened to PCR, allowing researchers to quickly reference key methods or concepts without repeating detailed terminology.

Acronyms also promote clarity by standardizing language across disciplines, helping researchers communicate complex ideas more concisely. However, overuse or undefined acronyms can lead to confusion, making it crucial for authors to define them clearly when introducing new terms in their work. Overall, acronyms enhance the clarity and efficiency of scientific communication when used appropriately.

Acronyms help standardize language across disciplines, fostering clearer communication among global research communities. By using commonly accepted abbreviations, researchers can efficiently convey ideas without lengthy explanations. However, it’s essential to balance the use of acronyms with clarity—unfamiliar or excessive acronyms can create confusion if not properly defined.

In the context of research, acronyms condense technical or lengthy terms into single, recognizable words, serving as shorthand that makes communication more efficient. They are commonly used across many fields, including research, where they simplify the discussion of technical concepts, methods, and organizations.

For example, NASA stands for “National Aeronautics and Space Administration.” Acronyms differ from initialisms in that they are pronounced as a word, while initialisms (like FBI or DNA) are pronounced letter by letter.

Examples of acronyms and initialisms in research, such as DNA (Deoxyribonucleic Acid) in genetics or AI (Artificial Intelligence) in technology, highlight their versatility and necessity in scientific communication. You can check more examples below:

Acronyms help researchers communicate efficiently, but it’s essential to define them at first use to ensure clarity for readers unfamiliar with specific terms.

The use of acronyms in research offers numerous advantages, from saving space and time to improving readability and fostering interdisciplinary communication. Here’s a breakdown of their key benefits:

While acronyms offer many benefits in research, they also present several challenges that can hinder effective communication. These include:

Acronyms, while useful, can sometimes lead to misunderstandings and confusion, especially when they are not clearly defined or are used in multiple contexts. Here are two key challenges:

Many acronyms are used across different fields and disciplines, often with entirely different meanings. For example:

These overlaps can confuse readers or listeners who are unfamiliar with the specific field in which the acronym is being used. Without proper context or definition, an acronym can lead to misinterpretation, potentially altering the understanding of critical information.

Acronyms can change meaning depending on the context in which they are used, making them highly reliant on clear communication. For instance:

The same acronym can have entirely different interpretations, depending on the research area or conversation topic, leading to potential confusion. This issue becomes particularly pronounced in interdisciplinary work, where multiple fields may converge, each using the same acronym differently.

While acronyms can streamline communication, their overuse can actually have the opposite effect, making content harder to understand and less accessible. Here’s why:

When too many acronyms are used in a single piece of writing, especially without adequate explanation, it can make the content overwhelming and confusing. Readers may struggle to keep track of all the abbreviations, leading to cognitive overload. For example, a research paper filled with technical acronyms like RNN, SVM, and CNN (common in machine learning) can make it difficult for even experienced readers to follow along if these terms aren’t introduced properly or are used excessively.

This can slow down the reader’s ability to process information, as they constantly have to pause and recall the meaning of each acronym, breaking the flow of the material.

Acronyms can create a barrier for those unfamiliar with a particular field, alienating newcomers, non-experts, or interdisciplinary collaborators. When acronyms are assumed to be widely understood but are not clearly defined, they can exclude readers who might otherwise benefit from the information. For instance, acronyms like ELISA (enzyme-linked immunosorbent assay) or HPLC (high-performance liquid chromatography) are well-known in life sciences, but could confuse those outside that domain.

Overusing acronyms can thus make research feel inaccessible, deterring a broader audience and limiting engagement with the content.

Understanding how acronyms are utilized in various research fields can illustrate their importance and practicality. Here are a few examples from different disciplines:

Effective use of acronyms in research requires best practices that balance clarity and brevity, ensuring accessibility for all readers. Here are some key guidelines for the effective use of acronyms in research and communication:

After the initial definition, you can freely use the acronym throughout the rest of the document.
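One way to check the define-at-first-use guideline automatically is sketched below. It is a simple heuristic, assuming that an acronym is any run of two or more capital letters and that a definition looks like "full term (ACRONYM)"; the function name `undefined_acronyms` and the sample sentence are made up for this illustration.

```python
import re

# Heuristic: treat any standalone run of 2+ capital letters as an acronym.
ACRONYM = re.compile(r"\b[A-Z]{2,}\b")

def undefined_acronyms(text):
    """Return acronyms whose first occurrence is not wrapped in parentheses."""
    first_seen = {}
    for match in ACRONYM.finditer(text):
        # Remember only the position of the first occurrence of each acronym.
        first_seen.setdefault(match.group(), match.start())

    flagged = []
    for acronym, pos in first_seen.items():
        end = pos + len(acronym)
        opens = text[max(pos - 1, 0):pos] == "("
        closes = text[end:end + 1] == ")"
        if not (opens and closes):
            flagged.append(acronym)
    return flagged

sample = (
    "We amplified the samples using polymerase chain reaction (PCR). "
    "PCR products were then analyzed by HPLC."
)
print(undefined_acronyms(sample))  # ['HPLC']
```

A check like this will produce false positives (for example, gene names or Roman numerals), so it works best as a prompt for a manual review rather than a strict rule.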

Mind the Graph streamlines the process of creating scientifically accurate infographics, empowering researchers to communicate their findings effectively. By combining an easy-to-use interface with a wealth of resources, Mind the Graph transforms complex scientific information into engaging visuals, helping to enhance understanding and promote collaboration in the scientific community.

Understanding the difference between incidence and prevalence is crucial for tracking disease spread and planning effective public health strategies. This guide clarifies the key differences between incidence and prevalence, offering insights into their significance in epidemiology. Incidence measures the occurrence of new cases over a specified period, while prevalence gives a snapshot of all existing cases at a particular moment. Clarifying the distinction between these terms will deepen your understanding of how they influence public health strategies and guide critical healthcare decisions.

Incidence and prevalence are essential epidemiological metrics, providing insights into disease frequency and guiding public health interventions. While both give valuable information about the health of a population, they are used to answer different questions and are calculated in distinct ways. Understanding the difference between them helps in analyzing disease trends and planning effective public health interventions.

Incidence measures the occurrence of new cases within a population over a specific period, highlighting the risk and speed of disease transmission. Because it captures how frequently new cases arise, it indicates the risk of contracting the disease within a certain timeframe.

Incidence helps in understanding how quickly a disease is spreading and identifying emerging health threats. It is especially useful for studying infectious diseases or conditions with a rapid onset.

Calculating Incidence:

The formula for incidence is straightforward:

$$\text{Incidence Rate} = \frac{\text{Number of new cases in a time period}}{\text{Population at risk during the same period}}$$

Elements:

New cases: Only the cases that develop during the specified time period.

Population at risk: The group of individuals who are disease-free at the start of the time period but are susceptible to the disease.

For example, if there are 200 new cases of a disease in a population of 10,000 over the course of a year, the incidence rate would be:

$$\frac{200}{10{,}000} = 0.02 \text{ or } 2\%$$

This indicates that 2% of the population developed the disease during that year.
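The same calculation can be written as a small Python function. This is a minimal sketch: the function name `incidence_rate` and the input checks are assumptions added for illustration, and the example reuses the figures from the text.

```python
def incidence_rate(new_cases, population_at_risk):
    """Proportion of the at-risk population that became new cases in the period."""
    if population_at_risk <= 0:
        raise ValueError("population_at_risk must be positive")
    if not 0 <= new_cases <= population_at_risk:
        raise ValueError("new_cases must be between 0 and population_at_risk")
    return new_cases / population_at_risk

# 200 new cases among 10,000 people at risk over one year.
rate = incidence_rate(new_cases=200, population_at_risk=10_000)
print(f"{rate:.2%} per year")
print(f"{rate * 1_000:.0f} new cases per 1,000 people per year")
```

Expressing the result per 1,000 or per 100,000 people is a common convention when the raw proportion is small.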

Prevalence refers to the total number of cases of a particular disease or condition, both new and pre-existing, in a population at a specific point in time (or over a period). Unlike incidence, which measures the rate of new cases, prevalence captures the overall burden of a disease in a population, including people who have been living with the condition for some time and those who have just developed it.

Prevalence is often expressed as a proportion of the population, providing a snapshot of how widespread a disease is. It helps in assessing the extent of chronic conditions and other long-lasting health issues, allowing healthcare systems to allocate resources effectively and plan long-term care.

Calculating Prevalence:

The formula for calculating prevalence is:

$$\text{Prevalence} = \frac{\text{Total number of cases (new + existing)}}{\text{Total population at the same time}}$$

Elements:

Total number of cases: This includes everyone in the population who has the disease or condition at a specified point in time, both new and previously diagnosed cases.

Total population: The entire group of people being studied, including both those with and without the disease.

For example, if 300 people in a population of 5,000 have a certain disease, the prevalence would be:

$$\frac{300}{5{,}000} = 0.06 \text{ or } 6\%$$

This means that 6% of the population is currently affected by the disease.

Prevalence can be further classified into:

Point Prevalence: The proportion of a population affected by the disease at a single point in time.

Period Prevalence: The proportion of a population affected during a specified period, such as over a year.
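The difference between the two can be seen by counting cases against dates. The sketch below uses made-up case records (onset and recovery dates) and an assumed fixed population of 1,000; the helper names are hypothetical.

```python
from datetime import date

# Hypothetical case records: (onset date, recovery date or None if still ill).
cases = [
    (date(2023, 11, 10), None),
    (date(2024, 1, 15), date(2024, 3, 1)),
    (date(2024, 5, 20), None),
    (date(2024, 8, 5), date(2024, 9, 30)),
    (date(2023, 2, 1), date(2023, 12, 20)),
]
POPULATION = 1_000  # assumed constant over the period, for simplicity

def is_active(onset, recovery, start, end):
    """True if the case overlaps the interval [start, end]."""
    return onset <= end and (recovery is None or recovery >= start)

def point_prevalence(case_list, population, on_day):
    """Share of the population with the disease on a single day."""
    active = sum(is_active(o, r, on_day, on_day) for o, r in case_list)
    return active / population

def period_prevalence(case_list, population, start, end):
    """Share of the population with the disease at any time in [start, end]."""
    active = sum(is_active(o, r, start, end) for o, r in case_list)
    return active / population

point = point_prevalence(cases, POPULATION, date(2024, 6, 30))
period = period_prevalence(cases, POPULATION, date(2024, 1, 1), date(2024, 12, 31))
print(f"Point prevalence on 30 June 2024: {point:.1%}")
print(f"Period prevalence for 2024: {period:.1%}")
```

Period prevalence is never lower than point prevalence for a day inside the same period, because it also counts cases that began or ended at other times within the window.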

Prevalence is particularly useful for understanding chronic conditions, such as diabetes or heart disease, where people live with the disease for long periods, and healthcare systems need to manage both current and ongoing cases.

While both incidence and prevalence are essential for understanding disease patterns, they measure different aspects of disease frequency. The key differences between these two metrics lie in the timeframe they reference and how they are applied in public health and research.

Incidence:

Incidence measures the number of new cases of a disease that occur within a specific population over a defined period of time (e.g., a month, a year). This means incidence is always linked to a timeframe that reflects the rate of occurrence of new cases. It shows how quickly a disease is spreading or the risk of developing a condition within a set period.

The focus is on identifying the onset of disease. Tracking new cases allows incidence to offer insight into the speed of disease transmission, which is crucial for studying outbreaks, evaluating prevention programs, and understanding the risk of contracting the disease.

Prevalence:

Prevalence, on the other hand, measures the total number of cases (both new and existing) in a population at a specific point in time or over a specified period. It gives a snapshot of how widespread a disease is, offering a picture of the disease’s overall impact on a population at a given moment.

Prevalence accounts for both the duration and the accumulation of cases, meaning that it reflects how many people are living with the condition. It is useful for understanding the overall burden of a disease, especially for chronic or long-lasting conditions.

Incidence:

Incidence is commonly used in public health and epidemiological research to study the risk factors and causes of diseases. It helps in determining how a disease develops and how fast it is spreading, which is essential for:

Incidence data helps prioritize health resources for controlling emerging diseases and can inform strategies for reducing transmission.

Prevalence:

Prevalence is widely used in health policy, planning, and resource allocation to understand the overall burden of diseases, especially chronic conditions. It is particularly valuable for:

Prevalence data supports policymakers in prioritizing healthcare services based on the total population affected, ensuring sufficient medical care and resources for both current and future patients.

Incidence measures the number of new cases of a disease occurring within a specific time frame, making it valuable for understanding disease risk and the rate of spread. Prevalence quantifies the total number of cases at a particular point in time, providing insight into the overall burden of disease and aiding long-term healthcare planning. Together, incidence and prevalence offer complementary insights that create a more comprehensive understanding of a population’s health status, enabling public health officials to address both immediate and ongoing health challenges effectively.

A real-world example of incidence in action can be observed during an outbreak of bird flu (avian influenza) on a poultry farm. Public health officials may track the number of new bird flu cases reported among flocks each week during an outbreak. For instance, if a poultry farm with 5,000 birds reports 200 new cases of bird flu within a month, the incidence rate would be calculated to determine how quickly the virus is spreading within that population. This information is critical for health authorities to implement control measures, such as culling infected birds, enforcing quarantines, and educating farmworkers about biosecurity practices to prevent further transmission of the disease. For more information on bird flu, you can access this resource: Bird Flu Overview.

Another example of incidence in action can be seen during an outbreak of swine flu (H1N1 influenza) in a community. Public health officials may monitor the number of new cases of swine flu reported among residents each week during the flu season. For instance, if a city with a population of 100,000 reports 300 new cases of swine flu in a single month, the incidence rate would be calculated to determine how quickly the virus is spreading within that population. This information is crucial for health authorities to implement timely public health measures, such as launching vaccination campaigns, advising residents to practice good hygiene, and promoting awareness about symptoms to encourage early detection and treatment of the illness. Tracking the incidence helps guide interventions that can ultimately reduce transmission and protect the community’s health. For further insights into swine flu, you can visit this link: Swine Flu Overview.
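Carrying the arithmetic through for the two outbreak scenarios above, using the same incidence formula and treating the whole flock or city as the population at risk (a simplifying assumption), gives:

$$\text{Bird flu: } \frac{200}{5{,}000} = 0.04 = 4\% \text{ per month}$$

$$\text{Swine flu: } \frac{300}{100{,}000} = 0.003 = 0.3\% \text{ per month (300 per 100,000)}$$

Although the city reports more cases in absolute terms, the farm’s incidence is more than ten times higher relative to its population.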

An example of prevalence in action can be observed in the context of diabetes management. Health researchers might conduct a survey to assess the total number of individuals living with diabetes in a city of 50,000 residents at a given point in time. If they find that 4,500 residents have diabetes, the prevalence would be calculated to show that 9% of the population is affected by this chronic condition. This prevalence data is crucial for city planners and healthcare providers as it helps them allocate resources for diabetes education programs, management clinics, and support services to address the needs of the affected population effectively.

A similar application of prevalence can be seen during the COVID-19 pandemic, where understanding the number of active cases at a specific time was essential for public health planning. For more insights into how prevalence data was utilized during this time, access this example from the Public Health Agency of Northern Ireland: Prevalence Data in Action During COVID-19.

Incidence and prevalence are important for tracking disease trends and outbreaks in populations. Measuring incidence helps public health officials identify new cases of a disease over time, essential for detecting outbreaks early and understanding the dynamics of disease transmission.

For instance, a sudden increase in incidence rates of a communicable disease, such as measles, can trigger an immediate response that includes implementing vaccination campaigns and public health interventions. In contrast, prevalence provides insights into how widespread a disease is at a specific moment, allowing health authorities to monitor long-term trends and assess the burden of chronic diseases like diabetes or hypertension. Analyzing both metrics enables health officials to identify patterns, evaluate the effectiveness of interventions, and adapt strategies to control diseases effectively.

The measurement of incidence and prevalence is vital for effective resource allocation in public health. Understanding the incidence of a disease allows health authorities to prioritize resources for prevention and control efforts, such as targeting vaccinations or health education campaigns in areas experiencing high rates of new infections. Conversely, prevalence data assists public health officials in allocating resources for managing ongoing healthcare needs.

For example, high prevalence rates for mental health disorders in a community may prompt local health systems to increase funding for mental health services, such as counseling or support programs. Overall, these measures enable policymakers and healthcare providers to make informed decisions regarding where to direct funding, personnel, and other resources to address the most pressing health issues effectively, ensuring that communities receive the support they need.

The Mind the Graph platform empowers scientists to create scientifically accurate infographics in just minutes. Designed with researchers in mind, it offers a user-friendly interface that simplifies the process of visualizing complex data and ideas. With a vast library of customizable templates and graphics, Mind the Graph enables scientists to effectively communicate their research findings, making them more accessible to a broader audience.

In today’s fast-paced academic environment, time is of the essence, and the ability to produce high-quality visuals quickly can significantly enhance the impact of a scientist’s work. The platform not only saves time but also helps improve the clarity of presentations, posters, and publications. Whether for a conference, journal submission, or educational purposes, Mind the Graph facilitates the transformation of intricate scientific concepts into engaging visuals that resonate with both peers and the general public.

Mitigating the placebo effect is a critical aspect of clinical trials and treatment protocols, ensuring more accurate and reliable research outcomes. The placebo effect can significantly influence patient outcomes and skew research results, leading to misleading conclusions about the efficacy of new interventions. By recognizing the psychological and physiological mechanisms behind it, researchers and clinicians can implement effective strategies to minimize its impact.

This guide provides practical insights and evidence-based approaches to help in mitigating the placebo effect, ensuring more accurate and reliable outcomes in both clinical research and patient care.

Mitigating the placebo effect starts with understanding its mechanisms, which cause perceived or actual improvements due to psychological and contextual factors rather than active treatment. This response can be triggered by various factors, including the patient’s expectations, the physician’s behavior, and the context in which the treatment is administered.

The placebo effect is a psychological phenomenon wherein a patient experiences a perceived or actual improvement in their condition after receiving a treatment that is inert or has no therapeutic value. This effect is not due to the treatment itself but rather arises from the patient’s beliefs, expectations, and the context in which the treatment is administered. Placebos can take various forms, including sugar pills, saline injections, or even sham surgeries, but they all share the characteristic of lacking an active therapeutic component.

The placebo effect operates through several interconnected mechanisms that influence patient outcomes:

The placebo effect can lead to significant changes in patient outcomes, including:

The placebo effect plays a critical role in the design and interpretation of clinical trials. Researchers often use placebo-controlled trials to establish the efficacy of new treatments. By comparing the effects of an active intervention with those of a placebo, researchers can determine whether the observed benefits are due to the treatment itself or the psychological and physiological responses associated with the placebo effect.

The placebo effect has significant implications for the evaluation of treatments in clinical practice. Its influence extends beyond clinical trials, affecting how healthcare providers assess the efficacy of interventions and make treatment decisions.

Mitigating the placebo effect is essential for ensuring that clinical trials and treatment evaluations yield accurate and reliable results. Here are several strategies that researchers and clinicians can employ to minimize the impact of the placebo effect:

Effective trial design is critical for minimizing the placebo effect and ensuring that clinical trials yield valid and reliable results. Two fundamental components of trial design are the use of control groups and the implementation of blinding techniques.

Control groups serve as a baseline for comparison, allowing researchers to assess the true effects of an intervention while accounting for the placebo effect.

Blinding techniques are critical for reducing bias and ensuring the integrity of clinical trials.
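To make the two ideas concrete, here is a minimal Python sketch of random allocation to a treatment arm and a placebo control arm, with a blinded view that shows only neutral kit numbers. The function name `allocate`, the participant IDs, the kit-number format, and the fixed seed are all assumptions for illustration; real trials rely on validated randomization systems and documented procedures.

```python
import random

def allocate(participant_ids, seed):
    """Randomly split participants into two arms and issue blinded kit numbers."""
    rng = random.Random(seed)  # seeded so the allocation can be reproduced and audited
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = len(ids) // 2

    # Unblinded key: held by an independent party, not by investigators or patients.
    unblinded_key = {
        pid: ("treatment" if i < half else "placebo") for i, pid in enumerate(ids)
    }

    # Blinded view: a unique kit number per participant that reveals nothing about the arm.
    kit_numbers = rng.sample(range(1000, 10000), len(ids))
    blinded_view = {pid: f"KIT-{n}" for pid, n in zip(ids, kit_numbers)}
    return unblinded_key, blinded_view

participants = [f"P{i:03d}" for i in range(1, 21)]
unblinded_key, blinded_view = allocate(participants, seed=42)

arms = list(unblinded_key.values())
print("Treatment:", arms.count("treatment"), "Placebo:", arms.count("placebo"))
```

Because staff and participants see only the kit numbers, neither group can infer who is receiving the active treatment, which is the essence of a double-blind design.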

Effective communication with patients is essential for managing their expectations and understanding the treatment process. Clear and open dialogue can help mitigate the placebo effect and foster a trusting relationship between healthcare providers and patients.

Mitigating the placebo effect plays a vital role in enhancing healthcare outcomes and ensuring accurate evaluation of new treatments in clinical settings. By applying strategies to manage the placebo response, healthcare providers can enhance treatment outcomes, improve patient satisfaction, and conduct more reliable clinical research.

Understanding the strategies used to mitigate the placebo effect in clinical research can provide valuable insights for future studies and healthcare practices. Here, we highlight a specific clinical trial example and discuss the lessons learned from past research.

Study: The Vioxx Clinical Trial (2000)

FDA Vioxx Questions and Answers

To mitigate the placebo effect and enhance patient outcomes, healthcare providers can adopt practical strategies and ensure thorough training for medical staff.

Mind the Graph empowers scientists to effectively communicate their research through engaging and informative visuals. With its user-friendly interface, customization options, collaboration features, and access to science-specific resources, the platform equips researchers with the tools they need to create high-quality graphics that enhance understanding and engagement in the scientific community.

Correlational research is a vital method for identifying and measuring relationships between variables in their natural settings, offering valuable insights for science and decision-making. This article explores correlational research, its methods, applications, and how it helps uncover patterns that drive scientific progress.

Correlational research differs from other forms of research, such as experimental research, in that it does not involve the manipulation of variables or establish causality, but it helps reveal patterns that can be useful for making predictions and generating hypotheses for further study. Examining the direction and strength of associations between variables, correlational research offers valuable insights in fields such as psychology, medicine, education, and business.

As a cornerstone of non-experimental methods, correlational research examines relationships between variables without manipulation, emphasizing real-world insights. The primary goal is to determine if a relationship exists between variables and, if so, the strength and direction of that relationship. Researchers observe and measure these variables in their natural settings to assess how they relate to one another.

A researcher might investigate whether there is a correlation between hours of sleep and student academic performance. They would gather data on both variables (sleep and grades) and use statistical methods to see if a relationship exists between them, such as whether more sleep is associated with higher grades (a positive correlation), less sleep is associated with higher grades (a negative correlation), or if there is no significant relationship (zero correlation).

Identify Relationships Between Variables: The primary goal of correlational research is to identify relationships between variables, quantify their strength, and determine their direction, paving the way for predictions and hypotheses. Identifying these relationships allows researchers to uncover patterns and associations that may not be immediately obvious.

Make Predictions: Once relationships between variables are established, correlational research can help make informed predictions. For instance, if a positive correlation between academic performance and study time is observed, educators can predict that students who spend more time studying may perform better academically.

Generate Hypotheses for Further Research: Correlational studies often serve as a starting point for experimental research. Uncovering relationships between variables provides the foundation for generating hypotheses that can be tested in more controlled, cause-and-effect experiments.

Study Variables That Cannot Be Manipulated: Correlational research allows for the study of variables that cannot ethically or practically be manipulated. For example, a researcher may want to explore the relationship between socioeconomic status and health outcomes, but it would be unethical to manipulate someone’s income for research purposes. Correlational studies make it possible to examine these types of relationships in real-world settings.

Ethical Flexibility: Studying sensitive or complex issues where experimental manipulation is unethical or impractical becomes possible through correlational research. For example, exploring the relationship between smoking and lung disease cannot be ethically tested through experimentation but can be effectively examined using correlational methods.

Broad Applicability: This type of research is widely used across different disciplines, including psychology, education, health sciences, economics, and sociology. Its flexibility allows it to be applied in diverse settings, from understanding consumer behavior in marketing to exploring social trends in sociology.

Insight into Complex Variables: Correlational research enables the study of complex and interconnected variables, offering a broader understanding of how factors like lifestyle, education, genetics, or environmental conditions are related to certain outcomes. It provides a foundation for seeing how variables may influence one another in the real world.

Foundation for Further Research: Correlational studies often spark further scientific inquiry. While they cannot prove causality, they highlight relationships worth exploring. Researchers can use these studies to design more controlled experiments or delve into deeper qualitative research to better understand the mechanisms behind the observed relationships.

No Manipulation of Variables

One key difference between correlational research and other types, such as experimental research, is that in correlational research, the variables are not manipulated. In experiments, the researcher introduces changes to one variable (the independent variable) to see its effect on another (the dependent variable), which makes it possible to establish a cause-and-effect relationship. In contrast, correlational research only measures the variables as they naturally occur, without interference from the researcher.

Causality vs. Association

While experimental research aims to determine causality, correlational research does not. The focus is solely on whether variables are related, not whether one causes changes in the other. For example, if a study shows that there is a correlation between eating habits and physical fitness, it doesn’t mean that eating habits cause better fitness, or vice versa; both might be influenced by other factors such as lifestyle or genetics.

Direction and Strength of Relationships

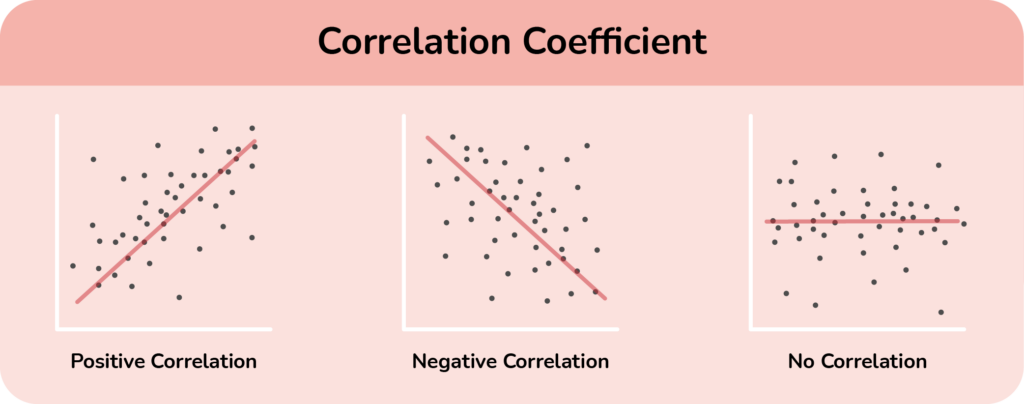

Correlational research is concerned with the direction (positive or negative) and strength of relationships between variables, which is different from experimental or descriptive research. The correlation coefficient quantifies this, with values ranging from -1 (perfect negative correlation) to +1 (perfect positive correlation). A correlation close to zero implies little to no relationship. Descriptive research, in contrast, focuses more on observing and describing characteristics without analyzing relationships between variables.
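The coefficient most often used for this purpose is Pearson’s r. The sketch below computes it from first principles in Python, using invented data for hours of sleep and exam grades; the function name `pearson_r` and the numbers are assumptions for illustration only.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations, and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: hours of sleep and exam grades for six students.
sleep_hours = [5.0, 6.0, 6.5, 7.0, 8.0, 8.5]
grades = [62, 68, 71, 75, 80, 86]

r = pearson_r(sleep_hours, grades)
direction = "positive" if r > 0 else "negative" if r < 0 else "no"
print(f"r = {r:.2f} ({direction} correlation)")
```

A value near +1 here would indicate that students who sleep more tend to have higher grades in this sample; it would not show that sleep causes the higher grades.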

Flexibility in Variables

Unlike experimental research which often requires precise control over variables, correlational research allows for more flexibility. Researchers can examine variables that cannot be ethically or practically manipulated, such as intelligence, personality traits, socioeconomic status, or health conditions. This makes correlational studies ideal for examining real-world conditions where control is impossible or undesirable.

Exploratory Nature

Correlational research is often used in the early stages of research to identify potential relationships between variables that can be explored further in experimental designs. In contrast, experiments tend to be hypothesis-driven, focusing on testing specific cause-and-effect relationships.

A positive correlation occurs when an increase in one variable is associated with an increase in another variable. Essentially, both variables move in the same direction—if one goes up, so does the other, and if one goes down, the other decreases as well.

Examples of Positive Correlation:

Height and weight: In general, taller people tend to weigh more, so these two variables show a positive correlation.

Education and income: Higher levels of education are often correlated with higher earnings, so as education increases, income tends to increase as well.

Exercise and physical fitness: Regular exercise is positively correlated with improved physical fitness. The more frequently a person exercises, the more likely they are to have better physical health.

In these examples, higher values of one variable (height, education, exercise) tend to go together with higher values of the related variable (weight, income, fitness). The correlation alone does not show that one causes the other.

A negative correlation occurs when an increase in one variable is associated with a decrease in another variable. Here, the variables move in opposite directions—when one rises, the other falls.

Examples of Negative Correlation:

Alcohol consumption and cognitive performance: Higher levels of alcohol consumption are negatively correlated with cognitive function. As alcohol intake increases, cognitive performance tends to decrease.

Time spent on social media and sleep quality: More time spent on social media is often negatively correlated with sleep quality. The longer people engage with social media, the less likely they are to get restful sleep.

Stress and mental well-being: Higher stress levels are often correlated with lower mental well-being. As stress increases, a person’s mental health and overall happiness may decrease.

In these scenarios, as one variable increases (alcohol consumption, social media use, stress), the other variable (cognitive performance, sleep quality, mental well-being) decreases.

A zero correlation means that there is no linear relationship between two variables. Changes in one variable are not accompanied by any predictable change in the other, so there is no consistent pattern linking them. Strictly speaking, a coefficient of zero rules out only a straight-line pattern; two variables can still be related in a curved way.

Examples of Zero Correlation:

Shoe size and intelligence: There is no relationship between the size of a person’s shoes and their intelligence. The variables are entirely unrelated.

Height and musical ability: Someone’s height has no bearing on how well they can play a musical instrument. There is no correlation between these variables.

Rainfall and exam scores: The amount of rainfall on a particular day has no correlation with the exam scores students achieve in school.

In these cases, the variables (shoe size, height, rainfall) show no consistent relationship with the other variables (intelligence, musical ability, exam scores), indicating a zero correlation.
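One caveat is worth a quick illustration: a coefficient of zero only tells you there is no straight-line relationship. The sketch below uses made-up numbers in which one variable is completely determined by the other, yet Pearson's coefficient comes out as zero because the pattern is U-shaped. The helper name `pearson_r` and the data are assumptions for illustration only.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: y depends entirely on x, but through a U-shaped curve.
xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x ** 2 for x in xs]

r = pearson_r(xs, ys)
print(f"Pearson r for the U-shaped data: {r:.2f}")
```

This is one reason to look at a scatter plot before concluding that two variables are unrelated.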

Correlational research can be conducted using various methods, each offering unique ways to collect and analyze data. Two of the most common approaches are surveys and questionnaires, and observational studies. Both methods allow researchers to gather information on naturally occurring variables, helping to identify patterns or relationships between them.

How They Are Used in Correlational Studies:

Surveys and questionnaires gather self-reported data from participants about their behaviors, experiences, or opinions. Researchers use these tools to measure multiple variables and identify potential correlations. For example, a survey might examine the relationship between exercise frequency and stress levels.

Benefits:

Efficiency: Surveys and questionnaires enable researchers to gather large amounts of data quickly, making them ideal for studies with big sample sizes. This speed is especially valuable when time or resources are limited.

Standardization: Surveys ensure that every participant is presented with the same set of questions, reducing variability in how data is collected. This enhances the reliability of the results and makes it easier to compare responses across a large group.

Cost-effectiveness: Administering surveys, particularly online, is relatively inexpensive compared to other research methods like in-depth interviews or experiments. Researchers can reach wide audiences without significant financial investment.

Limitations:

Self-report bias: Since surveys rely on participants’ self-reported information, there’s always a risk that responses may not be entirely truthful or accurate. People might exaggerate, underreport, or provide answers they think are socially acceptable, which can skew the results.

Limited depth: While surveys are efficient, they often capture only surface-level information. They can show that a relationship exists between variables but may not explain why or how this relationship occurs. Open-ended questions can offer more depth but are harder to analyze on a large scale.

Response rates: A low response rate can be a major issue, as it reduces the representativeness of the data. If those who respond differ significantly from those who don’t, the results may not accurately reflect the broader population, limiting the generalizability of the findings.

Process of Observational Studies:

In observational studies, researchers observe and record behaviors in natural settings without manipulating variables. This method helps assess correlations, such as observing classroom behavior to explore the relationship between attention span and academic engagement.

Effectiveness:

Benefits:

Limitations:

Several statistical techniques are commonly used to analyze correlational data, allowing researchers to quantify the relationships between variables.

Correlation Coefficient:

The correlation coefficient is a key tool in correlation analysis. It is a numerical value that ranges from -1 to +1, indicating both the strength and direction of the relationship between two variables. The most widely used correlation coefficient is Pearson’s correlation, which is ideal for continuous, linear relationships between variables.

+1 indicates a perfect positive correlation, where both variables increase together.

-1 indicates a perfect negative correlation, where one variable increases as the other decreases.

0 indicates no correlation, meaning there is no observable linear relationship between the variables.

Other correlation coefficients include Spearman’s rank correlation (used for ordinal data or for relationships that are monotonic but not linear) and Kendall’s tau (also rank-based, and often preferred for small samples or data with many tied ranks).
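To make the difference between Pearson's and Spearman's coefficients concrete, here is a minimal sketch in plain Python. It computes Pearson's coefficient from its definition and Spearman's coefficient as Pearson's coefficient applied to ranks. The function names and the data are illustrative assumptions; in practice a statistics package would normally be used. Kendall's tau is not shown.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result

def spearman_rho(xs, ys):
    """Spearman's rank correlation: Pearson's r computed on the ranks."""
    return pearson_r(ranks(xs), ranks(ys))

# Hypothetical data: y always rises with x, but along a curve (y = x cubed).
xs = [1, 2, 3, 4, 5, 6]
ys = [x ** 3 for x in xs]

pearson = pearson_r(xs, ys)
spearman = spearman_rho(xs, ys)
print(f"Pearson r    = {pearson:.3f}")
print(f"Spearman rho = {spearman:.3f}")
```

Because the relationship is perfectly monotonic, the ranks line up exactly and Spearman's coefficient reaches 1, while Pearson's coefficient stays below 1 because the points do not fall on a straight line.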

Scatter Plots:

Scatter plots visually represent the relationship between two variables, with each point corresponding to a pair of data values. Patterns within the plot can indicate positive, negative, or zero correlations. To explore scatter plots further, visit: What is a Scatter Plot?

Regression Analysis:

While primarily used for predicting outcomes, regression analysis aids in correlational studies by examining how one variable may predict another, providing a deeper understanding of their relationship without implying causation. For a comprehensive overview, check out this resource: A Refresher on Regression Analysis.
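As a rough illustration of how regression describes one variable in terms of another, the sketch below fits a straight line by ordinary least squares. The survey-style numbers (weekly exercise hours and a stress score) are invented for the example, and `fit_line` is a hypothetical helper name, not part of any library.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical survey data: weekly exercise hours and a self-reported stress score.
exercise_hours = [1, 2, 3, 4, 5]
stress_score = [8, 7, 5, 4, 2]

slope, intercept = fit_line(exercise_hours, stress_score)
predicted_at_6 = intercept + slope * 6

print(f"stress = {intercept:.2f} + ({slope:.2f}) * hours")
print(f"predicted stress at 6 hours: {predicted_at_6:.2f}")
```

The negative slope says that higher exercise hours go with lower stress scores in this sample. As with the correlation itself, the fitted line describes the association and does not show that exercise lowers stress.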

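The correlation coefficient is central to interpreting results. Depending on its value, researchers can classify the relationship between variables. The cut-offs used here are common rules of thumb rather than fixed standards, and values between 0.3 and 0.7 in absolute size are usually described as moderate: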

Strong positive correlation (+0.7 to +1.0): As one variable increases, the other increases closely in step with it.

Weak positive correlation (+0.1 to +0.3): A slight upward trend indicates a weak relationship.

Strong negative correlation (-0.7 to -1.0): As one variable increases, the other decreases closely in step with it.

Weak negative correlation (-0.1 to -0.3): A slight downward trend, where one variable slightly decreases as the other increases.

Zero correlation (0): No linear relationship exists; knowing one variable does not help predict the other.
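The bands above can be turned into a small helper for labelling a coefficient. This is only a sketch: the "strong" and "weak" cut-offs follow the ranges listed here, while the "moderate" label for the band in between and the "negligible" label for values closer to zero than 0.1 are assumptions added so that every value gets a label. The function name is hypothetical.

```python
def describe_correlation(r):
    """Label a correlation coefficient using rule-of-thumb bands."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("a correlation coefficient must lie between -1 and +1")
    size = abs(r)
    if size >= 0.7:
        strength = "strong"
    elif size > 0.3:
        strength = "moderate"  # assumed label for the band the list leaves unnamed
    elif size >= 0.1:
        strength = "weak"
    else:
        return "negligible or zero correlation"  # assumed label for |r| < 0.1
    direction = "positive" if r > 0 else "negative"
    return f"{strength} {direction} correlation"

for value in (0.85, 0.5, -0.2, 0.0):
    print(f"{value:+.2f}: {describe_correlation(value)}")
```

Labels like these are a convenience for reporting. What counts as a strong relationship depends on the field and on the sample size.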

One of the most crucial points when interpreting correlational results is avoiding the assumption that correlation implies causation. Just because two variables are correlated does not mean one causes the other. There are several reasons for this caution:

Third-Variable Problem:

A third, unmeasured variable may be influencing both correlated variables. For example, a study might show a correlation between ice cream sales and drowning incidents. However, the third variable—temperature—explains this relationship; hot weather increases both ice cream consumption and swimming, which could lead to more drownings.
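The ice cream example can be simulated to show how a third variable produces a correlation on its own. In this sketch all numbers are simulated in arbitrary units: temperature is drawn at random, and both ice cream sales and drowning incidents are generated from temperature plus independent noise, with no direct link between them. The partial correlation formula then estimates the relationship once temperature is held constant. Variable and function names are assumptions for illustration.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def partial_r(r_xy, r_xz, r_yz):
    """Correlation between x and y after removing the linear effect of z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

rng = random.Random(42)  # fixed seed so the simulation is repeatable
n = 2000

# Simulated data: temperature drives both outcomes; they never affect each other.
temperature = [rng.gauss(0, 1) for _ in range(n)]
ice_cream = [t + rng.gauss(0, 1) for t in temperature]
drownings = [t + rng.gauss(0, 1) for t in temperature]

r_raw = pearson_r(ice_cream, drownings)
r_controlled = partial_r(
    r_raw,
    pearson_r(ice_cream, temperature),
    pearson_r(drownings, temperature),
)

print(f"ice cream vs drownings:               r = {r_raw:.2f}")
print(f"same, with temperature held constant: r = {r_controlled:.2f}")
```

The raw correlation is clearly positive even though neither variable influences the other, and it shrinks toward zero once temperature is accounted for.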

Directionality Problem:

Correlation does not indicate the direction of the relationship. Even if a strong correlation is found between variables, it’s not clear whether variable A causes B, or B causes A. For example, if researchers find a correlation between stress and illness, it could mean stress causes illness, or that being ill leads to higher stress levels.

Coincidental Correlation:

Sometimes, two variables may be correlated purely by chance. This is known as a spurious correlation. For example, there might be a correlation between the number of movies Nicolas Cage appears in during a year and the number of drownings in swimming pools. This relationship is coincidental and not meaningful.
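Chance correlations are easy to reproduce. The sketch below generates many pairs of completely unrelated random series, each only ten points long, and reports the strongest correlation found among them. The number of pairs, the series length, and the seed are arbitrary choices made for illustration.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

rng = random.Random(7)  # fixed seed so the simulation is repeatable
n_pairs, n_points = 200, 10

# Compare 200 pairs of independent random series and keep the largest |r|.
strongest = 0.0
for _ in range(n_pairs):
    a = [rng.gauss(0, 1) for _ in range(n_points)]
    b = [rng.gauss(0, 1) for _ in range(n_points)]
    strongest = max(strongest, abs(pearson_r(a, b)))

print(f"strongest |r| among {n_pairs} unrelated pairs: {strongest:.2f}")
```

With short series and many comparisons, at least one pair will usually look strongly related. This is why a striking correlation found by searching through many variables deserves extra scepticism.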

Correlational research is used to explore relationships between behaviors, emotions, and mental health. Examples include studies on the link between stress and health, personality traits and life satisfaction, and sleep quality and cognitive function. These studies help psychologists predict behavior, identify risk factors for mental health issues, and inform therapy and intervention strategies.

Businesses leverage correlational research to gain insights into consumer behavior, enhance employee productivity, and refine marketing strategies. For instance, they may analyze the relationship between customer satisfaction and brand loyalty, employee engagement and productivity, or advertising expenditure and sales growth. This research supports informed decision-making, resource optimization, and effective risk management.

In marketing, correlational research helps identify patterns between customer demographics and buying habits, enabling targeted campaigns that improve customer engagement.

A significant challenge in correlational research is the misinterpretation of data, particularly the false assumption that correlation implies causation. For instance, a correlation between smartphone use and poor academic performance might lead to the incorrect conclusion that one causes the other. Common pitfalls include spurious correlations and overgeneralization. To avoid misinterpretations, researchers should use careful language, control for third variables, and validate findings across different contexts.

Ethical concerns in correlational research include obtaining informed consent, maintaining participant privacy, and avoiding bias that could lead to harm. Researchers must ensure participants are aware of the study’s purpose and how their data will be used, and they must protect personal information. Best practices involve transparency, robust data protection protocols, and ethical review by an ethics board, particularly when working with sensitive topics or vulnerable populations.

Mind the Graph is a valuable platform that aids scientists in effectively communicating their research through visually appealing figures. Recognizing the importance of visuals in conveying complex scientific concepts, it offers an intuitive interface with a diverse library of templates and icons for creating high-quality graphics, infographics, and presentations. This customization simplifies the communication of intricate data, enhances clarity, and broadens accessibility to diverse audiences, including those outside the scientific community. Ultimately, Mind the Graph empowers researchers to present their work in a compelling manner that resonates with stakeholders, from fellow scientists to policymakers and the general public. Visit our website for more information.

Learning how to prepare a thesis proposal is the first step toward crafting a research project that is both impactful and academically rigorous. A thesis proposal begins with a good idea. At first glance it may look like just another document to write, but it is much more than that. This article will guide you through the essential steps of preparing a thesis proposal, ensuring clarity, structure, and impact.

The proposal document is your gateway to a research program and a guideline for you to follow throughout it. Preparing a thesis proposal therefore begins with finding the right research question. Arriving at an inspiring question helps a researcher chart the path of their future work, whatever the field.

We believe all the scientists reading this blog post would agree that inspiration for research can come at any time and in any place. Once you have decided to work in science to uncover the truths of nature, you must keep your mind open to ideas. This openness, together with looking at facts neutrally, will help you build the first phase of your thesis proposal. With that said, let us dive into the subject and learn the components required to build a compelling thesis proposal.