We’ve all seen words like ‘ground-breaking’, ‘revolutionary’, and ‘life-changing’ used to describe scientific publications. But how exactly do we measure the impact of a scientific piece? That’s where the science impact factor comes in. Join me as we discuss, dissect, and delve into this essential instrument of research assessment.

Introduction to Science Impact Factor

Definition and Concept of Science Impact Factor

At its core, the Science Impact Factor (SIF), more commonly known as the Journal Impact Factor (JIF), is a metric that indicates the average number of citations that articles published in a specific journal receive within a defined window, typically the two preceding years. Originally introduced by Eugene Garfield at the Institute for Scientific Information (ISI), this measurement tool has gradually become embedded in academic life.

The idea behind SIF is to quantify the influence, or ‘impact’, of academic journals within their fields. Essentially, it is one way to rank these outlets by their perceived relative importance among their peers.

History and Development of Science Impact Factor

The history of SIF goes back to 1963, when Dr Eugene Garfield conceived it merely as an aid to help librarians decide which scholarly journals to include in their collections. However, its use soon expanded beyond libraries.

In essence, researchers began using it as a measuring stick for the prestige linked with publishing in certain journals. As such, over time, it developed from being just another statistic into an emblem representing scientific authority.

Yet, despite its prominent role today, remember that it was not originally intended for this purpose, which is one reason its use in this way draws criticism. More on that later!

Importance and Significance of Science Impact Factor in The Scientific Community

Among academic peers, having one’s work heavily cited is akin to winning a discerning nod of approval, reinforcing the standing it commands within the discipline. Consequently, journals with higher science impact factors are often regarded as more authoritative because their articles are, on average, cited more often.

Moreover, SIF also influences researchers’ career prospects. Promotion and grant decisions often take individuals’ publication records into account, including the standing of the journals in which their work appears. As a result, SIF has become a crucial piece in the puzzle of academic recognition and progression.

However, while it holds visible importance, it’s not an impeccable measure. The ensuing parts will delve deeper into understanding how this tool calculates impact, its various uses, potential limitations, and future implications within the scientific community. So stay tuned!

Calculation and Evaluation of Science Impact Factor

In this section, we look at how the science impact factor is computed, which considerations come into play during its calculation, and how a journal’s impact factor is ultimately determined.

Methodology and Formula for Calculating the Science Impact Factor

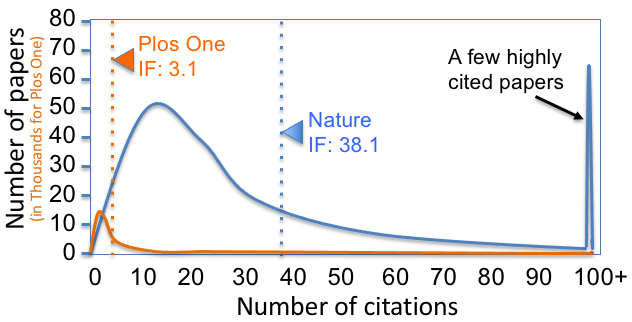

The science impact factor is determined by a deliberately simple formula, devised decades ago to measure a journal’s influence in academic circles. In essence, it is the average number of citations that a journal’s recently published items receive in a given year.

Here is how it works: the number of citations received in a given year (year X) by all items the journal published in the two preceding years (X-1 and X-2) is divided by the number of citable items, mainly research articles and reviews, that it published in those same two years. The result is the science impact factor for year X.

For example, if Journal Z published 100 citable items over the previous two years and those items were cited 200 times this year, its impact factor for this year would be 200/100 = 2.0.

Simply put:

Science Impact Factor (Year X) = (Citations received in Year X by items published in Years X-1 and X-2) / (Citable items published in Years X-1 and X-2)
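To make the arithmetic concrete, here is a minimal Python sketch of the two-year calculation. The function name `impact_factor` and the per-year dictionaries are hypothetical illustrations rather than part of any Clarivate tool, and the even split of Journal Z’s 100 items across the two years is an assumption made only for the example.

```python
from __future__ import annotations


def impact_factor(year: int,
                  citations: dict[int, int],
                  citable_items: dict[int, int]) -> float:
    """Two-year impact factor for `year` (hypothetical helper).

    citations[y]     -> citations received during `year` by items published in y
    citable_items[y] -> research articles and reviews published in y
    """
    window = (year - 1, year - 2)  # only the two preceding publication years count
    numerator = sum(citations.get(y, 0) for y in window)
    denominator = sum(citable_items.get(y, 0) for y in window)
    if denominator == 0:
        raise ValueError("no citable items published in the two-year window")
    return numerator / denominator


# Hypothetical "Journal Z": 100 citable items split evenly over the two
# preceding years, cited 200 times in total during 2024.
jz_citations = {2023: 120, 2022: 80}
jz_items = {2023: 50, 2022: 50}
print(impact_factor(2024, jz_citations, jz_items))  # 2.0
```

Citations to items from outside the window (for example, a 2021 paper cited in 2024) are simply ignored by this sketch, mirroring the two-year rule.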

Factors Considered in Calculating the Science Impact Factor

While calculating the science impact factor may seem fairly straightforward, several details shape the final figure:

- Citable items: Firstly, not every item a journal publishes counts towards the denominator. Only citable items, essentially original research papers and review articles, do.

- Citation sources: Secondly, the citations in the numerator can come from any publication indexed in Clarivate Analytics’ Web of Science during year X, not just from the journal itself. What must fall within the two-year window is the publication date of the cited item, not of the citing one.

- Publication type diversity: Finally, journals also publish editorials, letters, news pieces, and book reviews. These are left out of the denominator, yet any citations they attract still count in the numerator, so a large volume of frequently cited front matter can skew the resulting figure upwards.
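To see why the mix of item types matters, here is a minimal sketch assuming a simplified rule in which only articles and reviews are citable, while citations to every item count in the numerator. Clarivate’s actual classification of citable items is more detailed; the `Item` class, `CITABLE_KINDS`, and the journal data are all hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Item:
    kind: str       # e.g. "article", "review", "editorial", "letter"
    citations: int  # citations received in the census year (year X)


# Simplifying assumption: only these kinds are treated as "citable items".
CITABLE_KINDS = {"article", "review"}


def impact_factor_from_items(items: list[Item]) -> float:
    """`items` = everything the journal published in years X-1 and X-2."""
    numerator = sum(it.citations for it in items)                     # citations to every item
    denominator = sum(1 for it in items if it.kind in CITABLE_KINDS)  # citable items only
    return numerator / denominator


# Hypothetical journal: 90 articles and 10 reviews, plus 20 editorials.
research = [Item("article", 2) for _ in range(90)] + [Item("review", 6) for _ in range(10)]
editorials = [Item("editorial", 1) for _ in range(20)]

print(impact_factor_from_items(research))               # 2.4
print(impact_factor_from_items(research + editorials))  # 2.6
```

The twenty editorials add 20 citations to the numerator but nothing to the denominator, so the figure rises from 2.4 to 2.6 without any change in the research content.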

Together, these details determine how closely the figure reflects a journal’s real “impact” in its field.

Evaluation Process for Determining the Science Impact Factor of a Journal

The evaluation is run by Clarivate Analytics, the organization currently responsible for computing the science impact factor and publishing it annually in its Journal Citation Reports (JCR).

The process draws on citation data from thousands of academic and medical journals, which calls for stringent standardization to ensure credibility and consistency. This includes:

- Rigorously tracking all published items alongside their inbound citations using Web of Science.

- Harmonizing calculations across diverse scientific domains.

- Applying the time window strictly, so that only items published in the two preceding years are considered.

Besides being valued as an intuitive way to gauge journal prestige, this system helps bibliometricians and researchers compare journals’ citation patterns, particularly within the same field, and so supports better-informed publishing decisions.

Uses and Applications of Science Impact Factor

As we delve deeper into the topic, it’s worth understanding the various purposes the science impact factor serves. It is used to assess journals, it shapes academic publishing decisions, and it even affects funding decisions by agencies. Its influence doesn’t stop there: it also plays a critical role in shaping researchers’ career trajectories.

Importance of Science Impact Factor in Assessing Journal Quality

Among scientific journals, perceived quality matters more than mere fame, and this is where the science impact factor comes in. The value indicates how often papers from a specific journal are cited, on average, in the two years after publication. In general, a higher impact factor is taken to indicate that a journal plays a more influential role within its scientific discipline.

A study published in PLoS ONE supports this, showing that the most prestigious scientific journals tend to have higher journal impact factors[^1^]. Findings like these underpin the common rule of thumb that, when assessing journal quality, ‘a higher science impact factor means better’, although, as later sections show, the shortcut has clear limits.

Influence of Science Impact Factor on Publishing Decisions by Researchers and Authors

The effect carries over into decisions about where to publish. Since more citations tend to signify greater utility and recognition among peers[^2^], authors often target journals with high science impact factors.

The motivation is simple: researchers hope that a high-visibility venue will boost their citation count down the line, an essential ingredient of academic progression and reputation.

Applications of Science Impact Factor in Funding Decisions by Granting Agencies

Granting agencies use various metrics to steer funding towards the most promising projects and, as you may have guessed, one of those metrics is our focal point: the science impact factor.

Why? Several studies have reported some correlation between high-impact-factor journals and articles of superior quality or value[^3^]. As a result, funders have been known to favour researchers whose work appears in high-impact-factor journals.


Impact of Science Impact Factor on Career Advancement for Researchers

The benefits reaped from superior science impact factors influence the career advancement opportunities available for researchers too. Not only does publishing in high-impact journals act as a catalyst for their scientific reputation, but it also enhances possibilities of employment within prestigious research institutions[^4^].

Every incremental step up the ladder can make the difference between securing tenure at a top university and fading into academic obscurity. It’s an intensely competitive scholarly world, and research that appears in high-impact journals and accumulates citations can resonate loudly across academia.

[^1^]: PLoS ONE: Prestige versus Impact
[^2^]: Journal of Informetrics: Does quantity lead to more citations?
[^3^]: BMC Medical Research Methodology: Impact factor correlations with article quality
[^4^]: Nature Careers: Publish-or-perish pressure steers young researchers away from innovative projects

Criticism and Limitations of Science Impact Factor

Inapplicability of the Impact Factor to Individual Researchers or Articles

The science impact factor, while designed to gauge the influence of a scientific journal, is often misapplied at the level of individual articles or researchers. Critics argue that it fails to mirror an individual’s research impact for several reasons:

- Not all articles published in high-impact factor journals are impactful. Some may never be cited.

- Research contributions differ widely within multi-author papers but aren’t visible when using average measures like the impact factor.

Therefore, evaluating a scientist’s work based on a journal’s impact factor can lead to misrepresented importance or neglect of significant research.

Differences in Impact Factor across Different Scientific Disciplines

Interestingly, typical impact factor values also vary across disciplines, adding another layer of bias. Here is why:

- Citation practices vary from field to field. An astronomy paper might carry more references than one in mathematics, leading to higher citation rates in astronomy.

- The potential readership differs among subjects, which affects the number of citations received.

These differences make cross-discipline comparison using just the science impact factor nearly impractical.

Critiques Regarding the Correlation between Impact Factor and Research Quality

Critics also contest whether there exists any direct relationship between science impact factor and research quality. This question arises due to:

- Prevalence of ‘salami slicing,’ where authors divide their study into multiple papers to boost publication count.

- Gaming tactics such as excessive self-citation by researchers and coercive citation by editors.

Both practices can inflate publication and citation counts, and potentially the science impact factor, without improving actual research quality.
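One way to gauge how much self-citation props up the figure is to recompute it without the journal’s citations to itself, a variant that Journal Citation Reports also lists. The sketch below assumes a hypothetical tally of which journals supplied the census-year citations; the journal names, numbers, and the `impact_factor` helper are illustrative only, and salami slicing is not modelled.

```python
from collections import Counter


def impact_factor(citing_journals: Counter, journal: str, citable_items: int,
                  exclude_self: bool = False) -> float:
    """Two-year impact factor from a tally of which journals supplied the citations.

    citing_journals maps each citing journal's name to the number of census-year
    citations it made to `journal`'s items from the two preceding years.
    """
    cites = sum(citing_journals.values())
    if exclude_self:
        cites -= citing_journals[journal]  # drop citations the journal made to itself
    return cites / citable_items


# Hypothetical tally for a journal with 100 citable items in the window.
tally = Counter({"J Hypothetical Sci": 90, "Other Journal A": 150, "Other Journal B": 60})
with_self = impact_factor(tally, "J Hypothetical Sci", 100)
without_self = impact_factor(tally, "J Hypothetical Sci", 100, exclude_self=True)
share = tally["J Hypothetical Sci"] / sum(tally.values())
print(with_self, without_self, f"{share:.0%}")  # 3.0 2.1 30%
```

A large gap between the two values, or a high self-citation share, does not prove misconduct on its own, but it flags where a closer look is warranted.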

Impact of Editorial Policies on Accuracy of Impact Factor Calculations

Lastly, certain editorial policies also influence a journal’s science impact factor, which calls its objectivity further into question:

- Some journals preferentially publish review articles, knowing they tend to be cited more frequently.

- Rejecting articles predicted to attract few citations boosts the impact factor.

Such calculated choices can distort the figure, making it a less reliable tool for judging the intrinsic worth of published studies.

In light of these criticisms, I’d urge readers not to regard science impact factors as an absolute indicator. It’s crucial to recognize their limitations and use them in conjunction with other tools when assessing research contributions. We need a more holistic approach that incorporates aspects like systematic reviews, qualitative assessments, societal impacts and altmetrics measurements.

As we navigate this complex debate around science impact factors, remember that the emphasis should always remain on encouraging high-quality, ethical research irrespective of metrics. That is the soul of scientific advancement!

Alternative Measures of Scientific Impact

While the science impact factor has been a prominent tool to assess scientific impact, it is not the only one. Several others have surfaced in recent years to provide more nuanced and comprehensive evaluations.

Other Metrics Used to Assess Scientific Impact (e.g., h-index, altmetrics)

One widely accepted alternative is the h-index, developed by physicist Jorge Hirsch. Unlike the impact factor, which describes journals, the h-index measures an individual author’s productivity and citation impact. A scholar has an h-index of ‘n’ if ‘n’ of their papers have each received at least ‘n’ citations. This metric sidesteps some limitations of the science impact factor because it reflects both the quantity of a researcher’s work and the citations that work attracts over time.
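The definition translates directly into a short Python function. The function name and the example citation counts are hypothetical; the sketch simply ranks papers by citations and finds the largest rank at which the paper still has at least that many citations.

```python
from __future__ import annotations


def h_index(citations: list[int]) -> int:
    """Largest h such that h of the papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have >= rank citations
        else:
            break     # counts only fall from here, so h cannot grow further
    return h


print(h_index([10, 8, 5, 4, 3]))  # 4
print(h_index([25, 8, 5, 3, 3]))  # 3
```

The second example shows a design property worth noting: one very highly cited paper (25 citations) does not raise the h-index by itself, which is why the measure rewards sustained output over a single hit.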

Another approach gaining ground is altmetrics – short for alternative metrics. This system goes beyond traditional citation-based metrics, capturing online engagement with research outputs across various digital platforms such as reference managers, social media networks, news outlets, blogs, and policy documents.

Furthermore, the Eigenfactor® Score gauges a journal’s overall influence across the citation network, weighting each citation by the influence of the journal it comes from and excluding a journal’s citations to itself, rather than simply averaging citations per article as the Science Impact Factor does.
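The network idea behind this kind of score can be illustrated with a simplified PageRank-style power iteration. This is a sketch under stated assumptions, not the official Eigenfactor algorithm: the real method uses a five-year window, weights its teleportation step by journals’ article counts, and scales its final scores differently. Here the damping factor `alpha = 0.85`, the uniform teleportation, the `influence_scores` name, and the citation matrix are all hypothetical choices.

```python
from __future__ import annotations


def influence_scores(cites: list[list[int]], alpha: float = 0.85,
                     tol: float = 1e-12, max_iter: int = 1000) -> list[float]:
    """Simplified PageRank-style journal influence (a sketch, not the official Eigenfactor).

    cites[i][j] is the number of citations from journal j to journal i.
    """
    n = len(cites)
    # Ignore journal self-citations by zeroing the diagonal.
    m = [[0 if i == j else cites[i][j] for j in range(n)] for i in range(n)]
    # Total citations each journal j gives to other journals.
    given = [sum(m[i][j] for i in range(n)) for j in range(n)]
    scores = [1.0 / n] * n
    for _ in range(max_iter):
        # Each journal passes its current score along its outgoing citations,
        # so a citation from an influential journal is worth more.
        new = [alpha * sum(m[i][j] / given[j] * scores[j] for j in range(n) if given[j])
               for i in range(n)]
        # The score of journals that cite no one, plus the (1 - alpha) "teleport"
        # share, is spread evenly so the scores keep summing to 1.
        dangling = sum(scores[j] for j in range(n) if given[j] == 0)
        new = [x + (alpha * dangling + 1 - alpha) / n for x in new]
        converged = sum(abs(a - b) for a, b in zip(new, scores)) < tol
        scores = new
        if converged:
            break
    return scores


# Hypothetical citation counts among three journals (rows cited, columns citing).
example = [
    [50, 10, 30],
    [5, 40, 10],
    [20, 0, 60],
]
print([round(s, 3) for s in influence_scores(example)])
```

Because the diagonal is discarded, changing how often a journal cites itself leaves its score unchanged in this sketch.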

Strengths and Weaknesses of Alternative Measures Compared to The Science Impact Factor

As a line often attributed to Einstein (though more likely coined by sociologist William Bruce Cameron) puts it: “Not everything that can be counted counts, and not everything that counts can be counted.” Each of these alternatives to the science impact factor offers its own strengths, but each also has shortcomings.

The strength of the h-index lies in its capacity to gauge an individual scientist’s lasting contribution rather than temporary popularity. However, it cannot differentiate between active and dormant scientists who have similar publication histories.

Altmetrics draws on modern data sources for a broader evaluation scope, capturing immediate societal impact that traditional metrics often miss. Its weaknesses are its susceptibility to manipulation and the fact that social engagement does not necessarily reflect scholarly importance.

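Eigenfactor®, through its network-based scoring model, offers insight into journal prestige and the multi-dimensional influence of scientific publications, and it partly adjusts for differing citation practices across disciplines; its companion Article Influence Score additionally normalizes for journal size. Because journal self-citations are excluded, it is less exposed to simple self-citation, but it can still be distorted by coordinated citation among groups of journals.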

Therefore, no single measure is universally valid or foolproof. Each complements the others by capturing aspects they overlook, together forming a mosaic of insights into the multifaceted nature of scientific impact. A diverse metric toolkit gives a more comprehensive picture than any single index and serves as a reminder that good science transcends numbers.

Institutional Responses to Criticism of the Science Impact Factor

Efforts by Institutions and Organizations to Address the Limitations of Impact Factor

Amid growing criticism of the reliability and impartiality of the science impact factor, various institutions and organizations have made notable progress in identifying its limitations. For instance, researchers have increasingly examined whether the rating truly mirrors a journal’s prestige or merely creates an illusion of it.

Put simply, there is broad acknowledgement that excessive reliance on science impact factors might compromise scientific ingenuity and quality. Special mention goes to the pioneering San Francisco Declaration on Research Assessment (DORA), drafted in 2012, which recommends against using journal-based metrics such as the impact factor as a surrogate for the quality of individual articles or researchers, and calls for a more holistic evaluation that looks beyond citation counts.

Additionally, institutions such as the Wellcome Trust and UK Research and Innovation (UKRI) are spearheading reforms to address these flaws. Their objectives include fostering responsible use of metrics in funding decisions and encouraging ethical practice among researchers under pressure to publish in high-impact journals.

Implementing More Comprehensive Evaluation Systems for Research Assessment

The critique around the science impact factor catalysed audacious changes in research evaluation systems across global scientific domains. There is an increasing trend towards adopting multi-dimensional methodologies that intend to encapsulate a comprehensive view of research efficacy beyond just bibliometric measures.

Semantic Scholar, for example, uses machine-learning models to identify ‘highly influential citations’, that is, citations in which the cited work substantially shapes the citing paper, rather than counting every citation equally.

Another option is the Publish or Perish software, which retrieves citation data from sources such as Google Scholar and computes a range of metrics beyond raw counts (the h-index, g-index, and others), giving a more rounded view of both heavily cited papers and those with fewer citations but impactful content. This can ease some of the biases ingrained in traditional methods.

Moreover, organisations are increasingly looking at merits beyond publications, such as public engagement, academic mentoring, and contributions to policy, along with applicants’ concrete plans to foster inclusivity in science through outreach. These complement the publication track record and signal a commitment to future scientific progress.

As the science impact factor continues to spark debate, more comprehensive and equitable systems like these are a step in the right direction. The trend is helping ensure that scientific progress rests on well-rounded assessments rather than on a single metric, paving an innovative path for the future of scientific research.

Ethical Considerations Related to Science Impact Factor

An important aspect of the scientific milieu, and one that cannot be stressed enough, is ensuring ethical practice around the science impact factor. The metric comes with its own set of challenges, including attempts to game the system for higher scores, publication bias affecting calculations, and difficulties in keeping the assessment process transparent and fair.

Issues in Gaming the System for Higher Impact Factors

The pressure to publish high-impact research can sometimes overshadow good scientific conduct. Unfortunately, this has given rise to unscrupulous practices aimed at artificially inflating a journal’s impact factor.

One such illicit practice is “citation stacking”, where authors or journals agree to cite each other’s work to boost their citation counts and, for journals, their impact factors. Similarly, editors may encourage or even insist that authors cite articles from the editors’ own journal, a tactic known as “coercive citation” that inflates the journal’s self-citation numbers.

While these actions may initially boost a journal’s ranking or an author’s reputation, they ultimately undermine the integrity of scholarly publishing and of science itself, leading us away from genuine attempts at advancing knowledge.

Publication Bias and its Impact on the Accuracy of Impact Factor Calculations

Publication bias refers to the trend of researchers and editors favoring results showing clear-cut significant findings over studies with negative or vague results.

When mainly ‘positive’ outcomes are published, the journal record gives a skewed picture of the evidence, and the citations those results attract feed directly into journals’ science impact factors. It also portrays an unrealistic image of scientific inquiry in which every study yields a major breakthrough, which is far from reality. By neglecting the null results we encounter on the way to genuine discoveries, we create a misleading narrative about what constitutes progress in science.

This systematic suppression hampers attempts at reproduction, an essential step in validating scientific findings, and casts a shadow over future research paths.


Challenges in Ensuring Transparency and Fairness in the Assessment Process

Transparency and fairness are foundational ideals that every scientific endeavor should strive for. When it comes to the assessment procedures behind science impact factors, however, achieving them is a prickly task.

A prime challenge is the uneven distribution of citations. Not all research fields advance at the same pace or have equally large audiences: some see rapid progress and numerous publications, while others are more specialized, with fewer but no less important advances.

Existing metrics do little to account for these disparities, which can marginalize certain fields despite their utility and importance. Some improvements have been made over time, but changing methods midstream breeds its own form of bias, because figures calculated under different rules are no longer directly comparable; it’s like comparing apples with oranges.

Another concern is the use of the science impact factor as a standalone quantitative measure, without considering the qualitative factors that contribute to a study’s credibility and relevance. This is a slippery slope towards reductionism that cheapens the actual merit behind the work.

Meeting these challenges calls for balanced solutions, such as blending new, more comprehensive metrics with traditional ones, so that we truly value what matters: strong research that aids societal progress.

Current Trends and Future Directions in Science Impact Factor

As often happens in the dynamic scientific landscape, the science impact factor is experiencing changes and adaptations resulting from continuous advancements in research methodologies and publication practices.

Evolution of Impact Factor Metrics and Their Adaptation to New Scientific Fields

Traditionally, the impact factor has played a prominent role in bibliometrics, the field dedicated to analyzing published material. It was designed with print publications in mind, and now that scholarly communication is largely digital, the tool has had to adapt to the changing landscape.

With newer disciplines such as data science and computational biology emerging, fields increasingly overlap, which does not sit well with the traditional subject categories used by the databases that calculate impact factors. This has sparked various initiatives to accommodate these new areas of study and broaden the scope of impact factor calculations. Together with ever-evolving digital analysis tools, the trend reflects a continuing effort to improve accuracy.

The Role of Open Access Publishing in Redefining the Significance of Impact Factor

Following closely behind are the changes brought about by open access (OA) publishing, another giant leap towards democratizing knowledge dissemination.


When OA journals first entered the scholarly communication system, their quality was debated, partly because of concerns about ‘pay-to-publish’ models. Over time, however, many have grown their science impact factors significantly, rewarding those who make high-quality research available without paywalls.

The rise of OA publishing has also led many to question exclusive reliance on impact factors when judging a journal’s worth or an article’s influence. Some argue that raw citation counts from search services such as Google Scholar could serve a similar purpose more transparently.

Potential Advancements in Measuring and Evaluating Scientific Impact

Lastly, looking ahead, discussions increasingly turn to artificial intelligence (AI) and machine learning (ML). These technologies could potentially automate the identification of influential papers using richer signals than raw citation counts, possibly giving a fairer reflection of research quality.

Moreover, ideas for ‘context-dependent’ impact measures have taken shape to counter biases in overall results. For instance, ‘field-weighted’ metrics, which compare a paper’s citations with the average for similar papers in the same field and year, might help iron out discrepancies arising from differing citation practices across fields.
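As a rough illustration of field weighting, the sketch below divides each paper’s citations by the average for papers in the same field and year. It is similar in spirit to indicators such as Scopus’s Field-Weighted Citation Impact, which also accounts for document type, but the `field_normalized` function, its grouping rule, and the paper data are hypothetical simplifications.

```python
from __future__ import annotations
from collections import defaultdict
from statistics import mean


def field_normalized(papers: list[dict]) -> dict[str, float]:
    """Citations of each paper divided by the average for its (field, year) group.

    A value of 1.0 means "cited as often as the average paper in its field and year".
    Papers in a group whose average is zero are skipped, since the ratio is undefined.
    """
    groups: dict[tuple[str, int], list[int]] = defaultdict(list)
    for p in papers:
        groups[(p["field"], p["year"])].append(p["citations"])
    baseline = {key: mean(vals) for key, vals in groups.items()}
    return {
        p["id"]: p["citations"] / baseline[(p["field"], p["year"])]
        for p in papers
        if baseline[(p["field"], p["year"])] > 0
    }


# Hypothetical papers: mathematics is cited far less than cell biology overall.
papers = [
    {"id": "math-1", "field": "mathematics", "year": 2022, "citations": 2},
    {"id": "math-2", "field": "mathematics", "year": 2022, "citations": 4},
    {"id": "math-3", "field": "mathematics", "year": 2022, "citations": 6},
    {"id": "bio-1", "field": "cell biology", "year": 2022, "citations": 20},
    {"id": "bio-2", "field": "cell biology", "year": 2022, "citations": 40},
    {"id": "bio-3", "field": "cell biology", "year": 2022, "citations": 60},
]
scores = field_normalized(papers)
print(scores["math-3"], scores["bio-3"])  # 1.5 1.5
```

Although the biology paper has ten times as many raw citations, both papers score 1.5, meaning each is cited 50% more than its field’s average.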

Thus, despite the ongoing debates surrounding the science impact factor, it remains an essential tool serving as an indicator of scientific relevance. Yet its future lies in embracing these upcoming advancements for refining its analytical power and perhaps even redefining what ‘impact’ means within the academic community.

Conclusion

Summary of Key Points

Throughout this analysis, we’ve dived deep into the world of impact factors in science. Let’s recall a few salient points. First and foremost, we unpacked what the science impact factor signifies and how it developed historically. We then shed light on how it is calculated and evaluated.

Getting further into the substance of our essay, we examined multiple high-stakes usage scenarios for science impact factor rankings — from making publishing decisions to influencing resource allocation by grant agencies. Additionally, we acknowledged that while the science impact factor is a significant metric within scientific circles, it does encounter criticism and has its recognized limitations.

Interestingly enough, there are alternative models for assessing scientific contributions, each offering unique strengths and weaknesses compared with the traditional science impact factor. Engagement with these criticisms and alternatives has pushed institutions towards comprehensive evaluation systems better suited to assessing the overall value of research.

Lastly, we turned the spotlight on the ethical considerations tied to such metrics. With their perks and privileges come risks of misuse and of gaming the system, and publication bias can further distort final scores, pointing again to the limitations inherent in even widely respected metrics like the science impact factor.

Final Thoughts on the Future Implications and Potential Improvements of Science Impact Factor

As we gaze into the future of research evaluation methods such as the Science Impact Factor (SIF), one thing is certain: change is inevitable. Despite persistent criticism, SIF remains an integral part of academic appraisal frameworks across many disciplines worldwide.

However, it’s clear that modern trends are compelling us towards embracing more holistic approaches for judging scientific contributions beyond just citation count or journal prestige. This transformation won’t happen overnight but will require sustained efforts from academicians, publishers and granting bodies alike.

The rise of open-access publishing significantly challenges traditional modes of knowledge dissemination – pushing us to redefine success benchmarks including those associated with science impact factor. Herein, we may find opportunities for potential advancement in measuring and evaluating scientific journal impact factors.

Finally, burgeoning advancements in big data analytics and machine learning propose revisiting how we assess scholarly worth – potentially heralding a new era of research evaluation that’s decidedly more nuanced and insightful. Only time will tell what fruit these seeds of change will bear.

Until then, the incumbent system, flawed as it is and with the science impact factor at its core, remains our best bet at quantifying academic merit and guiding resource allocation in our collective pursuit of knowledge. Rest assured that the ongoing dialogue within academia keeps pushing us towards a schema that better reflects a researcher’s contribution to their field.

Enhance the Influence and Reach of Your Academic Papers with the Power of Compelling Visual Communication!

Did you realize that elevating your papers’ impact and visibility is achievable through top-notch infographics? It’s true! With the innovative Mind the Graph infographic tool, you can unlock a whole new level of engagement for your research work. Seamlessly integrate captivating visuals that not only amplify the presentation of your paper but also extend its reach to wider audiences. Ready to revolutionize your academic communication? Don’t miss out – sign up today to harness the full potential of this game-changing tool!
