Deep learning is a specialized branch of machine learning that has changed how machines handle complex information. It excels at identifying patterns and relationships in data, which has led to major advances in computer vision (helping machines see and understand images), natural language processing (helping machines understand and generate human language), and speech recognition. In this article, we’ll look at the main ideas and components of deep learning. We’ll discuss neural networks, the core models behind deep learning systems, and explain how these systems are trained and how they improve over time. Overall, we want to show how deep learning has become a powerful technology that is reshaping many industries and our future.

What Is Deep Learning?

Deep learning is a subfield of machine learning that uses artificial neural networks with multiple layers to process and learn from complex data representations. These deep neural networks can extract features from raw data on their own, largely removing the need for manual feature engineering. During training, backpropagation computes how much each internal parameter contributed to the prediction error, and an optimizer such as gradient descent uses those gradients to adjust the parameters iteratively, reducing the error and improving performance over time. Deep learning has achieved remarkable success in tasks such as image recognition, speech recognition, and natural language processing, thanks to its ability to learn from large datasets, capture intricate patterns, and, when trained on enough representative data, generalize well to unseen examples.
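To make the training loop concrete, here is a minimal sketch in plain Python (no deep learning framework) of backpropagation with gradient descent on a tiny two-layer network. The XOR dataset, the four tanh hidden units, the sigmoid output, the squared-error loss, and the learning rate are all illustrative assumptions; real frameworks compute these gradients automatically.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# XOR: a tiny dataset that no single-layer model can fit (illustrative choice)
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def train_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    rng = random.Random(seed)
    # W1[j][i]: weight from input i to hidden unit j; w2[j]: hidden unit j to output
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in DATA:
            # Forward pass: tanh hidden layer, sigmoid output
            h = [math.tanh(sum(W1[j][i] * x[i] for i in range(2)) + b1[j]) for j in range(hidden)]
            p = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            total += (p - y) ** 2 / 2  # squared-error loss for this sample
            # Backward pass (chain rule): error signal at the output pre-activation
            d_out = (p - y) * p * (1 - p)
            # Hidden error signals, computed with w2 before it is updated
            d_hid = [d_out * w2[j] * (1 - h[j] ** 2) for j in range(hidden)]
            # Gradient-descent updates for every weight and bias
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                for i in range(2):
                    W1[j][i] -= lr * d_hid[j] * x[i]
                b1[j] -= lr * d_hid[j]
            b2 -= lr * d_out
        losses.append(total / len(DATA))  # average loss for this epoch
    return losses

losses = train_xor()
print(f"initial loss {losses[0]:.4f}, final loss {losses[-1]:.4f}")
```

The loss recorded for the last epoch should be well below the first, which is the "adjust parameters to minimize prediction error" loop in miniature.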

Deep learning’s capabilities also come with trade-offs. Training deep models typically requires significant computational resources and large labeled datasets, and the complexity of these models makes it difficult to interpret how they reach their predictions. Nevertheless, advances in deep learning have transformed many fields, driving innovation in artificial intelligence and producing state-of-the-art results in areas like image classification, object detection, language translation, and speech synthesis. As deep learning continues to evolve, its impact across domains and its potential for solving complex problems are expected to grow.

History Of Deep Learning

The history of deep learning dates back to the early development of artificial neural networks in the 1940s and 1950s. However, significant advancements and breakthroughs in deep learning have occurred more recently. Here is a summarized timeline of the history of deep learning:

1943: Warren McCulloch and Walter Pitts introduced a mathematical model of the artificial neuron, laying the foundation for artificial neural networks and, eventually, deep learning.

1956-1980: Early development and initial research on artificial neural networks took place during this period. The perceptron algorithm, a fundamental learning algorithm for single-layer neural networks, was introduced by Frank Rosenblatt in 1957.

1986: David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized the backpropagation algorithm, which enables the training of multi-layer neural networks. Although the method had been described earlier, their work revived interest in neural networks and laid the groundwork for later progress in deep learning.

1990s-2000s: Deep learning faced significant challenges due to limitations in computing power and lack of sufficient labeled data. Neural networks with more than a few layers (considered deep) were difficult to train effectively.

2006: Geoffrey Hinton and his colleagues introduced deep belief networks, trained with greedy layer-wise unsupervised pre-training. This technique gave deep neural networks a good initialization and made them easier to train effectively.

2012: AlexNet, a deep convolutional neural network, won the ImageNet competition, significantly advancing the field of computer vision. This breakthrough showcased the power of deep learning and its ability to outperform traditional methods.

2013: DeepMind introduced the Deep Q-Network (DQN), which learned to play Atari 2600 games directly from screen pixels. An extended version, published in 2015 after Google had acquired DeepMind, reached human-level performance on many of the games, demonstrating the potential of deep learning in reinforcement learning tasks.

2014: Ian Goodfellow and colleagues introduced Generative Adversarial Networks (GANs), which transformed the field of image generation and opened up new possibilities for creating realistic synthetic data.

2018: Building on the transformer architecture introduced in 2017, Google released BERT (Bidirectional Encoder Representations from Transformers), which achieved state-of-the-art results on a wide range of language understanding tasks and marked a major step forward for natural language processing.

Present: Deep learning has become a dominant approach in various fields, including computer vision, natural language processing, speech recognition, and healthcare. The field continues to evolve rapidly, with ongoing research focused on improving model architectures, training techniques, interpretability, and addressing ethical considerations.

Advantages Of Deep Learning

Deep learning offers several advantages that have contributed to its widespread adoption and success in various domains. Here are some key advantages of deep learning:

Ability to Learn Complex Patterns: Deep learning models excel at capturing intricate patterns and relationships within data. With multiple layers of neurons, deep neural networks can learn hierarchical representations, allowing them to extract complex features from raw input data. This capability enables deep learning models to handle diverse and high-dimensional data, such as images, text, and audio, often with high accuracy.

Reduced Need for Feature Engineering: Traditional machine learning approaches often require manual feature engineering, where domain experts hand-craft relevant features from the data. In contrast, deep learning models can learn useful features directly from raw data, greatly reducing the need for manual feature engineering. This saves human effort and speeds up the development process.

Scalability and Adaptability: Deep learning models scale well to large datasets. Powerful GPUs and distributed computing frameworks make it possible to train deep neural networks on massive amounts of data, which often improves performance. When trained on sufficient and representative data, deep learning models can also generalize well to new, unseen examples, making them suitable for real-world applications.

End-to-end Learning: Deep learning models facilitate end-to-end learning, where the entire system is trained jointly, rather than relying on separate modules or pipelines. This enables the model to learn hierarchical representations and optimize the entire process, leading to improved performance and reduced error propagation across different stages.

Strong Performance on Unstructured Data: Deep learning has demonstrated remarkable success in handling unstructured data types, such as images, text, and speech. Convolutional Neural Networks (CNNs) excel in image analysis tasks, Recurrent Neural Networks (RNNs) are effective for sequential data analysis, and transformer models have achieved outstanding results in natural language processing. Deep learning techniques have pushed the boundaries of performance in these domains, leading to state-of-the-art results.

Continuous Improvement with More Data: Deep learning models often benefit from larger datasets, as they can extract more diverse and representative patterns. With more data, deep learning models tend to keep improving, whereas the performance of many traditional machine learning algorithms tends to plateau once the dataset reaches a certain size. This characteristic makes deep learning well suited to leveraging the ever-growing amounts of available data.

While deep learning offers significant advantages, it’s important to consider computational requirements, the need for large labeled datasets, and potential challenges in model interpretability and explainability. Nonetheless, these advantages have fueled the adoption of deep learning in a wide range of applications, including computer vision, natural language processing, speech recognition, and recommendation systems, among others.

Types Of Deep Learning

Deep learning can be applied within several learning paradigms. The three main ones offer different approaches to training deep neural networks and let them learn from data in different ways. Each has its own strengths and applications, and they can also be combined to take advantage of their complementary benefits in complex learning scenarios:

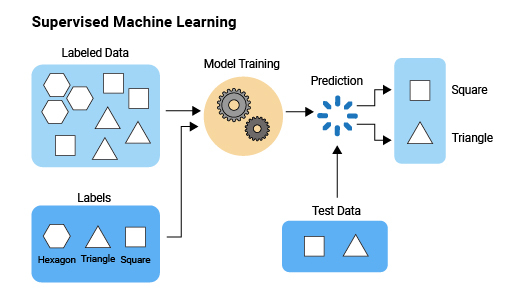

Supervised Learning

Supervised learning is the most common and best-studied paradigm in deep learning. In supervised learning, the model is trained using labeled data, where each input sample is associated with a corresponding target label. The deep neural network learns to map the input data to the desired output labels by minimizing the discrepancy between its predicted outputs and the true labels. Supervised learning is widely used for tasks such as image classification, object detection, speech recognition, and language translation.
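The "discrepancy" that supervised training minimizes is measured by a loss function. As an illustration (the choice of binary cross-entropy and the sample numbers are assumptions), the sketch below scores two sets of predicted probabilities against the same labels; confident, correct predictions receive the lower loss.

```python
import math

def binary_cross_entropy(preds, labels, eps=1e-12):
    """Average discrepancy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(preds, labels):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)

labels = [1, 0, 1, 1]            # hypothetical ground-truth labels
good = [0.9, 0.1, 0.8, 0.95]     # predictions close to the labels
bad = [0.4, 0.6, 0.3, 0.5]       # predictions far from the labels
print(binary_cross_entropy(good, labels), binary_cross_entropy(bad, labels))
```

Training repeatedly nudges the model's parameters in the direction that lowers this number across the labeled examples.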

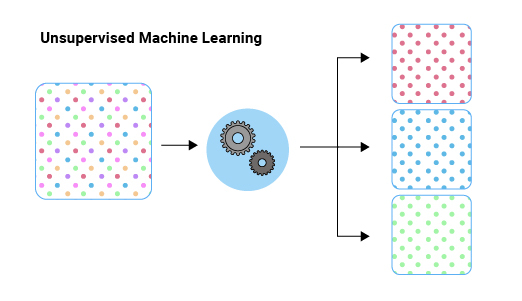

Unsupervised Learning

Unsupervised learning involves training deep learning models on unlabeled data, without explicit target labels. The objective of unsupervised learning is to discover hidden structures, patterns, or representations in the data. Deep neural networks in unsupervised learning can learn from the inherent structure or distribution of the data, such as clustering similar data points or learning latent representations of the input. Unsupervised learning techniques, such as autoencoders and generative adversarial networks (GANs), have been used for tasks like anomaly detection, data compression, and generating synthetic data.
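Clustering is one simple way to see what discovering structure without labels means. The sketch below uses k-means, a classical clustering algorithm rather than a neural network, on a hypothetical one-dimensional dataset; deep unsupervised methods such as autoencoders pursue the same goal with learned representations.

```python
import random

def kmeans_1d(points, k, iters=20, seed=0):
    """Group unlabeled numbers into k clusters (Lloyd's k-means algorithm)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iters):
        # Assignment step: each point joins its nearest center
        clusters = [[] for _ in range(k)]
        for x in points:
            nearest = min(range(k), key=lambda c: abs(x - centers[c]))
            clusters[nearest].append(x)
        # Update step: move each center to the mean of its points (keep it if empty)
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return sorted(centers)

data = [1.0, 1.2, 0.8, 1.1, 9.0, 9.3, 8.7, 9.1]  # hypothetical data, no labels attached
print(kmeans_1d(data, k=2))
```

With these two well-separated groups, the centers settle at the mean of each group (about 1.03 and 9.03) even though no point was ever labeled.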

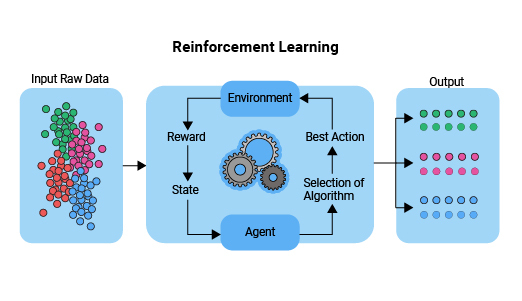

Reinforcement Learning

Reinforcement learning is a learning paradigm in which an agent interacts with an environment to learn optimal behavior through trial and error. The agent receives feedback in the form of rewards or penalties based on its actions, and the goal is to maximize cumulative rewards over time. Deep reinforcement learning combines deep neural networks with reinforcement learning algorithms, enabling the agent to learn complex strategies and policies. Reinforcement learning has achieved remarkable success in tasks such as playing complex games, robotics control, and autonomous navigation.
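The reward-driven trial-and-error loop can be illustrated with tabular Q-learning on a hypothetical five-cell corridor where the agent is rewarded only for reaching the right end. This sketch stores action values in a table rather than a neural network; deep reinforcement learning methods such as DQN replace that table with a network that estimates the same values from raw inputs. The environment, learning rate, discount factor, and exploration rate are assumptions chosen for illustration.

```python
import random

N_STATES = 5          # hypothetical corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Toy environment: reward 1 for reaching the goal, 0 otherwise."""
    nxt = min(max(state + action, 0), N_STATES - 1)  # walls at both ends
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action_index]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Epsilon-greedy: explore sometimes (or on ties), otherwise exploit
            if rng.random() < epsilon or Q[state][0] == Q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if Q[state][0] > Q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted best future value
            target = reward if done else reward + gamma * max(Q[nxt])
            Q[state][a] += alpha * (target - Q[state][a])
            state, steps = nxt, steps + 1
    return Q

Q = q_learning()
policy = ["right" if q[1] > q[0] else "left" for q in Q[:-1]]
print(policy)  # greedy action in each non-goal cell
```

After training, the greedy policy should choose "right" in every cell, because discounting makes the shortest path to the reward the most valuable.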

Components Of A Deep Learning System

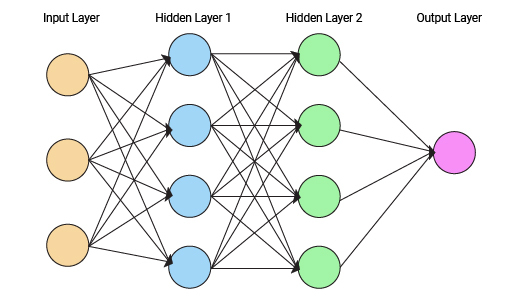

Components of a Deep Learning System refer to the fundamental building blocks that make up a deep learning model or architecture. These components work together to process and learn from data, enabling the system to make predictions, recognize patterns, and perform various tasks. Here are the key components:

Input Layer

The input layer is the first layer of a deep learning network. It receives the raw data, converted into numerical form (for example, pixel intensities or encoded words), and passes it on to the first hidden layer. It serves as the interface between the external environment and the network. Its size matches the dimensions of the input data (for example, one unit per pixel), and its primary function is to start the flow of information, enabling the network to learn meaningful representations as the data propagates through the hidden layers.
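In practice, raw data is usually converted into numbers before it reaches the input layer. The sketch below shows two common encodings under assumed inputs: scaling 8-bit pixel values to [0, 1] and one-hot encoding a categorical feature. The record and the color categories are hypothetical.

```python
def one_hot(index, size):
    """Encode a category index as a vector with a single 1."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec

def scale_pixels(pixels):
    """Map 8-bit grayscale values (0-255) into the range [0, 1]."""
    return [p / 255.0 for p in pixels]

# Hypothetical raw record: a 2x2 grayscale image plus a color category
raw_pixels = [0, 128, 255, 64]
colors = ["red", "green", "blue"]
input_vector = scale_pixels(raw_pixels) + one_hot(colors.index("green"), len(colors))
print(len(input_vector))  # number of units the input layer would need
```

Here the combined vector has 7 values (4 pixels plus 3 categories), so the input layer would have 7 units.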

Hidden Layer

Hidden layers are intermediate layers in a deep learning network that process and transform the input data received from the input layer. These layers play a critical role in capturing and learning intricate patterns and representations within the data. Each hidden layer consists of numerous nodes, or artificial neurons, that collectively perform computations on the input data using weighted connections.

The number of hidden layers (the network’s depth) and the number of neurons in each (its width) determine the network’s capacity to extract increasingly complex and abstract features from the input. As data passes through the hidden layers, each layer builds on the output of the previous one, so early layers tend to capture simple features while later layers combine them into more abstract ones. This hierarchy of representations lets the network detect relevant patterns and model the data from different perspectives, allowing for more sophisticated and accurate predictions or classifications as the information flows toward the output layer.
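Each hidden-layer neuron computes a weighted sum of its inputs, adds a bias, and applies an activation function; stacking layers feeds one layer’s output into the next. The weights below are hand-picked hypothetical values (a trained network would learn them), and ReLU is assumed as the activation.

```python
def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def relu(z):
    return max(0.0, z)

x = [1.0, 2.0]  # values arriving from the input layer
# Two stacked hidden layers with hypothetical weights (3 neurons, then 2 neurons)
h1 = dense(x, [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]], [0.0, -1.0, 0.5], relu)
h2 = dense(h1, [[1.0, 1.0, 1.0], [-1.0, 0.5, 2.0]], [0.0, 0.0], relu)
print(h1, h2)
```

Here h1 is [0.0, 2.0, 0.5] (ReLU zeroes the first neuron’s negative sum) and h2 is [2.5, 2.0], showing how the second layer works only on the first layer’s outputs.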

Output Layer

The output layer is the final component of a deep learning network that produces the desired output or prediction based on the processed information from the preceding layers. The structure and configuration of the output layer depend on the specific task at hand. For instance, in binary classification tasks, the output layer may consist of a single node that represents the probability or decision of one class versus the other.

In multi-class classification tasks, the output layer may have multiple nodes, with each node representing a different class. The output layer produces the final result or prediction based on the learned representations and patterns extracted from the input data by the hidden layers. It serves as the conclusion or output of the deep learning network, providing the desired output format, whether it is a categorical label, a numerical value, or any other relevant output based on the task being performed.
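The output layer’s activation is usually chosen to match the task. As a sketch (function names and scores are illustrative), a single sigmoid unit suits binary classification, while a softmax over several units suits multi-class classification; for regression, the output node typically applies no activation at all.

```python
import math

def sigmoid(z):
    """Binary classification: squash one score into a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Multi-class classification: turn one score per class into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0))                    # 0.5: the model is undecided
probs = softmax([2.0, 1.0, 0.1])       # hypothetical scores for three classes
print(probs, probs.index(max(probs)))  # predicted class = highest probability
```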

Neural Network Models

A neural network model is a type of machine learning model loosely inspired by the structure and functioning of the human brain. It consists of interconnected artificial neurons organized into layers, including an input layer, one or more hidden layers, and an output layer. Each neuron receives input data, processes it through weighted connections, applies an activation function, and produces an output. By adjusting the weights and biases during training, the model learns to make predictions, recognize patterns, and perform complex tasks like image and speech recognition and natural language processing. Neural network models have proven highly effective in deep learning, enabling breakthroughs in various domains and driving advances in artificial intelligence.

