
The Mind the Graph science blog is meant to help scientists learn to communicate science in an uncomplicated way.

If you’re working on a research article, thesis chapter, grant proposal, or even a class essay, you already know how essential originality is. Journals, universities, and conference committees are more vigilant than ever about plagiarism, and even accidental similarities can slow down publication or raise uncomfortable questions. Plagiarism checkers are popular, but some focus on web‑based matches; others tap into scholarly databases, […]

Brilliant research can lose impact for a surprisingly simple reason: poor visual communication.

Reviewers don’t have time to decode confusing diagrams. Readers won’t struggle through tiny labels and inconsistent layouts. And when your figures don’t communicate clearly, your science loses impact, no matter how strong it is.

Editors and reviewers frequently flag issues such as overcrowded figures, inconsistent formatting, and unclear diagrams during peer review. In fact, guidelines from major publishers like Elsevier and Springer consistently emphasize figure clarity, resolution, and readability as critical submission requirements.

The reality is this: researchers are trained to generate data, not necessarily to design visuals. Yet figures are often the first (and sometimes the only) part of a paper that readers examine.

The good news? Most research visualization mistakes are easy to fix once you know what to look for. In this article, we break down the most common design mistakes researchers make and how to correct them quickly.

Multiple panels, tiny labels, dense annotations, long legends: when a figure carries too much information, it becomes difficult to read.

According to research on cognitive load theory (Sweller, 1988), when visuals are cluttered or poorly structured, they increase mental effort, making it harder for readers to interpret information accurately. In academic publishing, this translates to reviewer fatigue and misinterpretation.

The Fix: One Clear Message Per Figure

Each figure should communicate one main idea.

If you’re combining workflow, results, and conclusions in a single visual, consider splitting them. Use whitespace to improve readability, and remove non-essential elements.

Structured templates and scientifically accurate illustrations like those available in Mind the Graph can help you organize complex information without overcrowding your design.

Clear figures don’t oversimplify your research. They amplify it.

Another common mistake researchers make is designing figures where everything looks equally important: the same font size, the same color weight, the same spacing, and no focal point.

When there’s no visual hierarchy, readers don’t know where to look first. Their eyes wander. The main finding gets lost inside supporting details.

Research in information design and visual perception shows that people scan visuals in patterns. If you don’t guide attention intentionally, the brain works harder to prioritize information on its own.

The Fix: Guide the Reader’s Eye

Every figure should have a clear entry point.

Use:

Ask yourself: If someone sees this for five seconds, what stands out first?

Many researchers rely on generic icons, copied images, or simplified graphics pulled from the internet. Sometimes the proportions are wrong. Sometimes the biology isn’t accurate. Sometimes the style just doesn’t match the rest of the paper.

It may seem minor, but inaccurate or inconsistent visuals can reduce credibility. In scientific communication, visual precision matters.

The Fix: Use Scientifically Accurate Illustrations

Your figures should reflect the same rigor as your data. Use visuals that are scientifically correct, stylistically consistent, and appropriate for academic publishing. Instead of stretching PowerPoint shapes or repurposing random images, work with illustration libraries built specifically for research.

Mind the Graph offers thousands of peer-reviewed, scientifically accurate illustrations across disciplines, making it easier to create visuals that are both clear and credible.

Different font sizes in every figure, shifting color palettes, one diagram in flat design and another in 3D, misaligned panels, uneven spacing: individually, these may seem small. Together, they make your manuscript feel unpolished.

Consistency builds trust. When your figures follow a coherent visual style, they look intentional and professional.

The Fix: Create a Simple Visual System

Decide on:

You don’t need to be a designer. You just need consistency.

You’ve finalized your figure and it looks great on your screen. Then comes submission and suddenly there’s a problem.

Wrong resolution. Incorrect dimensions. RGB instead of CMYK. Text too small. File size too large. Most journals have strict technical requirements for figures, and ignoring them can delay review or lead to immediate revision requests. According to major publishers like Elsevier and Wiley, figure quality and formatting are among the most common technical issues flagged during submission.

The Fix: Design With Submission in Mind

Before finalizing any figure, check:

Designing with these constraints early saves time later. A figure that meets technical standards from the start reduces friction during submission and keeps the focus where it belongs: on your research.
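
If you export figures from a script, some of these checks can be automated. The Python sketch below is illustrative only: `FigureSpec`, `JournalRules`, and `check_figure` are hypothetical names, and the default limits (85 mm column width, 300 dpi, CMYK, 6 pt minimum text, 10 MB) are placeholders, not any journal's actual requirements. It also shows the key arithmetic: the pixel width a figure needs is its printed width in inches (millimetres divided by 25.4) multiplied by the required dpi.

```python
import math
from dataclasses import dataclass

MM_PER_INCH = 25.4


@dataclass
class FigureSpec:
    """Properties of an exported figure (hypothetical; read them from your file)."""
    width_px: int
    color_mode: str          # e.g. "RGB" or "CMYK"
    smallest_font_pt: float  # smallest text size at final print size
    file_size_mb: float


@dataclass
class JournalRules:
    """Placeholder limits; replace with your target journal's author guidelines."""
    column_width_mm: float = 85.0
    min_dpi: int = 300
    color_mode: str = "CMYK"  # many journals accept RGB; check the guide
    min_font_pt: float = 6.0
    max_file_size_mb: float = 10.0


def required_width_px(width_mm: float, dpi: int) -> int:
    """Pixels needed to print `width_mm` millimetres at `dpi` dots per inch."""
    return math.ceil(width_mm / MM_PER_INCH * dpi)


def check_figure(fig: FigureSpec, rules: JournalRules) -> list[str]:
    """Return human-readable problems; an empty list means the figure passes."""
    problems = []
    needed = required_width_px(rules.column_width_mm, rules.min_dpi)
    if fig.width_px < needed:
        problems.append(f"width {fig.width_px}px is below {needed}px "
                        f"({rules.column_width_mm} mm at {rules.min_dpi} dpi)")
    if fig.color_mode != rules.color_mode:
        problems.append(f"color mode {fig.color_mode}, expected {rules.color_mode}")
    if fig.smallest_font_pt < rules.min_font_pt:
        problems.append(f"smallest text {fig.smallest_font_pt} pt is below {rules.min_font_pt} pt")
    if fig.file_size_mb > rules.max_file_size_mb:
        problems.append(f"file size {fig.file_size_mb} MB exceeds {rules.max_file_size_mb} MB")
    return problems


if __name__ == "__main__":
    draft = FigureSpec(width_px=800, color_mode="RGB", smallest_font_pt=5.0, file_size_mb=2.4)
    for issue in check_figure(draft, JournalRules()):
        print("-", issue)
```

Swap in the numbers from your target journal's author guidelines; the point is to catch a too-small export before submission rather than at the revision stage.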

It’s easy to assume your audience knows what you know. So abbreviations go unexplained, complex pathways skip intermediate steps, labels feel “obvious,” and legends are minimal.

But not every reviewer is a narrow specialist. And not every reader shares your exact research background. When figures rely too heavily on assumed knowledge, they limit accessibility and potentially reduce citations across disciplines.

The Fix: Design for Clarity, Not Assumption

Ask yourself: Would someone adjacent to my field understand this?

Clear labeling, simple visual grouping, and short explanatory cues make a big difference. The goal isn’t to oversimplify your science. It’s to make it understandable without forcing the reader to decode it.

Figures often become a “final step” task. You finish the experiments, write the manuscript, analyze the data, and then, right before submission, you quickly assemble the visuals.

The result? Rushed layouts, inconsistent formatting, and design decisions made under deadline pressure.

When figures are treated as an afterthought, they rarely reach their full communicative potential.

The Fix: Integrate Visual Thinking Early

Start sketching figures while analyzing results, not after writing the discussion. Early visual planning helps you identify the core message sooner, remove unnecessary complexity, and structure your narrative more clearly.

When figures evolve alongside your research, they become sharper and more intentional.

In research, clarity is influence. You can run rigorous experiments, generate meaningful data, and write a solid manuscript, but if your figures are confusing, your message weakens. Reviewers hesitate, and readers move on.

The good part? Most of these visual design mistakes are easy to fix. They don’t require artistic talent; they require intention.

When you treat figures as part of your scientific argument, not as decoration or a last-minute task, you elevate the entire paper. Clear visuals reduce cognitive load, improve understanding, and make your findings easier to remember and cite.

If designing figures still feels overwhelming, you’re not alone. Most researchers were never trained in visual communication. That’s exactly why Mind the Graph exists: to make scientific illustration simpler, faster, and aligned with academic standards.

Mind the Graph is an easy-to-use visualization platform for researchers and scientists that enables fast creation of precise publication-ready graphical abstracts, infographics, posters, and slides. With 75,000+ scientifically accurate illustrations made by experts and hundreds of templates across 80+ major research fields, you can produce polished visuals in minutes — no design skills required.

Ready to end the struggle of wading through endless PDFs, making sense of scattered notes, and dealing with slow progress on literature reviews? Join us for a free webinar led by academic expert Ilya Shabanov, who will share a faster, more structured way to read, analyze, and synthesize research using AI responsibly. You’ll also learn how Paperpal’s new Multi-PDF Chat feature, along with its research and writing tools, helps you craft clear, powerful literature reviews without compromising academic rigor.

Date: Thursday, February 12, 2026

Time: 1:00 PM EST | 11:30 PM IST

Duration: 1 hour

Language: English

In this engaging session, you will learn how to:

This session is ideal for anyone looking to streamline literature reviews with responsible AI workflows. You’ll also have the opportunity to get your questions answered live and receive a certificate of attendance.

Ilya Shabanov

Founder, The Effortless Academic

Ilya Shabanov is the creator of The Effortless Academic, a platform dedicated to helping academics excel with digital knowledge management, note-taking, and the many online tools available to researchers today (but known to few).

He is not a traditional academic and has spent over 10 years freelancing and co-founding the brain-training company NeuroNation, before switching to academia and starting a PhD in computational ecology. Drawing on his experience with knowledge management as a CEO and an entrepreneurial career, he founded The Effortless Academic as a way to share practical insights, tutorials, and courses designed to make scholarship more effective and less stressful for academics at all stages of their careers.

Don’t miss this chance to level up your literature reading and review process. Register for free today!


Hyper-realistic AI-generated content, including fabricated text and images, now makes up a considerable share of flagged academic submissions. So the buzz around, and search for, the best AI detectors is hardly surprising. The implications of fabricated studies with synthetic data, hallucinated citations, and cooked-up images and visuals are dangerous and far-reaching.

As challenges from new tech arise, so do tailored remedies to restore balance. AI tools have the power to detect plagiarism, image manipulation, fabricated data, and other ethical concerns, including paper mills. Plagiarism checkers and AI checkers are therefore essential for maintaining scholarly standards, especially as submission volumes continue to grow. To avoid the proliferation of deceptive content in scholarly work, educators, researchers, and journal editors will want to use only the most reliable and best AI checkers available. At the same time, users have so many questions: How does an AI detector work? How accurate are AI detectors? And of course, which are the best AI content detectors out there?

The plethora of AI detection tools is sure to give anyone a case of analysis paralysis! Here is a handy table that can help compare the 7 best AI detectors at a glance (Table 1).

Table 1: Best AI Checkers Compared

| Tool | Primary purpose | Target audience | Key features | Claimed accuracy* | Pricing |
| --- | --- | --- | --- | --- | --- |
| Paperpal | Best AI detector for academic integrity | Students, researchers, & publishers | Trained on scholarly text; 3-band probability scale; sentence-level insights | 95%+ (specific to academic text) | Free to start: 5 AI-detection scans/day (up to 1,200 words per scan); free for 7,500 words weekly. Prime: $25/month (unlocks AI detection, plagiarism, writing, grammar, research and citation, submission readiness checks, and more) |
| GPTZero | Educational & general text detection | Educators, schools, & casual users | “Human-in-the-loop” writing reports, Chrome extension, LMS integration | 99% (on raw AI text) | Free (up to 10k words/month); Essential: $10/month for 150k words; Premium: $16/month, adds plagiarism scanning |
| ZeroGPT | Lightweight, fast text analysis | Bloggers, freelance writers, & SEO | DeepAnalyze tech, multilingual support | 98%+ (claimed) | Free (up to 15k characters); Pro: $7.99/month for 100k characters per scan and batch processing |
| Copyleaks | Corporate compliance | Enterprises, law firms, etc. | Detects AI in 30+ languages, advanced data security | 99.1% | Free (trial); Personal plan: $13.99/month for 25,000 words per month |
| Originality.AI | Web content & SEO safety | Content agencies, web publishers | Real-time fact-checker | 99% (with <1% false positives) | Pay-as-you-go: $30 for 3,000 credits; Subscription: $12.95/month for monthly credit allowances |
| Truthscan | Multimodal fraud prevention | Government, large enterprises | Detects AI in images, video, and voice | 99%+ (forensic level) | Free trial (limited characters); Pro: $49/month |
| SynthID | Watermarking | Developers & media organizations | Detects watermarks at the pixel/token level | Near 100% (for Google models) | Free (for developers/researchers, via waitlist/portal) |

An AI detector is a tool designed to determine whether a piece of content (text, images, video, or voice) was generated by AI or created organically by a human. These tools use sophisticated algorithms to identify certain telltale signs and inconsistencies that are characteristic of synthetic generation.

The main goal of such tools is to ensure authenticity and uphold integrity. In academic research, reliable AI detectors can verify manuscripts, theses, and data visuals for originality before peer review or publication, helping editors flag fabricated content while minimizing false positives.

Text detectors analyze writing style through mathematical metrics to see whether it mirrors the predictable nature of LLMs. The first metric is perplexity, a measure of how predictable the wording is. AI models are designed to “predict” the next most likely word, which often results in highly predictable text, that is, text with low perplexity. The second metric is “burstiness,” or variation in sentence length and structure. Natural human writing is “bursty,” with a mix of long, complex sentences and short ones (think one- or two-word sentences!). AI-generated text tends to have sentences of uniform length with a consistent flow. In fact, if you read AI-generated text aloud, it tends to drone on in a flat rhythm.
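
To make these two signals concrete, here is a minimal Python sketch. It assumes you already have a probability for each token (real detectors obtain these from a language model; the numbers below are made up), computes perplexity as the exponential of the average negative log-probability, and measures burstiness as the coefficient of variation of sentence length (standard deviation divided by mean). This is one simple proxy, not how Paperpal, GPTZero, or any other specific tool computes its scores.

```python
import math
import re
import statistics


def perplexity(token_probs: list[float]) -> float:
    """exp(mean negative log-probability): lower means more predictable text."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_neg_log)


def sentence_lengths(text: str) -> list[int]:
    """Word count per sentence, splitting naively on runs of '.', '!' and '?'."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 = perfectly uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The model reads the text. The model scores each word. The model returns a value."
bursty = "Stop. The reviewers read every figure twice before they made a decision on the paper. Why?"

print(f"burstiness: uniform={burstiness(uniform):.2f}, bursty={burstiness(bursty):.2f}")

# Made-up per-token probabilities; a real detector would get these from a language model.
print(f"perplexity: predictable={perplexity([0.9, 0.8, 0.95]):.2f}, "
      f"surprising={perplexity([0.2, 0.05, 0.3]):.2f}")
```

In this simplified view, lower perplexity and lower burstiness both push a text toward “machine-like,” which is also why formal, evenly paced human prose can be misread.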

AI image detectors work by identifying statistical patterns and visual inconsistencies that distinguish artificial content from human-created work. They use sophisticated algorithms to find “telltale signs” of synthetic generation that are often invisible to the human eye, e.g., pixel anomalies and a lack of natural “noise.” Such tools are also good at “clone detection,” which identifies whether parts of an image have been duplicated from other online sources.
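
Clone detection is easiest to picture with a toy example. The sketch below is a naive copy-move check within a single image: it slides a small k×k window over a grayscale pixel grid (a plain list of lists here, standing in for real image data) and reports any non-flat block whose exact pixel values appear at more than one position. Production forensic tools match robust features rather than exact values and also compare against external sources, so treat this as an illustration of the idea only; `find_duplicate_blocks` is a hypothetical helper.

```python
from collections import defaultdict

Image = list[list[int]]  # grayscale rows; a stand-in for real image data


def find_duplicate_blocks(img: Image, k: int = 2) -> list[list[tuple[int, int]]]:
    """Return groups of (row, col) positions whose k x k pixel blocks are identical.

    Flat blocks (all pixels equal) are skipped because they match trivially,
    e.g. in plain backgrounds.
    """
    height, width = len(img), len(img[0])
    positions = defaultdict(list)
    for y in range(height - k + 1):
        for x in range(width - k + 1):
            block = tuple(tuple(img[y + dy][x:x + k]) for dy in range(k))
            if len({value for row in block for value in row}) == 1:
                continue  # flat block: ignore
            positions[block].append((y, x))
    return [locs for locs in positions.values() if len(locs) > 1]


# A tiny image in which the 2x2 patch [[1, 2], [3, 4]] appears twice.
image = [
    [1, 2, 0, 0, 0],
    [3, 4, 0, 1, 2],
    [0, 0, 0, 3, 4],
]
print(find_duplicate_blocks(image))  # → [[(0, 0), (1, 3)]]
```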

AI text checkers are highly accurate (95%–99%) at detecting pure AI text or “lazy” AI use (in other words, copy-pasted AI text). With human-edited or hybrid text, however, detection accuracy drops to 80%–90%. Reliability is also lower for text written by non-native English speakers. This is because non-native writing often follows simple, “predictable” patterns, i.e., low perplexity and burstiness, which AI detectors can mistake for machine output.

AI detectors flag text that follows a highly logical, structured pattern, a quality often found in certain types of human writing. In 2023, when researchers ran the U.S. Constitution through popular detectors like GPTZero and ZeroGPT, it was flagged as 92% to 97% AI-generated! Excerpts from the Bible have frequently returned high AI scores, often exceeding 88%. What’s more, many professional content writers have found that work they wrote well before ChatGPT came on the scene was flagged as AI! The reasons include overlap with the models’ training data and a formal structure with naturally lower perplexity and burstiness.

The best AI checkers, like Paperpal, use perplexity and burstiness metrics and reach high (99%+) accuracy on benchmarks such as the Human ChatGPT Comparison Corpus (HC3). However, they cannot identify intellectual ownership or original ideas; they only assess the final output. And because AI generators are constantly evolving, AI detectors must be retrained frequently to stay effective against newer models. Paperpal, for example, retrains its cross-verifying models every 90 days to ensure accuracy, reducing false positives by over 40%.


With AI content detection, things are not really black and white. By 2026, the market for AI detection has shifted from simple “human vs. machine” scores to sophisticated, specialized tools. Here are the 7 most accurate detectors for content and images, categorized by their specific strengths.

Unlike general AI detectors, Paperpal AI Detector doesn’t provide a blanket document score. Instead, it gives a contextual view of how AI may have influenced a piece of text, with sentence-level scoring that distinguishes between AI-written, AI-human blended, and human-written text. Because Paperpal understands academic writing patterns, it doesn’t flag the structured text common in academic writing, which puts it at the top of the best AI detectors for academic content.

GPTZero.me is highly sensitive to ChatGPT and Claude outputs, making it a reliable text specialist. Its perplexity-based scans flag pure GPT outputs with a high accuracy of 98%, but it falters when it comes to hybrid text (82% accuracy).

ZeroGPT is a simple, no-frills AI detector for instant scans. It detects 90% of unedited AI text with no signup required, making it good for quick, preliminary checks. It is known for its aggressive DeepAnalyze algorithm.

Copyleaks is highly accurate for non-English text (30+ languages) and source code. It even integrates directly into learning management systems (LMSs) such as Canvas and Moodle.

Originality.AI includes a “Fact Checker” and a “Readability” score alongside AI detection, allowing it to check for fabricated claims. It also detects content from the latest models, such as GPT-4o and Gemini Pro.

Truthscan is a multimodal detector designed to spot “deep” synthetic content. It is one of the few platforms that detects AI in images, voice, and video in addition to text. A veritable deepfake catcher, it is one of the best AI detection tools for fraud prevention and media verification.

SynthID (by Google DeepMind) looks for invisible digital watermarks embedded at the pixel or token level by Google’s own models (Gemini, Imagen, Veo). It is now available via a dedicated portal.
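
Token-level watermark detection boils down to a statistical test. SynthID’s own method differs and is not reproduced here; the Python sketch below instead follows the publicly described “green list” scheme of Kirchenbauer et al. (2023), in which a watermarking generator favors tokens that a hash of the previous token (plus a secret key) marks as “green.” A detector re-derives that label for each token, counts the green hits g out of T tokens, and computes z = (g − γT) / √(Tγ(1 − γ)), where γ is the green fraction expected by chance. `SECRET_KEY`, the SHA-256 construction, and `GAMMA = 0.5` are illustrative assumptions.

```python
import hashlib
import math

SECRET_KEY = "demo-key"  # hypothetical; a real scheme keeps its key private
GAMMA = 0.5              # assumed fraction of tokens that are "green" at each step


def is_green(prev_token: str, token: str, gamma: float = GAMMA) -> bool:
    """Pseudo-randomly place `token` on the green list, seeded by the previous token."""
    digest = hashlib.sha256(f"{SECRET_KEY}|{prev_token}|{token}".encode()).digest()
    value = int.from_bytes(digest[:8], "big")  # integer in [0, 2**64)
    return value < gamma * 2**64


def z_score(green: int, total: int, gamma: float = GAMMA) -> float:
    """Standard deviations by which the green count exceeds chance."""
    expected = gamma * total
    std = math.sqrt(total * gamma * (1 - gamma))
    return (green - expected) / std


def detect(tokens: list[str], gamma: float = GAMMA) -> float:
    """z-score for a token sequence; large positive values suggest a watermark."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two tokens")
    green = sum(is_green(prev, tok, gamma) for prev, tok in pairs)
    return z_score(green, len(pairs), gamma)


print(z_score(75, 100))  # 75 green out of 100 when 50 are expected; prints 5.0
print(detect("the cat sat on the mat".split()))
```

Unwatermarked text should hover around z ≈ 0, while a long watermarked passage pushes z far above zero; that is why such detectors can be near-certain for their own models and say nothing about anyone else’s.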

Not all AI detectors are built the same, and choosing the right one depends on what you’re checking. Whether it’s a research manuscript, a conference poster, or a set of figures, the type of content you’re working with determines which AI detection tool will serve you best.

For text-heavy academic work, you need an AI detector that understands the nuances of scholarly writing and doesn’t penalize legitimate editing. Paperpal is the premier choice among cost-effective, reliable AI detectors for academic writing because it is tailored for academic rigor: its three-tier scoring (human, blended, AI) minimizes false positives on edited drafts.

For visuals, you need tools that can flag AI-generated images slipping into figures and presentations. AI checkers like SynthID can help here.

AI detection tools are great at detecting raw, unedited LLM outputs. However, these cannot be used as the sole evidence to rate a scholar’s work. A human review must complement this check so that legitimately edited or paraphrased content isn’t unfairly flagged.

Most journals now require that the use of LLMs be acknowledged. It is important to disclose AI use to uphold the integrity of the scientific record. Transparency allows readers to understand which parts of a study were synthesized by AI and which were the result of human analysis. By using reliable AI detectors such as Paperpal, authors can ensure their work remains compliant with these evolving standards.

While GPTZero and Originality.ai are popular for general use, Paperpal has emerged as a top choice among best AI writing detectors for students and publishers. Unlike generic tools, Paperpal is specifically trained on millions of scholarly manuscripts, making it better at distinguishing between academic formality and machine-generated patterns.

Yes, frequently. Even the best AI content detectors produce false positives, and the rates spike for content by non-native English speakers. While most checkers flag “ChatGPT-style” predictability, Paperpal works differently: it uses a three-band probability scale and sentence-level insights, helping you defend your original work rather than just providing a binary “human or AI” score.

AI image detectors identify visuals created by models like Midjourney or DALL-E. They work by conducting forensic analysis at the pixel level, looking for “digital fingerprints” such as unnatural noise patterns, lighting inconsistencies, or regularities that don’t occur in organic photography.

While plagiarism checkers compare your text against a database of existing work (to see if you copied someone else’s words), AI detectors analyze your writing style (predictability and rhythm) to predict if the words were generated by a machine, even if the content is technically “unique.”
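
The difference is easy to see in code. A plagiarism check is fundamentally a comparison: the simplified Python sketch below splits texts into overlapping three-word sequences (“shingles”) and scores a submission against each document in a reference collection using Jaccard similarity (shared shingles divided by all distinct shingles). Real plagiarism checkers use far larger databases and more robust matching, and `shingles` and `best_match` are hypothetical helpers. Note that nothing here looks at writing style, which is what an AI detector measures instead.

```python
import re


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping n-word sequences, lowercased, punctuation stripped."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared items divided by all distinct items (0.0 when both sets are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_match(submission: str, corpus: dict[str, str], n: int = 3) -> tuple[str, float]:
    """Return the corpus document most similar to `submission` and its score."""
    sub = shingles(submission, n)
    scores = {doc_id: jaccard(sub, shingles(text, n)) for doc_id, text in corpus.items()}
    doc_id = max(scores, key=scores.get)
    return doc_id, scores[doc_id]


corpus = {
    "paper_a": "The quick brown fox jumps over the lazy dog.",
    "paper_b": "Completely different words appear here today.",
}
print(best_match("The quick brown fox jumps over a sleeping cat.", corpus))  # → ('paper_a', 0.4)
```

A freshly generated AI paragraph would typically score near zero here, because its word sequences are new; that is exactly the gap AI detectors try to fill.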

At present, no detector is 100% accurate, including the best AI checkers. AI detectors should therefore be used as a starting point rather than the sole basis for disciplinary action. At best, AI scores can be treated as “smoke signals” that could encourage a student to disclose the extent of their AI use and/or review their drafts.
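
A little arithmetic shows why a flag should be treated as a smoke signal. The Python sketch below uses purely hypothetical numbers (1,000 submissions, 10% of them AI-generated, a detector that catches 95% of AI text and correctly clears 98% of human text); these are not measured figures for any tool, and `screening_outcomes` is a hypothetical helper. Even under these generous assumptions, human writers receive a meaningful share of the flags.

```python
def screening_outcomes(n_docs: int, ai_share: float,
                       sensitivity: float, specificity: float) -> dict[str, float]:
    """Expected detector outcomes for a batch of documents (all inputs hypothetical)."""
    ai_docs = n_docs * ai_share
    human_docs = n_docs - ai_docs
    true_pos = ai_docs * sensitivity            # AI texts correctly flagged
    false_pos = human_docs * (1 - specificity)  # human texts wrongly flagged
    flagged = true_pos + false_pos
    return {
        "flagged": flagged,
        "false_positives": false_pos,
        "share_of_flags_that_are_ai": true_pos / flagged if flagged else 0.0,
    }


result = screening_outcomes(1000, ai_share=0.10, sensitivity=0.95, specificity=0.98)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

With these inputs, about 113 submissions are flagged and roughly 18 of them are human-written, so about one flag in six points at an author who did nothing wrong. The lower the true share of AI text in a batch, the larger that fraction becomes.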

Several tools offer limited free scans; however, for those in research or academia, Paperpal provides one of the most accurate free AI detectors, allowing 5 daily checks with a generous word limit, and it is specifically tailored to handle complex, technical writing.

Simple spelling and punctuation fixes usually will not trigger a flag. However, using generative AI features (like “Rewrite this paragraph” or “Change tone to professional”) could increase the risk of a false positive.


Thousands of new papers appear daily across disciplines, each competing for a few seconds of attention from editors, reviewers, and readers. Traditional abstracts, no matter how well written, are often skimmed or skipped entirely.

A single, well-designed image is becoming the first read of a research paper, and journals increasingly favor papers with graphical abstracts. A 2016 study found that 47% of social science journals had already published at least one article with a graphical abstract.

A visual abstract is a concise, visual summary of a research paper that highlights its core message at a glance. It uses a combination of icons, illustrations, short labels, and structured layouts to communicate the study’s objective.

The practice of journals encouraging, and in some cases mandating, visual abstracts is expected to grow in the coming years. As more publishers adopt visual summaries as part of the submission process, it becomes important for researchers to get comfortable with simple, effective design approaches.

Looking to make your research more visible and accessible? With Mind the Graph, researchers can create clear, publication-ready visual abstracts using scientifically accurate illustrations without any design experience.


As AI becomes increasingly embedded in research and scholarly workflows, trust, transparency, and strong governance are more critical than ever. Cactus Communications (CACTUS), a leading technology company delivering AI-powered and expert-led solutions for the scholarly publishing ecosystem, has achieved ISO/IEC 42001:2023 certification for its Artificial Intelligence (AI) Management System, placing it among the early global adopters of this international standard. The certification confirms that CACTUS has established, implemented, and continues to maintain a robust AI governance framework aligned with global best practices.

ISO/IEC 42001:2023 sets an international benchmark for the responsible design, deployment, and management of AI systems. The standard emphasizes essential principles such as fairness, reliability, risk management, system oversight, and lifecycle governance, areas that are becoming increasingly vital as AI adoption accelerates across enterprises, institutions, and academia.

The certification applies across all CACTUS brands and solutions, including Paperpal, the AI writing and research assistant trusted by more than three million researchers worldwide. It validates that CACTUS operates a comprehensive, organization-wide AI governance framework aligned with international best practices and evolving regulatory expectations.

CACTUS’ AI Management System spans both expert services and AI-enabled product development, covering governance, legal, risk and compliance, responsible AI, engineering, product, and design functions. All processes operate in line with the controls defined under the ISO/IEC 42001:2023 Statement of Applicability, ensuring consistent oversight across the entire AI lifecycle from design and development to deployment and ongoing monitoring.

For researchers, institutions, and enterprise partners, this certification provides meaningful reassurance. It demonstrates that Paperpal’s AI is developed with strong safeguards for transparency, accountability, and risk management, while enabling continuous improvement as AI technologies and expectations continue to evolve.

Akhilesh Ayer, CEO, Cactus Communications, said, “Achieving ISO/IEC 42001:2023 reflects our commitment to building AI systems that stakeholders across academia, publishing, and research can trust. It reinforces our focus on delivering secure, accountable, and responsibly developed AI that users can rely on with confidence.”

“ISO/IEC 42001:2023 validates the discipline and safeguards that underpin our AI solutions—from how we govern data to how we evaluate, test, and monitor our models. As AI becomes increasingly central to research workflows, this certification assures our users and partners that our AI tools are built on strong foundations of safety, reliability, and responsible innovation,” Ayer added.

As AI continues to shape how research is written, reviewed, and shared, Paperpal’s ISO/IEC 42001:2023 certification further strengthens its position as one of the most rigorously governed AI writing tools available—built on a foundation of responsible innovation, safety, and trust.

Whether you’re preparing a manuscript, designing a conference poster, or sharing results on social platforms, the clarity and impact of your visuals influence how your work is perceived.

For many early-career researchers and PhD students, the challenge lies in converting complex data into clean, engaging graphics, especially without dedicated design skills or extra time. That’s where Mind the Graph steps in: a platform designed from the ground up for scientists, to turn your visual communication from “nice to have” into a strategic advantage.

Articles with well-designed graphical abstracts or infographics often receive more citations and stronger engagement. For a researcher or PhD student, this means a better chance their work gets noticed, remembered, and shared. However, templating, layout decisions, and scientific illustration sourcing often demand time and skill that you might prefer to spend on the science itself. Mind the Graph addresses that gap.

If you’re a researcher or PhD student serious about sharing your work effectively, Mind the Graph offers a powerful yet approachable solution. Sign up to Mind the Graph and explore the library, pick a template for your next project, customise it, and download it. Your science deserves to look as good as it is!


Report writing involves creating documents that communicate with readers about specific topics and present facts, findings, and recommendations from research. Report writing is commonly used in academic, research, and technical settings. A well-structured report is important to ensure clarity, accuracy, and effective communication of the findings.

In academia, report writing is essential for documenting details about research experiments, data analysis, and providing recommendations. Effective report writing requires clear, professional language and accurate information. Reports may need to be written in specific formats based on the guidelines provided and also need to be customized keeping the target audience in mind. For example, a budgetary report may provide the annual expenditures of the marketing department of a company and offer recommendations on how the expenditure can be reduced.1

This article provides details and steps about report writing and also shares tips on how to create well-structured reports.

Report writing is the process of presenting detailed information about a subject clearly and accurately in plain language. Report writing helps communicate facts, ideas, research methods, recommendations, and more to readers. Reports are useful across many fields, such as academia, science, government, and business.

Report writing follows a specific structure, usually including Introduction, Methods, Results, and Discussion sections (the IMRaD structure) and sometimes a separate Conclusion, to give readers a complete understanding of the subject. Reports can be of different lengths depending on the type of report, the requirements, and the target audience.

A good report has the following characteristics:

The following table describes the differences between reports and essays.2,3

| Characteristic | Report | Essay |
| --- | --- | --- |
| Table of contents | Present | Absent |
| Sections/structure | Divided into headed and numbered sections, following the IMRaD structure | No sections, only paragraphs |
| Method | Presents data that you have collected yourself through surveys and experiments. Includes a description of the methodology. | Usually analyzes or evaluates theories and past research. Does not refer to the methods used. |
| Visual aids | Contains tables, charts, and diagrams | Does not include these elements |
| Recommendations | May be present | Not present |
| Overall tone | Objective, formal, informative, fact-based | Analytical, critical, argumentative, idea-based |

The following table lists a few different types of report writing and also provides examples from the educational setting.2

| Type of report writing | Purpose | Example in education |
| --- | --- | --- |
| Informational | Present facts objectively without analysis or opinion; provide a summary of information and data on a particular topic | Report on school library usage trends over the past academic year |
| Analytical | Analyze data and provide interpretation or recommendations | Report analyzing the impact of blended learning on student performance |
| Research | Document the process, data, and findings of a research study | Research report on student attention spans during online vs. offline classes |
| Progress | Update stakeholders on the current status of a project | Monthly report tracking the rollout of digital whiteboards in classrooms |
| Technical | Explain technical details, systems, or procedures | Guide explaining how to use a Learning Management System like Moodle |
| Field | Describe observations made in the field | Trainee teacher’s report on student behavior during classroom observation |
| Proposal | Propose a plan or solution with reasons and benefits | Proposal suggesting a new elective course on financial literacy for high schoolers |

Report writing is an organized and structured process. Here are some of the main steps you could use to write your report.2

1. Identify the objective of the report and the target audience

Before writing a report, be clear about its objective and identify the target audience you are writing for. The audience could be the general public, academics, government, or any other specific group. Write the report with your audience’s level of expertise and requirements in mind, and include information that would be useful to them.

2. Conduct research to gather data

Use appropriate sources to collect data for your research. Ensure that the sources are credible and authentic and provide accurate data. Cite these sources correctly both in the text, where they are used, and in the reference list at the end of the report.

3. Create a structure to organize your report

Although most reports share a common structure, plan and customize your sections and headings based on the information you have and need to present to the audience. You could use typical sections such as Title page, Table of contents, Introduction, Methodology, Findings, Discussion, Conclusion, and References.

4. Write your report

Write each section of your report and ensure that you include all relevant information. For example, the Introduction should state the objective and provide background information that sets the context and helps readers understand your report. In the Results section, use visual aids such as graphs and tables to present data clearly. In the Conclusion, summarize your research, note any implications and potential for further research, and offer recommendations.

5. Proofread your report

Ensure that the language used is formal and free of any grammatical and typographical errors. Paperpal is an excellent tool to ensure consistent formatting and correct referencing. In addition, you could run Paperpal’s Plagiarism Check to ensure originality in your writing.

Report writing has a few essential elements and a structure common to most reports. The common report writing elements are listed below, along with a few examples:2

1. Title page: Title, author name, institution, date

Example: Baral, P., Larsen, M., & Archer, M. (2019). Does money grow on trees? Restoration financing in Southeast Asia. Atlantic Council. https://www.atlanticcouncil.org/in-depth-research-reports/report/does-money-grow-on-trees-restoring-financing-in-southeast-asia/

2. Table of contents: Provides an overview of the report’s contents and lists entries using section headings and page numbers for easy retrieval.

3. Executive summary (or Abstract): This is a concise overview of the entire report, summarizing only the key aspects.

Example: In 2024, the annual sales report for ABC Ltd. showed a 30% increase in overall sales compared with the previous year. Since the majority of customers were women aged 25 to 40 years, it is recommended that marketing efforts focus on this demographic next year.

4. Introduction: Provides the context and background of the research.

Example: The inclusion of experiential and competency-based learning has benefited electronics engineering education. Industry partnerships provide an excellent alternative for students wanting to engage in solving real-world challenges. Industry-academia participation has grown in recent years due to the need for skilled engineers with practical training and specialized expertise.

However, very few studies have considered using educational research methods for performance evaluation of competency-based higher engineering education. To remedy the current need for evaluating competencies in STEM fields and providing sustainable development goals in engineering education, in this study, a comparison was drawn between study groups without and with industry partners.

5. Methodology: Outlines the methods used for data collection, such as surveys, interviews, or experiments.

Example: The study randomly allocated the 350 resultant patients to the treatment or control groups, which included life skills development and career training in an in-house workshop setting. The 350 participants were assessed upon admission and again after they reached the 90-day employment requirement. The psychological functioning and self-esteem assessments revealed considerable evidence of the impact of treatment on both measures, including results that contradicted our original premise.

6. Results/Discussion/Analysis: Presents, discusses, and analyzes the key findings and data.

Example: A total of 5365 snakebites were reported to the CPCS from 1 September 1997 through 30 September 2017. All bites were reported from rattlesnakes. The majority of snakebite reports were called from health care facilities (4607, 85.9%) versus private residences (671, 12.6%), with the distribution of number of cases per county and incidence (number of cases per 1 million residents) shown in Figures 1 and 2. […] Males were significantly more likely than females to be injured (76.6%, 95% CI = 75.6–77.9%, p < .01) […]

7. Conclusion: Summarizes the main points, reiterates the report’s purpose, and suggests implications of the study.

Example: In conclusion, our study has shown that increased usage of social media is significantly associated with higher levels of anxiety and depression among adolescents. These findings highlight the importance of understanding the complex relationship between social media and mental health to develop effective interventions and support systems for this vulnerable population.

8. Recommendations: Offers feasible suggestions for practice and for future research.

Example: E-learning platform developers should focus on e-learning platforms that are interactive and cater to different learning styles. They can also invest in features that promote learner autonomy and self-directed learning.

Future researchers can further explore the long-term effects of e-learning on language acquisition to provide insights into whether e-learning can support sustained language development.

9. References: Cites all sources referred to in the research.

10. Appendices: Includes any supporting or additional information.

Report writing should use clear and concise language. Sentences are usually well structured and formal in tone, and paragraphs are typically shorter than those in an essay.

Here are a few points to keep in mind:4

Tips for Creating Strong Reports

Listed below are a few tips to help you write strong reports:

To summarize, report writing involves writing a structured and organized document with clear sections and headings using formal and transparent language. The purpose of report writing is to clearly communicate required details about a topic to a target audience and include recommendations for future research.

A report that uses credible sources, all correctly cited, shows readers that it is authoritative and trustworthy. A well-structured report that uses data effectively and formal language also helps build readers’ trust.

Consult any guidelines you have been provided with to see if any specific referencing format is required. Ensure that you are citing the sources at the appropriate place in the text and also in the reference list.

Although report writing is relatively straightforward because of its well-defined structure, even experienced report writers may inadvertently make mistakes. Common errors to avoid in report writing include the following:6

1. Unclear or vague objectives

In report writing, it is essential to ensure that the objective is clearly stated to help set the context for the readers.

2. Insufficient research

Inadequate research can leave a report without enough accurate data, which undermines its credibility because the arguments may not convince readers.

3. Poor organization and structure

An unstructured report can be difficult for readers to understand. Clear headings and paragraphs enhance clarity, and the information should be coherent and follow a logical flow.

4. Unclear communication of findings

Reports sometimes present findings without discussing them in enough detail. The significance of the findings and how they connect with the objectives should be clearly explained.

5. Skipping proofreading of the final report

However well a researcher writes a report, some grammatical and typographical errors may creep in. Proofreading the entire report before final submission can help eliminate such errors.

6. Ignoring the target audience

Reports are often written for a specific audience and should be adapted to that audience’s level of expertise. A report full of technical jargon may not have the intended effect on a less-experienced audience. Therefore, adapt the language and level of detail to the target audience.

Thus, report writing is an essential means for researchers and authors to communicate the details of their work to their target audience, and reports should therefore be written carefully and in a logical structure.

We hope this article has helped you understand how reports can be an effective tool for communicating details about a specific subject, helping readers understand the implications and scope for further research, and providing recommendations.

Mind the Graph is an easy-to-use visualization platform for researchers and scientists that enables fast creation of precise publication-ready graphical abstracts, infographics, posters, and slides. With 75,000+ scientifically accurate illustrations made by experts and hundreds of templates across 80+ major research fields, you can produce polished visuals in minutes — no design skills required.

References

1. Report writing: A step-by-step guide for professionals. Indeed. Updated November 27, 2025. Accessed December 11, 2025. https://uk.indeed.com/career-advice/career-development/report-writing

2. What is report writing: Format, types, and examples. Paperpal blog. Published July 16, 2025. Accessed December 12, 2025. https://paperpal.com/blog/academic-writing-guides/what-is-report-writing-format-types-examples

3. Reports and essays: Key differences. University of Portsmouth. Accessed December 17, 2025. https://www.port.ac.uk/sites/default/files/2022-10/Reports%20and%20Essays%20key%20differences.pdf

4. Report writing. Sheffield Hallam University. Accessed December 22, 2025. https://libguides.shu.ac.uk/reportwriting/language

5. Types of reports. LibreTexts. Accessed December 23, 2025. https://biz.libretexts.org/Courses/Chabot_College/Mastering_Business_Communication/06%3A_Business_Reports/6.01%3A_Types_of_Reports

6. Common mistakes in report writing: A guide to avoiding pitfalls. Reportql via LinkedIn. Published November 29, 2023. Accessed December 24, 2025. https://www.linkedin.com/pulse/common-mistakes-report-writing-guide-avoiding-pitfalls-%C3%A7elik-ksssf/

Scientific illustration has become a cornerstone of modern research communication. Clear, well-designed visuals help researchers explain complex concepts, improve comprehension, and present findings more effectively in journals, posters, and conferences. Studies show that academic papers incorporating more diagrams and visual content typically achieve higher impact than those relying on text alone. For researchers, PhD students, and other academic professionals, choosing the right scientific illustration tool can make the difference between a figure that merely looks good and one that simplifies complex data and clearly supports the research narrative while meeting stringent publication standards.

Whether you are creating a graphical abstract, a conference poster, or high-resolution figures for peer-reviewed journals, several factors matter when selecting the best scientific illustration tool. These include access to accurate scientific visuals and icons, vector-based export formats, ease of use, ready-made templates, customization options, and compatibility with existing research workflows.

In this blog, we compare the five best scientific illustration software tools: Mind the Graph, BioRender, Adobe Illustrator, Affinity Designer, and Inkscape, to help you choose the right solution for your research and visualization needs.

| Feature | Mind the Graph | BioRender | Adobe Illustrator | Affinity Designer | Inkscape |
| --- | --- | --- | --- | --- | --- |
| Ready-to-use scientific templates | ✅ | ✅ | ❌ | ❌ | ❌ |
| Specialized scientific icon library | ✅ | ✅ | ❌ | ❌ | ❌ |
| Graphical abstract support | ✅ | ✅ | ❌ | ❌ | ❌ |
| Scientific poster templates | ✅ | ✅ | ❌ | ❌ | ❌ |
| Drag-and-drop simplicity | ✅ | ✅ | ❌ | ❌ | ❌ |
| Cloud-based platform | ✅ | ✅ | ❌ | ❌ | ❌ |
| Team collaboration options | ✅ | ✅ | ❌ | ❌ | ❌ |
| Beginner-friendly learning curve | ✅ | ✅ | ❌ | ❌ | ❌ |
| Auto-alignment & visual formatting tools | ✅ | ✅ | ❌ | ❌ | ❌ |
| Freemium access for students | ✅ | ✅* | ❌ | ❌ | ✅ |
| Journal-ready export quality (PDF/PNG) | ✅ | ✅ | ✅ | ✅ | ✅ |
| Advanced image editing for custom illustrations | ✅ | ❌ | ✅ | ✅ | ✅ |

*Free plan available with export limitations.

Mind the Graph is an easy-to-use scientific illustration platform designed specifically for researchers, PhD students, and academic teams who need clean, accurate, and publication-ready visuals quickly. As an online tool, it is fully browser-based and intuitive, making it ideal for academics without formal design experience.

The platform offers an extensive library of professionally created scientific illustrations spanning 80+ research fields, including biology, medicine, chemistry, life sciences, and environmental sciences. It also offers 300+ ready-made templates for graphical abstracts, scientific posters, presentations, and infographics.

Mind the Graph: Key Features

Pros of Mind the Graph

Cons of Mind the Graph

BioRender is one of the most widely used scientific illustration tools in the life sciences. It is especially popular among biology and biomedical researchers who need polished, publication-ready figures without designing illustrations from scratch.

Although not as flexible as Adobe Illustrator for complex vector editing, BioRender is a great platform to help researchers create key pathway diagrams, experimental workflows, and research figures that maintain consistent visual standards, making it a popular choice among labs and academic teams.

BioRender: Key Features

Pros of BioRender

Cons of BioRender

Adobe Illustrator is a powerful vector graphics tool widely used for creating highly customized scientific figures, especially when researchers need full control over shapes, layers, typography, and precise adjustments for publication. Many academics use it to create complex diagrams, multi-panel figures, or fine-tuned edits for journal submission. This flexibility also makes it useful for crafting posters and conference visuals that follow submission guidelines, although, unlike template-driven platforms, it does not come with ready-made scientific poster templates.

Adobe Illustrator: Key Features

Pros of Adobe Illustrator

Cons of Adobe Illustrator

Affinity Designer is a powerful alternative to Illustrator for researchers who want advanced vector editing without paying for a subscription. Although it isn’t specifically built as scientific illustration software, it is frequently used by academics to produce precise, scalable diagrams for publication, scientific posters, and conference presentations. Its smooth workflow, offline availability, and flexible vector-raster workspace make it a strong option for researchers comfortable with design tools.

Affinity Designer: Key Features

Pros of Affinity Designer

Cons of Affinity Designer

Inkscape is a free, open-source vector editor widely used by academics who want full control over their research visuals, and it is often chosen by researchers looking for reliable scientific illustration software at no cost. While it isn’t designed specifically for scientific illustration, it can help with creating scalable diagrams, editing vector artwork, and producing figures suitable for journals and conferences.

Inkscape: Key Features

Pros of Inkscape

Cons of Inkscape

After comparing the leading tools, Mind the Graph stands out as the best scientific illustration software for most researchers because it is built specifically for scientific communication, not general design. It is ideal for researchers, PhD scholars, and academic teams who need quick publication-ready visuals without spending days learning complex design tools.

Mind the Graph goes beyond what other tools offer to better address researcher needs. By eliminating the friction between scientific thinking and visual communication, it empowers researchers to focus on the content of their work, while still producing accurate figures and illustrations that meet the clarity and quality expected by leading journals and conferences.

Choosing the best scientific illustration tool depends on your research workflow, design experience, and the type of visuals you create. Template-driven platforms like Mind the Graph and BioRender are ideal for quick, accurate figures with minimal effort. Advanced vector tools such as Adobe Illustrator, Affinity Designer, and Inkscape are better suited for highly customized or complex illustrations.

Consider whether you need an online platform or offline software, built-in scientific icon libraries, collaboration features, and how much time you can invest in learning design tools. Ultimately, the right choice is the one that helps you create clear, journal-ready visuals efficiently and accurately.

If you want to simplify the process of creating scientific illustrations and enhance the impact of your research visuals, sign up for Mind the Graph and explore its scientific templates and vast illustration library. It’s one of the easiest ways to elevate your posters, graphical abstracts, and publications so you can communicate your findings effectively and regain time to focus on your research.


Data visualization is the graphical representation of data, using common graphics such as pie charts and plots, to support understanding and communication. It conveys complex relationships in data in an easy-to-understand way, which makes it a critical part of research. For example, a trend that takes considerable effort to spot in a table of numbers often becomes immediately clear once the data are plotted.

The way information is visually presented can influence human perception. Consider the pie chart, a visual representation designed to display how individual components contribute to a whole. It offers a simple way to organize data, allowing users to easily compare the size of each component with the others. Therefore, if a researcher needs to visualize proportions or relative contributions within a single category, a pie chart serves as a straightforward visualization tool.

Let’s explore how to make pie charts, common pie chart uses, pie chart examples, and more!

A simple pie chart definition is “a circular statistical graphic that is divided into radial slices to illustrate numerical proportion.” In this chart type, each categorical value corresponds to a single slice, and the size of that slice (measured by its area, arc length, and central angle) is designed to be proportional to the quantity it represents. Thus, the main strength of the pie chart lies in conveying data at a glance by instantly communicating the part-to-whole relationship.
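Why can area, arc length, and central angle all serve as the measure of a slice? For a circle of radius $r$, both the arc length and the area of a sector grow linearly with its central angle. Writing $v_i$ for the value of category $i$ (notation introduced here for illustration), a short derivation shows that all three measures give the same share:

$$
\theta_i = 2\pi\,\frac{v_i}{\sum_j v_j}, \qquad s_i = r\,\theta_i, \qquad A_i = \tfrac{1}{2}\,r^2\,\theta_i
$$

$$
\frac{s_i}{2\pi r} \;=\; \frac{A_i}{\pi r^2} \;=\; \frac{\theta_i}{2\pi} \;=\; \frac{v_i}{\sum_j v_j}
$$

Each slice therefore occupies the same fraction of the circumference, of the disk's area, and of the full turn. In degrees, this is $\theta_i = 360^\circ \times v_i / \sum_j v_j$.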

Did you know that Florence Nightingale, a pioneer of data visualization, used an adaptation of the pie chart, the polar area diagram (or rose diagram), as early as 1858? She used it to illustrate seasonal sources of patient mortality in military hospitals during the Crimean War, demonstrating that deaths due to disease (represented by large segments) far outweighed deaths caused by wounds or other factors.

Pie charts are suited only to visualizing proportions, where the primary objective is to show each group’s contribution relative to the total. They are especially useful for highlighting a slice whose proportion is close to a common fraction such as 25% or 50%, and they are highly effective when simplicity, quick comprehension, and a compact chart are paramount. Note that pie charts are inappropriate for non-proportional data (for example, percentages that sum to more than 100%) or when the goal is to compare groups with each other rather than with the whole.

In modern social science, pie charts or effective alternatives are employed for data where the components must sum to 100%. Relevant pie chart examples include distribution of aggregate income, population demographics, and election results.
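The requirement that the components sum to 100%, together with the common guideline of keeping to five or fewer categories, can be turned into a quick pre-flight check before drawing a pie chart. This is a minimal Python sketch; the function name `pie_chart_warnings`, the 0.5-point rounding tolerance, and the survey numbers are all hypothetical choices made for illustration.

```python
def pie_chart_warnings(values: dict[str, float], as_percentages: bool = False,
                       max_categories: int = 5, tolerance: float = 0.5) -> list[str]:
    """Return reasons why `values` may be a poor fit for a pie chart.

    An empty list means no problems were detected. The tolerance (an
    assumption) absorbs rounding in published percentages.
    """
    warnings = []
    if any(v < 0 for v in values.values()):
        warnings.append("negative values cannot be drawn as slices of a whole")
    total = sum(values.values())
    if as_percentages and abs(total - 100) > tolerance:
        warnings.append(f"percentages sum to {total:g}%, not 100%, "
                        "so the categories are not parts of one whole")
    if len(values) > max_categories:
        warnings.append(f"{len(values)} categories; consider a bar chart "
                        f"above {max_categories}")
    return warnings


# Hypothetical multiple-answer survey question: respondents could tick several
# options, so the shares overlap and sum to more than 100%.
print(pie_chart_warnings({"Email": 70, "Phone": 55, "Chat": 20}, as_percentages=True))
```

Here the check flags the 145% total, which signals that a bar chart of each option's share is the better choice.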

To handle complex data, researchers may turn to pie chart subtypes. For instance, treemaps can break down cumulative global CO2 emissions by country, and sunburst diagrams may be used for visualizing hierarchical data, such as an employee directory of a company divided by country and department, where the size of the segment represents the number of employees.
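The treemap idea can be sketched with the original slice-and-dice layout, chosen here for brevity: each level of the hierarchy cuts its rectangle into strips whose areas are proportional to their totals, alternating between vertical and horizontal cuts. Many charting libraries instead default to a "squarified" layout (D3's treemap layout, for example), which avoids long, thin rectangles. The function names, the nested-dictionary input, and the head counts below are hypothetical, and every leaf value is assumed to be positive.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    label: str  # path such as "Norway/Research"
    x: float
    y: float
    w: float
    h: float


def node_size(node) -> float:
    """A leaf is a number; a branch is a dict whose size is the sum of its children."""
    if isinstance(node, dict):
        return sum(node_size(child) for child in node.values())
    return node


def slice_and_dice(tree: dict, x: float = 0.0, y: float = 0.0, w: float = 1.0,
                   h: float = 1.0, vertical: bool = True, prefix: str = "") -> list[Rect]:
    """Lay out a nested dict as a treemap inside the rectangle (x, y, w, h)."""
    total = node_size(tree)
    rects, offset = [], 0.0
    for label, child in tree.items():
        share = node_size(child) / total
        if vertical:   # cut the width into strips
            cx, cy, cw, ch = x + offset * w, y, share * w, h
        else:          # cut the height into strips
            cx, cy, cw, ch = x, y + offset * h, w, share * h
        path = prefix + label
        if isinstance(child, dict):
            # Recurse into the child's rectangle, switching cut direction
            rects += slice_and_dice(child, cx, cy, cw, ch, not vertical, path + "/")
        else:
            rects.append(Rect(path, cx, cy, cw, ch))
        offset += share
    return rects


# Hypothetical employee directory: country -> department -> head count
staff = {"Norway": {"Research": 30, "Sales": 10},
         "Brazil": {"Research": 20, "Sales": 40}}
for r in slice_and_dice(staff):
    print(f"{r.label:<16} area = {r.w * r.h:.2f}")
```

In a unit square, the four rectangles have areas of 0.30, 0.10, 0.20, and 0.40, matching each department's share of the 100 employees.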

How to Use the Pie Chart Formula

To create a pie chart, you first need the pie chart formula, which gives the angle of each slice. The steps to calculate it are as follows:

1. Add up the individual numbers to calculate the total number.

2. Divide 360 (the total number of degrees in the pie chart) by the total number. The resulting value is the angle, in degrees, that represents one unit of frequency.

3. Multiply each frequency by this angle value to get the angle for each segment of the pie chart. Remember, the segment angles should add up to 360°.

Put simply, to calculate the angle of each slice of a pie chart, the pie chart formula is as follows:

Segment angle = (360° ÷ total frequency) × category frequency
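The three steps above translate directly into a few lines of Python. This is a minimal sketch, assuming the category counts arrive as a dictionary of non-negative integers; the function name `segment_angles` and the categories "A", "B", and "C" are hypothetical. `Fraction` keeps the angles exact, so they add up to exactly 360° even when 360 is not evenly divisible by the total.

```python
from fractions import Fraction


def segment_angles(frequencies: dict[str, int]) -> dict[str, Fraction]:
    """Return the central angle, in degrees, of each pie chart slice."""
    values = frequencies.values()
    if any(f < 0 for f in values):
        raise ValueError("frequencies must be non-negative")
    total = sum(values)                       # step 1: total frequency
    if total == 0:
        raise ValueError("frequencies must not all be zero")
    degrees_per_unit = Fraction(360, total)   # step 2: 360 / total frequency
    # step 3: category frequency x degrees per unit
    return {name: f * degrees_per_unit for name, f in frequencies.items()}


angles = segment_angles({"A": 1, "B": 2, "C": 3})
print({name: float(a) for name, a in angles.items()})
```

For counts of 1, 2, and 3, the total is 6, each unit is worth 360 ÷ 6 = 60°, and the slices measure 60°, 120°, and 180°.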

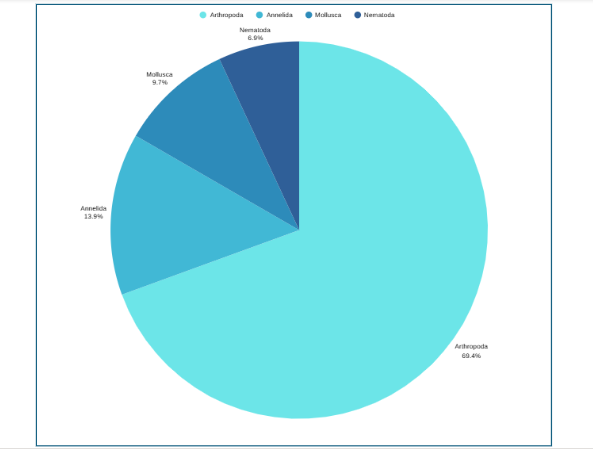

Now that you understand the pie chart formula, the next question is how to draw a pie chart. Suppose an ecologist has been tasked with understanding how species in a forest understory are distributed across various animal phyla. Here are the steps she will follow:

Step 1: Collect the data.

Step 2: Tabulate the data:

| Animal phyla | Number of species |
| --- | --- |
| Arthropoda | 50 |
| Mollusca | 7 |
| Annelida | 10 |
| Nematoda | 5 |

These data can be represented as a pie chart using the circle graph formula, that is, the pie chart formula explained above.

Step 3: Add up the individual numbers to calculate the total number. In this case: 50 + 7 + 10 + 5 = 72.

Step 4: Divide 360 (the total number of degrees in the pie chart) by the total number. In this case: 360 ÷ 72 = 5, so each species is equal to 5° in the pie chart.

Step 5: Multiply each frequency by this angle value to get the angle for each segment of the pie chart:

| Animal phyla | Number of species | Angle |
| --- | --- | --- |
| Arthropoda | 50 | 50 × 5° = 250° |
| Mollusca | 7 | 7 × 5° = 35° |
| Annelida | 10 | 10 × 5° = 50° |
| Nematoda | 5 | 5 × 5° = 25° |

Step 6: Draw a circle and use a protractor to measure the angle of each sector (see Figure 1). Alternatively, you can use an online pie chart maker.

Step 7: Label the sections and add annotations and a legend.

Pie Chart Examples

Beyond the basic standard pie chart, there are several variants based on the circular concept, which are often used as alternatives for specific visualization needs.

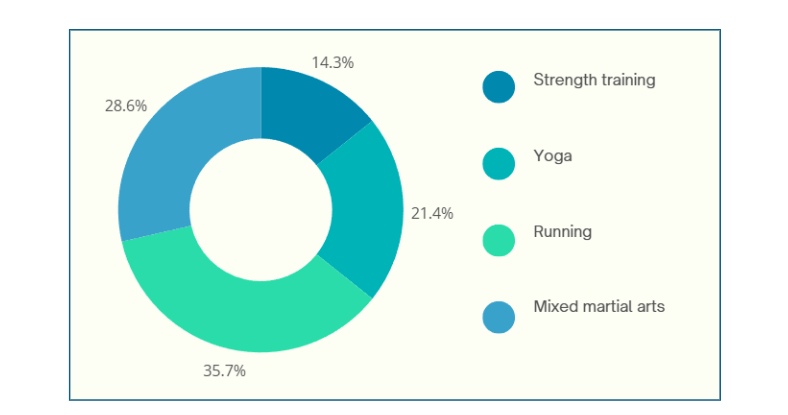

1. Donut chart: This is a variant of the pie chart with a hole in the center. Its primary purpose is to visualize data as a percentage of the whole (Figure 2).

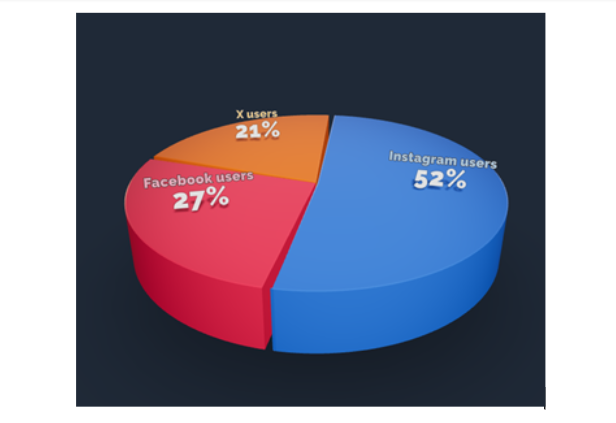

2. 3D Pie chart: This variant gives an aesthetic, three-dimensional look (see Figure 3). However, the use of perspective distorts the proportional encoding of the data, making these plots difficult to interpret accurately.

3. Exploded pie chart: This chart features one or more sectors “pulled out” from the rest of the disk to highlight a specific sector for emphasis.

4. Square chart (waffle chart): This chart uses color-coded squares, typically arranged in a 10 × 10 grid, where each cell represents 1%, instead of a circle, to represent proportions.

5. Polar area diagram (Nightingale diagram/rose diagram): This chart is similar to a pie chart, but the sectors all have equal angles and differ in how far they extend from the center (radius).

6. Treemaps: This is a “square” version of the pie chart that uses a rectangular area divided into sections; the area of each section is proportional to the corresponding value. Treemaps can handle hierarchies and visualize many more categories than a traditional pie chart.
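In a square (waffle) chart, each of the 100 cells in a 10 × 10 grid stands for 1%, so every category needs a whole number of cells, and together they must fill the grid exactly. Rounding each share independently can leave 99 or 101 cells. The sketch below avoids this with the largest remainder method, which is one common choice and an assumption here rather than something the chart type prescribes. The function name `waffle_cells` is hypothetical. The example reuses the ecologist's species counts from the worked pie chart example.

```python
import math


def waffle_cells(counts: dict[str, int], grid_cells: int = 100) -> dict[str, int]:
    """Split the cells of a waffle chart among categories.

    Each category first gets the whole-number part of its exact share;
    leftover cells go to the categories with the largest fractional parts
    (largest remainder method), so the cells always sum to grid_cells.
    """
    total = sum(counts.values())
    exact = {k: grid_cells * v / total for k, v in counts.items()}
    cells = {k: math.floor(share) for k, share in exact.items()}
    leftover = grid_cells - sum(cells.values())
    # Hand out the remaining cells, largest fractional part first
    by_remainder = sorted(exact, key=lambda k: exact[k] - cells[k], reverse=True)
    for k in by_remainder[:leftover]:
        cells[k] += 1
    return cells


species = {"Arthropoda": 50, "Mollusca": 7, "Annelida": 10, "Nematoda": 5}
print(waffle_cells(species))
```

The exact shares are about 69.44, 9.72, 13.89, and 6.94 cells. Taking whole-number parts fills 97 cells, and the three leftover cells go to Nematoda, Annelida, and Mollusca, which have the largest fractional parts. The result is 69, 10, 14, and 7 cells.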

When designing pie charts, careful consideration must be given to their specific limitations to ensure accurate and effective communication of data.

Dos when creating a pie chart

Don’ts when creating a pie chart

Pie charts have a specific, narrow use case, which contributes both to their enduring popularity and the intense criticism from data visualization experts.

Pie chart advantages

Pie chart disadvantages

Pie charts are a simple yet powerful tool for showing how each component contributes to a whole, which makes them valuable for part‑to‑whole comparisons in research papers. They communicate proportions quickly and intuitively, and they work best when built with a suitable pie chart maker and an accurate pie chart formula, so that all segments sum to 100%. However, they are limited to data whose segments are parts of a single whole. Researchers should therefore use them sparingly, ideally with five or fewer categories, clear labels, and accessible annotations that align with recommended pie chart uses. Alternatives such as donut charts, waffle charts, and treemaps can meet specialized visualization needs and overcome several pie chart disadvantages. However, when the task involves precise comparisons between groups, complex data structures, or large datasets, other chart types are more suitable. To get the most out of pie chart advantages, avoid 3D distortions, unnecessary colors, and non‑proportional data; follow best practices for creating pie charts; and always prioritize clarity and accessibility in visual design.

Pie charts show how each part contributes to a whole, which makes them ideal for visualizing proportions, while bar charts are better for comparing values across different categories or groups.

A 3D pie chart presents data with a three-dimensional look, but it distorts proportions and should generally be avoided for accurate interpretation of research data.

Pie charts are most effective with five or fewer categories; more slices can make interpretation difficult and cluttered.

Popular pie chart makers include Google Sheets, Microsoft Excel, Flourish Studio, and Tableau.


Completing a thesis is a major milestone, but turning it into a publishable research paper is a different challenge altogether. Many researchers struggle with condensing a long, descriptive thesis into a clear, concise manuscript that meets journal standards.

To help you bridge this gap, Mind the Graph, along with Paperpal, is thrilled to announce the next session in The AI Exchange Series: How to Transform your Thesis into a Research Paper.

Date: Thursday, November 27, 2025

Time: 8:30 AM EST | 1:30 PM GMT | 7:00 PM IST

Language: English

Duration: 1 hour

In this session, we’ll break down the thesis-to-paper conversion process with clear, practical guidance on:

Dr. Faheem Ullah

Assistant Professor and Academic Influencer

Dr. Faheem Ullah is an award-winning academic and consultant specializing in AI and research methodologies. He holds a PhD and Postdoc in Computer Science from the University of Adelaide and has earned multiple distinctions, including two Gold Medals and a Bronze Medal. With over 40 publications in leading journals and conferences and experience supervising more than 40 undergraduate, master’s, and PhD researchers, Dr. Faheem brings deep insight into academic writing and ethical AI use.

A respected voice in the global research community, he has attracted an audience of over 300,000 followers, sharing advice on research skills, publishing, and responsible AI.

Publishing from your thesis can feel overwhelming, but the right guidance makes the process faster and more structured. This webinar will help you identify what’s publishable, avoid common mistakes, and reshape your thesis into a clear, journal-ready manuscript. Whether you’re a master’s or PhD researcher, you’ll walk away with practical steps and more confidence in your path to publication.

Don’t miss this chance to learn how to turn your thesis into a clear, compelling manuscript and take the next big step in your research journey – Register for free today!


AI has quietly become a co-author in modern research. From grammar checks to literature reviews, tools powered by artificial intelligence are helping academics write faster and smarter. But there’s a growing challenge — most researchers don’t disclose their use of AI, even when journals require it.

In fact, recent data shows that over 75% of authors using AI fail to mention it in their submissions. With journal policies tightening and transparency becoming a pillar of academic integrity, understanding how and when to disclose AI use has become essential.

To unpack this important topic, Mind the Graph, together with Paperpal, is hosting a free webinar as part of The AI Exchange Series.

Date: Monday, November 10, 2025

Time: 1:00 PM EDT | 5:00 PM GMT | 10:30 PM IST

Language: English

Duration: 1 hour

This session will help researchers and educators navigate the grey areas of AI use in academia. You’ll learn:

Plus, join the live Q&A with our expert to get answers to your own questions about ethical AI use in research.

Professor Tina Austin

University of Southern California

Professor Tina Austin is an AI ethics consultant, biomedical researcher, and educator who helps universities and organizations adopt AI responsibly. She has taught at UCLA, USC, CSU, and Caltech in subjects ranging from regenerative medicine and computational biology to AI ethics and communication.

Tina is also the founder of GAInable.ai, a platform that empowers educators to use generative AI ethically and creatively. Recognized among ASU+GSV’s Top Leading Women in AI and as a Microsoft Innovative Educator Expert, she advises the California Department of Education and the Los Angeles AI Taskforce on responsible and inclusive AI adoption in education.

If you’re a student, researcher, or educator exploring AI in your academic writing, this session is for you. Gain the clarity and confidence to use AI tools responsibly while ensuring your work meets the highest ethical and publication standards.

Don’t miss this chance to learn directly from a global AI ethics expert and strengthen your approach to AI use in academia – Register for free today!

Over the past few years, AI tools have become crucial for researchers, simplifying everything from literature discovery to manuscript writing and editing. The challenge lies in choosing the AI tools that best align with your specific scholarly needs. Some focus on improving clarity and grammar, others on fact-checking and citation accuracy, while a few are designed to assist with overall manuscript writing and preparation. Selecting the right tool can make the difference between a paper that struggles through revisions and one that is submission-ready.

In this blog, we’ll review the top seven AI tools for research (Paperpal, Grammarly, R Discovery, Scholarcy, Scite, Claude, and ChatGPT), highlighting how they can support writing, editing, and manuscript preparation.

If you’re looking for an AI tool built exclusively for academics, Paperpal is hard to beat. Unlike generic AI research tools, it’s designed around the needs of researchers, helping you draft, rewrite, cite, and polish manuscripts with ease. Whether you’re working on a thesis, journal article, or grant proposal, Paperpal acts like an always-available mentor guiding you toward a submission-ready draft.

Pros:

Cons:

For students, PhD scholars, and journal authors, Paperpal works as a reliable academic assistant, helping you write clearly, check compliance, and submit polished manuscripts with confidence.

And if you want to pair polished writing with compelling visuals, Mind the Graph helps create professional infographics, figures, and graphical abstracts to make your research stand out.

Grammarly is an AI writing assistant that has been helping students and professionals produce clear, polished writing for over a decade. It combines grammar checking, proofreading, and style enhancements with generative AI features through GrammarlyGO, which can create outlines, rewrite sentences, and even generate thesis statements. For academics, its plagiarism checker scans text against 16B+ web pages and academic databases, providing originality scores.

Pros:

Cons:

Grammarly is one of the best AI tools for research when you need a solution for everyday writing, grammar checks, and readability. However, for researchers seeking deeper academic precision, it pairs best with specialized tools.

R Discovery is an AI-powered literature discovery tool that helps researchers quickly find and understand relevant academic papers. With access to 250M+ research papers, it cuts down hours of searching by offering personalized feeds, summaries, and topic alerts. Its AI Research Assistant also helps answer questions directly from papers, making literature reviews faster and more efficient.

Pros:

Cons:

R Discovery is ideal for students, PhD researchers, and academics who need to stay updated with recent literature, discover new papers, and manage their reading list efficiently. It doesn’t write for you, but it ensures you always have the most relevant research at your fingertips—making literature reviews significantly faster and more organized.

Scholarcy is an AI-powered summarization tool that helps researchers quickly understand dense academic papers, articles, reports, and book chapters. Instead of reading page after page, Scholarcy breaks documents into clear, digestible flashcards that highlight key points, references, and even figures or tables. It’s not designed to write for you but to save time by making complex research easier to navigate.

Pros:

Cons:

Scholarcy won’t draft your manuscript, but it’s an excellent AI tool for researchers who want to speed up reading, simplify literature reviews, and keep track of references.

Scite.ai is a research evaluation tool that helps you go beyond counting citations. Instead of just showing how often a paper is cited, Scite tells you why by showing whether other researchers support, question, or simply mention the paper’s findings. This makes it an invaluable aid for critical literature reviews, identifying influential studies, and avoiding unreliable references.

Pros:

Cons:

Scite.ai acts like a quality filter for research, giving you the context behind citations so you can decide which papers to trust and which to question. For anyone conducting a systematic review or building a strong reference list, it’s one of the best AI tools for researchers today.

ChatGPT is one of the most powerful and widely used AI research tools today. While it isn’t built specifically for manuscript writing or editing, it excels at brainstorming ideas, creating outlines, simplifying complex topics, and providing instant explanations. For researchers, it can act as a 24/7 assistant helping with definitions, structuring content, or clarifying concepts.

Pros:

Cons:

ChatGPT isn’t the best choice for drafting research papers, but it’s an excellent brainstorming and learning partner. Use it to clarify concepts, generate outlines, or get quick perspectives, but always verify the information with trusted academic sources.

Claude is one of the newer but fast-rising AI assistants. While it isn’t as feature-heavy as ChatGPT, it excels at handling long, complex documents and producing natural, human-like prose. With its 200K-token context window, Claude can process entire books, research papers, or lengthy reports, making it particularly valuable for academics working with large datasets or manuscripts.

Pros:

Cons:

Claude may not replace specialized AI tools for research writing, but it shines when you need to analyze long documents, summarize large texts, or rewrite content with clarity. For researchers dealing with massive academic papers or thesis drafts, its extended context window is a game-changer.

While the seven AI tools above excel at literature search, academic writing, and editing, tools such as Perplexity, Wordtune, and Yomu AI are also popular among students and researchers.

Each of these tools complements the seven reviewed above, especially when used for brainstorming ideas, refining phrasing, or validating information quickly.

While AI research tools streamline writing, reviewing, and referencing, impactful research communication extends beyond text. Visuals play an equally powerful role in how your findings are understood and remembered, and that’s where Mind the Graph excels.

Whether you’re creating a graphical abstract, conference poster, scientific figure, or slide deck, Mind the Graph empowers you to design visually consistent, professional-quality graphics — no design background needed.

With over 75,000 scientifically accurate illustrations, discipline-specific templates, and drag-and-drop editing, Mind the Graph helps researchers transform complex data into visuals that reviewers and readers instantly grasp. You can create everything from schematic diagrams and research workflows to molecular structures and infographics — all while maintaining journal-ready formatting standards.

With so many AI tools for researchers available today, the smartest approach is to start with clarity: what do you want the tool to do for you? If your main goal is manuscript writing or manuscript editing, an academic-first platform like Paperpal is ideal. If you need quick fact-checks, literature searches, or citation insights, options like R Discovery, Scholarcy, or Scite might serve you better.

1. Define your goal: Are you drafting, editing, summarizing, or fact-checking? Match the tool to the task—Paperpal for manuscript writing and editing, Scholarcy for summaries, Scite for citation analysis, etc.

2. Start with a base tool: Many researchers begin with general-purpose AI tools such as ChatGPT or Claude. These are flexible but less precise for academic work, so pair them with specialized tools like Paperpal for journal-ready writing.

3. Check features and security: Choose tools that protect your data and integrate with your preferred workflow (MS Word, Google Docs, Overleaf). For academics, the security and confidentiality of manuscripts are non-negotiable.

4. Consider budget and value: Free plans are great for testing, but the richer features of advanced AI research tools are often available only in paid tiers. Investing in Prime or Premium plans often pays off in higher-quality manuscripts and saved time.

5. Evaluate the learning curve: Some tools (like Overleaf) require more technical knowledge, while others (like Paperpal or Grammarly) are intuitive. Select one that fits your comfort and expertise.

6. Test before you commit: Almost all top AI tools for research offer a free trial or free plan that allows you to explore their features. Test them side by side to see which handles your writing style best, whether their suggestions and corrections are contextually accurate, and how smoothly the interface fits your workflow.

In short: Don’t just pick the most popular AI research tool; select the one that aligns with your research stage, writing goals, technical requirements, and integrity needs. For most academics, combining a general AI tool with a specialized academic tool like Paperpal gives the best results for research, providing productivity, precision, and trust.

Paperpal is built on these principles, offering academic-focused manuscript writing and academic editing features while keeping your work secure and original.

AI is reshaping how researchers write, edit, and prepare manuscripts. The best AI tools for research save time, elevate writing quality, and enhance productivity across every stage of the research process. Yet their value lies in how responsibly they are used—with transparency, human oversight, and a strong commitment to academic integrity. The takeaway is simple: Explore, test, and integrate AI thoughtfully into your workflow. When used wisely, AI research tools can become trusted partners, helping you focus on what matters most: advancing knowledge, achieving excellence, and driving scientific innovation.

Among the many AI tools for researchers, Paperpal stands out as an academic-first platform for manuscript writing and editing. It also offers reliable support with literature discovery, citation formatting, and submission readiness checks, which makes it a trusted all-in-one AI research assistant. R Discovery is perfect for staying updated with tailored reading recommendations and advanced features that boost productivity, while Mind the Graph empowers researchers to communicate their research findings visually. Together, these tools provide end-to-end support for researchers on the path to success; the best part is that they are available in one best-in-class AI research bundle when you buy the Mind the Graph Prime plan.