Quantum computing is an emerging technology that has the potential to revolutionize the way we process information. By leveraging the principles of quantum mechanics, quantum computers can, for certain problems, perform calculations that are infeasible for classical computers. This article provides an introduction to quantum computing, exploring its basic principles and its potential applications.

What is quantum computing?

Quantum computing is a type of computing that uses quantum mechanical phenomena, such as superposition and entanglement, to perform operations on data. It is based on the principles of quantum mechanics, which describes the behavior of matter and energy at a very small scale, such as the level of atoms and subatomic particles.

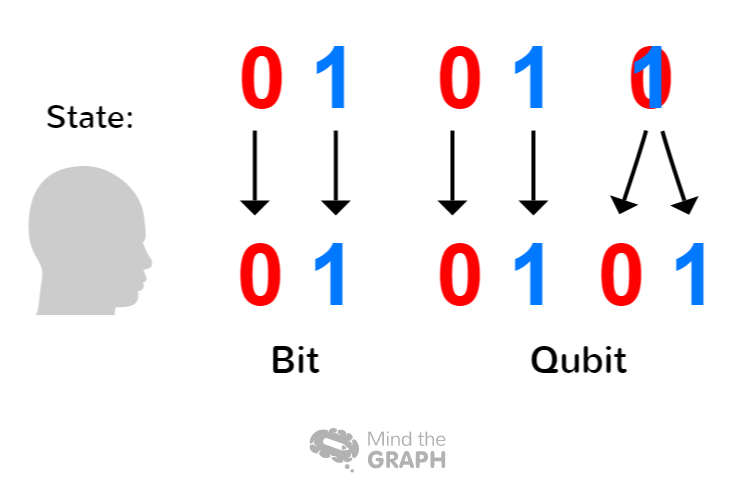

In traditional computing, the basic unit of information is a bit, which can be either a 0 or a 1. In contrast, quantum computing uses qubits (quantum bits), which can exist in a weighted combination of 0 and 1 at the same time, a state known as superposition. When a qubit is measured, it yields either 0 or 1, with probabilities set by those weights. This property allows quantum computers to perform certain types of calculations much faster than classical computers.
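As a rough illustration of superposition, a single qubit can be modeled as a pair of complex amplitudes, one for 0 and one for 1. The sketch below is a classical simulation with NumPy, not code for real quantum hardware; the helper names `make_qubit` and `measurement_probabilities` are made up for this example.

```python
import numpy as np

# Basis states |0> and |1> as amplitude vectors.
ZERO = np.array([1.0, 0.0], dtype=complex)
ONE = np.array([0.0, 1.0], dtype=complex)

def make_qubit(alpha, beta):
    """Return the normalized state alpha|0> + beta|1> (hypothetical helper)."""
    state = alpha * ZERO + beta * ONE
    norm = np.linalg.norm(state)
    if norm == 0:
        raise ValueError("amplitudes cannot both be zero")
    return state / norm

def measurement_probabilities(state):
    """Chance of reading 0 and of reading 1: the squared amplitude magnitudes."""
    return np.abs(state) ** 2

# An equal superposition: both outcomes are equally likely.
plus = make_qubit(1, 1)
probs = measurement_probabilities(plus)
print(probs)  # approximately [0.5 0.5]
```

The two probabilities always sum to 1, which is why the amplitudes are normalized before use.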

Another important aspect of quantum computing is entanglement, which refers to a phenomenon where two particles can become linked in such a way that the state of one cannot be described independently of the other, so that measurement results on the two are correlated no matter how far apart they are. Quantum circuits harness this property by using gates that act on multiple qubits at once.
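Entanglement can be sketched with the same kind of classical simulation. The example below assumes a standard textbook construction: a Hadamard gate on the first of two qubits, followed by a controlled-NOT (CNOT) gate, which produces a so-called Bell state. The function name `bell_state` is chosen for illustration only.

```python
import numpy as np

# Standard single- and two-qubit gate matrices.
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)  # Hadamard
I2 = np.eye(2, dtype=complex)                                # identity
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)  # first qubit controls the second

def bell_state():
    """Build (|00> + |11>) / sqrt(2) starting from |00>."""
    state = np.zeros(4, dtype=complex)
    state[0] = 1.0                      # amplitudes ordered 00, 01, 10, 11
    state = np.kron(H, I2) @ state      # Hadamard on the first qubit only
    state = CNOT @ state                # flip the second qubit if the first is 1
    return state

bell = bell_state()
bell_probs = np.abs(bell) ** 2
for label, p in zip(["00", "01", "10", "11"], bell_probs):
    print(label, round(float(p), 3))
```

Only the outcomes 00 and 11 have non-zero probability, so reading one qubit tells you what the other will read. That correlation is what the text means by the qubits being linked.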

Quantum computing has the potential to revolutionize many fields, such as cryptography, chemistry, and optimization. However, it is still a relatively new and developing technology, and there are significant technical and practical challenges that need to be overcome before it can be widely adopted.

What is quantum theory?

Quantum theory is a fundamental theory in physics that describes the behavior of matter and energy at a very small scale, such as the level of atoms and subatomic particles. It was developed in the early 20th century to explain phenomena that could not be explained by classical physics.

One of the key principles of quantum theory is the idea of wave-particle duality, which states that particles can exhibit both wave-like and particle-like behavior. Another important concept in quantum theory is the uncertainty principle, which states that it is impossible to know both the position and momentum of a particle at the same time with arbitrary precision.
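In its usual textbook form, the uncertainty principle for position and momentum is written as an inequality. The symbols here are the standard ones rather than anything defined in this article: Δx and Δp are the spreads (standard deviations) of position and momentum, and ħ is the reduced Planck constant.

```latex
\[
  \Delta x \, \Delta p \;\ge\; \frac{\hbar}{2}
\]
```

The smaller the spread in position, the larger the spread in momentum must be, and vice versa.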

Quantum theory also introduces the concept of superposition, in which a system can exist in a combination of several states at once. The theory has revolutionized our understanding of matter and energy at a fundamental level and has led to numerous practical applications, such as lasers, transistors, and other modern technologies.

How does quantum computing work?

Quantum computing is a highly specialized field that requires expertise in quantum mechanics, computer science, and electrical engineering.

Here is a general overview of how quantum computing works:

Quantum Bits (qubits): Quantum computing uses qubits, which are similar to classical bits in that they represent information, but with an important difference. While classical bits can only have a value of either 0 or 1, qubits can exist in a superposition of both states at the same time.

Quantum Gates: Quantum gates are operations performed on qubits that allow for the manipulation of the state of the qubits. They are analogous to classical logic gates but with some important differences due to the nature of quantum mechanics. Unlike classical gates, quantum gates can operate on qubits in superposition.

Quantum Circuits: Similar to classical circuits, quantum circuits are made up of a series of gates that operate on qubits. However, unlike classical circuits, quantum circuits can include gates that act on several qubits at once and entangle them, so that the qubits must be described as a single combined state.

Quantum Algorithms: Quantum algorithms are algorithms designed to be run on quantum computers. They are typically designed to take advantage of the unique properties of qubits and quantum gates to perform certain calculations more efficiently than classical algorithms.

Quantum Hardware: Quantum hardware is the physical implementation of a quantum computer. Several different approaches to quantum hardware are being pursued, including superconducting qubits, trapped-ion qubits, and topological qubits.
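The gate and circuit ideas in this overview can be sketched in a few lines. In the simulation below, a gate is a small matrix, a circuit is an ordered list of gates, and running the circuit means multiplying the state by each gate in turn. The X and Hadamard matrices are the standard ones; `is_unitary` and `run_circuit` are hypothetical helper names, and this is a classical toy model rather than how any particular quantum computer is programmed.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)                # quantum NOT gate
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)  # Hadamard gate

def is_unitary(gate):
    """Quantum gates must be unitary: gate-dagger times gate is the identity."""
    return np.allclose(gate.conj().T @ gate, np.eye(gate.shape[0]))

def run_circuit(state, gates):
    """Apply each gate to the state, in the order given."""
    for gate in gates:
        state = gate @ state
    return state

zero = np.array([1, 0], dtype=complex)   # the state |0>

flipped = run_circuit(zero, [X])         # X turns |0> into |1>
superposed = run_circuit(zero, [H])      # H creates an equal superposition
restored = run_circuit(zero, [H, H])     # applying H twice undoes it

print(np.round(flipped.real, 3))
print(np.round(np.abs(superposed) ** 2, 3))
print(np.round(restored.real, 3))
```

The last line shows one difference from classical logic: because gates are unitary, they are reversible, and here the Hadamard gate happens to be its own inverse.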

What are the principles of quantum computing?

Quantum computing is based on several fundamental principles of quantum mechanics. Here are some of the key principles that underpin quantum computing:

Superposition: In quantum mechanics, particles can exist in multiple states simultaneously. In quantum computing, qubits (quantum bits) can exist in a superposition of 0 and 1, allowing a quantum algorithm to act on many possible values at once, although a measurement at the end returns only a single result.

Entanglement: Entanglement is a phenomenon in which two or more particles can become correlated in such a way that their quantum states are linked. In quantum computing, entangled qubits can be used to perform certain calculations much faster than classical computers.

Uncertainty principle: The uncertainty principle states that it is impossible to know both the position and momentum of a particle with complete accuracy. More generally, measuring one property of a quantum system can disturb others. This has important implications for quantum computing, because measuring a qubit can change its state.

Measurement: Measurement is a fundamental part of quantum mechanics, as it collapses the superposition of a particle into a definite state. In quantum computing, measurements are used to extract information from qubits, but they also destroy the superposition state of the qubits.
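The effect of measurement can also be sketched in simulation. The example below assumes the usual rule that each outcome occurs with probability equal to its squared amplitude magnitude, and that the state afterwards is the basis state that was observed. The function name `measure` and the fixed random seed are choices made for this illustration.

```python
import numpy as np

def measure(state, rng):
    """Sample one outcome and return it with the collapsed state."""
    probs = np.abs(state) ** 2
    probs = probs / probs.sum()              # guard against rounding drift
    outcome = int(rng.choice(len(state), p=probs))
    collapsed = np.zeros_like(state)
    collapsed[outcome] = 1.0                 # superposition is gone after measuring
    return outcome, collapsed

rng = np.random.default_rng(seed=0)          # seeded so runs are repeatable
plus = np.array([1, 1], dtype=complex) / np.sqrt(2)  # equal superposition

first, after = measure(plus, rng)
second, _ = measure(after, rng)              # measuring again gives the same answer
print(first, second)

# Measuring many fresh copies shows the underlying probabilities.
samples = [measure(plus, rng)[0] for _ in range(1000)]
counts = np.bincount(samples, minlength=2)
print(counts)                                # roughly half zeros, half ones
```

A single measurement reveals only one bit and destroys the superposition, which is why quantum algorithms are arranged so that the useful answer is likely to appear when the qubits are finally read.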

Uses of quantum computing

Here are some of the potential uses of quantum computing:

Cryptography: Quantum computers could potentially break many of the cryptographic algorithms currently used to secure communications and transactions. However, this threat is also driving the development of new quantum-resistant encryption methods that would remain secure.

Optimization problems: Many real-world problems involve finding the optimal solution from a large number of possible solutions. Quantum computing may be able to solve some of these optimization problems more efficiently than classical computers.

Material science: Quantum computing can simulate the behavior of complex materials at a molecular level, enabling the discovery of new materials with desirable properties such as superconductivity or better energy storage.

Machine learning: Quantum computing can potentially improve machine learning algorithms by enabling the efficient processing of large amounts of data.

Chemistry: Quantum computing can simulate chemical reactions and the behavior of molecules at a quantum level, which can help to design more effective medical drugs and materials.

Financial modeling: Quantum computing could be used to perform financial modeling and risk analysis more efficiently, potentially enabling faster predictions of financial outcomes.

These are just a few examples; the potential applications of quantum computing are vast and varied. However, the technology is still in its early stages, and many challenges need to be overcome before it can be widely adopted for practical applications.

